Concept#

Gaussian Mixture Models are a statistical technique used for approximating the probability distribution of data, known as density estimation.

From the introduction in the previous section, one might ask the motivation of using a linear combination to approximate a probability distribution. The answer is that the linear combination of simple distributions is a flexible model that can approximate a wide variety of probability distributions.

Consider your dataset that exhibits a multimodal distribution (i.e. multiple modes), then a single Gaussian distribution will not be able to capture the distribution of the data.

Intuition#

Simple Bi-Modal Distribution#

The code below does the following:

x_axisis created as a NumPy array of evenly spaced values ranging from -15 to 15 with a step size of 0.001.gaussian_1andgaussian_2are defined as dictionaries representing two normal distributions with given means and standard deviations:Distribution 1 has a mean of -4 and a standard deviation of 2.

Distribution 2 has a mean of 4 and a standard deviation of 2.

The probability density functions (PDFs) for

gaussian_1andgaussian_2are calculated using thenorm.pdffunction from the SciPy library. The PDFs are computed for each value inx_axis, with the respective mean and standard deviation for each distribution.The

pdf_mergedvariable is created by adding the PDFs ofgaussian_1andgaussian_2element-wise, which represents the combined probability density function of both distributions.

Show code cell source

1def get_gaussian_pdf(x: np.ndarray, mu: float, sigma: float) -> np.ndarray:

2 y = norm.pdf(x, mu, sigma)

3 return y

4

5

6def merge_gaussian_pdf(

7 prior: List[float], pdf_1: np.ndarray, pdf_2: np.ndarray, *args: np.ndarray

8) -> np.ndarray:

9 pdfs = [pdf_1, pdf_2, *args]

10

11 assert len(prior) == len(pdfs)

12

13 # w1 * f1 + w2 * f2 + ...

14 return np.sum([prior[i] * pdfs[i] for i in range(len(prior))], axis=0)

Show code cell source

1x_axis = np.arange(-15, 15, 0.001)

2gaussian_1 = {"mean": -4, "std": 2}

3gaussian_2 = {"mean": 4, "std": 2}

4

5pdf_1 = get_gaussian_pdf(x_axis, gaussian_1["mean"], gaussian_1["std"])

6pdf_2 = get_gaussian_pdf(x_axis, gaussian_2["mean"], gaussian_2["std"])

7

8weights_1, weights_2 = 0.5, 0.5

9

10pdf_merged = merge_gaussian_pdf(

11 [weights_1, weights_2], pdf_1, pdf_2

12) # weights_1 * pdf_1 + weights_2 * pdf_2

This code below will create a figure with three subplots, where the first subplot (ax1) contains Distribution 1 and Distribution 2, the second subplot (ax2) contains the Merged Distribution, and the third subplot (ax3) contains Distribution 1 and Distribution 2 as dotted lines and the Merged Distribution as a solid line. All subplots share the same x-axis.

Show code cell source

1# Create a 3x1 grid of subplots with shared x-axis

2fig, (ax1, ax2, ax3) = plt.subplots(nrows=3, ncols=1, figsize=(12, 10), sharex=True)

3

4# Plot Distribution 1 and Distribution 2 on the first subplot (ax1)

5ax1.plot(x_axis, pdf_1, "r:", label="Gaussian 1")

6ax1.plot(x_axis, pdf_2, "b:", label="Gaussian 2")

7ax1.legend()

8

9# Plot Merged Distribution on the second subplot (ax2)

10ax2.plot(x_axis, pdf_merged, "g-", label="Gaussian Merged")

11ax2.legend()

12

13# Plot pdf_1 and pdf_2 as dotted lines, and pdf_merged as solid line on the third subplot (ax3)

14ax3.plot(x_axis, pdf_1, "r:", label="Gaussian 1 (Dotted)")

15ax3.plot(x_axis, pdf_2, "b:", label="Gaussian 2 (Dotted)")

16ax3.plot(x_axis, pdf_merged, "g-", label="Gaussian Merged (Solid)")

17ax3.legend()

18

19# Show the plots

20plt.show();

In this case, we say that

where this distribution is a mixture of two normal distributions with equal weights of 0.5.

The mixture components are:

parametrized by

The mixture weights are:

Generative Story#

Now the generative story of such a mixture model is as follows.

If we have a mixture model with \(K\) components, then we can sample from the mixture model by first sampling a component \(k\) from the categorical distribution with parameters \(\boldsymbol{\pi}\), and then sampling from the \(k\)-th component distribution with parameters \(\boldsymbol{\mu}_k\) and \(\boldsymbol{\Sigma}_k\).

More concretely, if we know the following parameters:

\(\boldsymbol{\pi} = \begin{bmatrix} \pi_1 & \pi_2 \end{bmatrix} = \begin{bmatrix} 0.5 & 0.5 \end{bmatrix}\)

\(\boldsymbol{\mu} = \begin{bmatrix} \mu_1 & \mu_2 \end{bmatrix} = \begin{bmatrix} 2 & -2 \end{bmatrix}\)

\(\boldsymbol{\Sigma} = \begin{bmatrix} \Sigma_1 & \Sigma_2 \end{bmatrix} = \begin{bmatrix} 3 & 1 \end{bmatrix}\)

then we can sample from the mixture model by

first sampling a component \(k\) from the categorical distribution with parameters \(\boldsymbol{\pi}\), which means either \(k = 1\) or \(k = 2\) with equal probability of 0.5.

then once we know which component we sampled from, we can sample from the component distribution, which in this case is a normal distribution with mean \(\mu_k\) and standard deviation \(\Sigma_k\). For example, if we sampled \(k = 1\), then we can sample from the first component distribution with mean \(\mu_1 = 2\) and standard deviation \(\Sigma_1 = 3\).

Note very carefully, this is the “generative” side, in machine learning we are interested in the “inference” side, which is to infer the parameters of the mixture model from the dataset \(\mathcal{S}\)!

Let’s see in code how we can sample from a mixture model, and that if we sample enough data points, the empirical distribution of the samples will converge to the true distribution of the mixture model.

Show code cell source

1def generate_x(

2 prior: List[float],

3 mu: List[float],

4 sigma: List[float],

5 num_samples: int = 100,

6 num_gaussians: int = 2,

7) -> List[float]:

8 X = []

9 for _ in range(num_samples):

10 # Select a Gaussian based on the prior

11 selected_gaussian = np.random.choice(num_gaussians, p=prior)

12 # Sample from the selected Gaussian

13 x = norm.rvs(

14 loc=mu[selected_gaussian], scale=sigma[selected_gaussian], size=None

15 )

16 X.append(x)

17 return X

Show code cell source

1x_axis = np.arange(-5, 15, 0.001)

2prior = [0.4, 0.4, 0.2] # weights

3mu = [0, 5, 10]

4sigma = [1, 1, 1]

5

6gaussian_1 = norm.pdf(x_axis, loc=mu[0], scale=sigma[0])

7gaussian_2 = norm.pdf(x_axis, loc=mu[1], scale=sigma[1])

8gaussian_3 = norm.pdf(x_axis, loc=mu[2], scale=sigma[2])

9

10mixture_pdf = merge_gaussian_pdf(

11 prior, gaussian_1, gaussian_2, gaussian_3

12) # prior[0] * gaussian_1 + prior[1] * gaussian_2 + prior[2] * gaussian_3

Show code cell source

1sample_sizes = [100, 500, 10000]

2

3fig, axes = plt.subplots(nrows=2, ncols=2, figsize=(12, 10), sharex=True, sharey=True)

4axes = axes.ravel()

5

6axes[0].plot(x_axis, mixture_pdf, label="Mixture PDF")

7axes[0].set_title("Mixture PDF")

8

9for i, n in enumerate(sample_sizes, start=1):

10 samples = generate_x(prior, mu, sigma, num_samples=n, num_gaussians=len(prior))

11 axes[i].hist(samples, bins=30, density=True, alpha=0.5, label=f"n = {n}")

12 axes[i].plot(x_axis, mixture_pdf, label="Mixture PDF")

13 axes[i].set_title(f"Generated Samples (n = {n})")

14

15for ax in axes:

16 ax.set_xlabel("X-axis")

17 ax.set_ylabel("Density")

18 ax.legend()

19

20plt.tight_layout()

21plt.show()

In this code, we’re using a Gaussian Mixture Model (GMM) to generate and visualize samples from a mixture of two Gaussian distributions. The purpose of the visualization is to demonstrate how the GMM can approximate the true underlying distribution as the number of samples increases.

Here’s a step-by-step explanation of the code:

We define sample_sizes as a list containing the number of samples to generate for each subplot (100, 500, and 10000).

We create a 2x2 grid of subplots with shared x and y axes, with a figure size of 12x10.

We plot the mixture PDF on the first subplot (axes[0]). This represents the true underlying distribution that we are trying to approximate with our GMM.

We iterate over the sample_sizes list, and for each sample size, we use the generate_x function to generate samples from the GMM. The generate_x function takes the prior probabilities, the means and standard deviations of the Gaussians, the number of samples, and the number of Gaussians as input arguments.

For each sample size, we plot a histogram of the generated samples on the corresponding subplot. We normalize the histogram to match the density of the true underlying distribution. We also plot the mixture PDF on the same subplot to compare the generated samples with the true distribution.

We set the titles, x-axis labels, and y-axis labels for all subplots, and add a legend to each subplot.

We use plt.tight_layout() to adjust the spacing between subplots, and finally display the figure using plt.show().

In the context of GMM, this code demonstrates how the GMM can be used to generate samples from a mixture of Gaussian distributions. The generated samples are visualized as histograms, which are compared to the true underlying distribution (the mixture PDF) to show how well the GMM approximates the true distribution. As the number of samples increases, the histograms of the generated samples become closer to the mixture PDF, indicating that the GMM is effectively approximating the true distribution.

Note carefully again that this is under the assumption that we already know the parameters of the mixture model, which is not the case in machine learning. In machine learning, we are interested in the “inference” side, which is to infer the parameters of the mixture model from the dataset \(\mathcal{S}\)!

Inference Story#

Now let’s flip the table and see how we can infer the parameters of the mixture model from the dataset \(\mathcal{S}\).

The code above does the following:

Import necessary libraries: NumPy, Matplotlib, and GaussianMixture from scikit-learn.

Generate a synthetic dataset with three clusters:

Set a random seed to ensure reproducibility.

Define the number of samples (500).

Create a dataset by concatenating samples from three normal distributions with different means (0, 5, and 10) and the same standard deviation (1). The dataset is reshaped into a 2D array.

Fit a Gaussian Mixture Model (GMM) to the data:

Instantiate a GaussianMixture object with three components and a fixed random state.

Fit the GMM to the dataset

X.

Plot the data and the Gaussian Mixture Model:

Create an array

x_plotof 1000 linearly spaced values between -5 and 15.Calculate the density of the GMM for each value in

x_plotusing thescore_samplesmethod.Plot a histogram of the dataset with 30 bins, normalized by the total area.

Plot the GMM density estimation using a red line.

Add labels for the x-axis, y-axis, and a title for the plot.

Display the plot using

plt.show().

A reminder, we know the true distribution because we defined them ourselves. In reality, we don’t know the true distribution, and we want to infer the parameters of the mixture model from the dataset \(\mathcal{S}\). The purpose of defining the true distribution is for pedagogical purposes, so that we can compare the true distribution with the estimated distribution from the GMM.

Show code cell source

1x_axis = np.arange(-5, 15, 0.001)

2prior = [0.4, 0.4, 0.2] # weights

3mu = [0, 5, 10]

4sigma = [1, 1, 1]

5

6gaussian_1 = norm.pdf(x_axis, loc=mu[0], scale=sigma[0])

7gaussian_2 = norm.pdf(x_axis, loc=mu[1], scale=sigma[1])

8gaussian_3 = norm.pdf(x_axis, loc=mu[2], scale=sigma[2])

9

10mixture_pdf = merge_gaussian_pdf(

11 prior, gaussian_1, gaussian_2, gaussian_3

12) # prior[0] * gaussian_1 + prior[1] * gaussian_2 + prior[2] * gaussian_3

Show code cell source

1num_samples = 10000

2samples = generate_x(

3 prior, mu, sigma, num_samples=num_samples, num_gaussians=len(prior)

4)

5X = np.array(samples).reshape(-1, 1)

6

7# Fit a Gaussian Mixture Model to the generated samples

8gmm = GaussianMixture(n_components=len(prior), random_state=1992)

9gmm.fit(X)

GaussianMixture(n_components=3, random_state=1992)In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook.

On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

GaussianMixture(n_components=3, random_state=1992)

Remarkable! The parameters inferred from our GMM model has the following:

Show code cell source

1print("Prior:", gmm.weights_)

2print("Mean:", gmm.means_.ravel())

3print("Std:", np.sqrt(gmm.covariances_.ravel()))

Prior: [0.39785786 0.20201207 0.40013007]

Mean: [-0.01573031 10.02728274 5.02481475]

Std: [1.01680719 0.97835049 0.99226471]

When rounded to the nearest integer/decimal, the parameters inferred from our GMM model has the following:

\(\boldsymbol{\pi} = \begin{bmatrix} \pi_1 & \pi_2 & \pi_3 \end{bmatrix} = \begin{bmatrix} 0.398, 0.4, 0.202 \end{bmatrix}\)

\(\boldsymbol{\mu} = \begin{bmatrix} \mu_1 & \mu_2 & \mu_3 \end{bmatrix} = \begin{bmatrix} 0.0, 5.0, 10.0 \end{bmatrix}\)

\(\boldsymbol{\Sigma} = \begin{bmatrix} \Sigma_1 & \Sigma_2 & \Sigma_3 \end{bmatrix} = \begin{bmatrix} 1.02, 0.99, 0.98 \end{bmatrix}\)

Almost spot on with the true parameters!!!

The plot shows promising results, which is not surprising since our estimated parameters are very close to the true parameters.

Show code cell source

1# Plot the data and the Gaussian Mixture Model

2x_plot = np.linspace(-5, 15, 1000).reshape(-1, 1)

3density = np.exp(

4 gmm.score_samples(x_plot)

5) # !!! gmm.score_samples(x_plot) returns the log-likelihood of the samples thus we need to take the exponential to get back raw probabilities.

6

7plt.hist(samples, bins=30, density=True, alpha=0.5, label="Generated Samples")

8plt.plot(x_plot, density, "-r", label="GMM Approximation")

9plt.plot(x_axis, mixture_pdf, "-g", label="True Mixture PDF")

10plt.xlabel("x")

11plt.ylabel("density")

12plt.title("Gaussian Mixture Model Approximation of Generated Samples")

13plt.legend()

14plt.show()

When you predict on the samples X, you get the cluster/component in which

each sample belongs to. In this case, the samples are generated from three

clusters, so the predicted labels are either 0, 1, or 2.

When you predict_proba on the samples X, you get the (log) probability

of each sample belonging to each cluster/component. In this case, the samples

are generated from three clusters, so the predicted probabilities are a 2D array

with shape (n_samples, n_components).

Then the highest probability is the cluster/component in which the sample belongs to, which is the predicted label.

Show code cell source

1gmm.predict(X), gmm.predict_proba(X)

(array([2, 2, 2, ..., 1, 2, 2]),

array([[1.26320804e-03, 2.57233727e-09, 9.98736789e-01],

[6.13952593e-06, 7.67030705e-07, 9.99993093e-01],

[3.20714893e-07, 1.82204807e-05, 9.99981459e-01],

...,

[1.19404179e-15, 9.92155660e-01, 7.84434023e-03],

[3.76091554e-07, 1.53545161e-05, 9.99984269e-01],

[1.08080724e-08, 6.99100159e-04, 9.99300889e-01]]))

Inference with 2D Data#

We can also visualize the inferred parameters of the mixture model in 2D.

Show code cell source

1# Generate a synthetic dataset with three clusters

2np.random.seed(42)

3n_samples = 500

4X1 = np.random.multivariate_normal([0, 0], [[1, 0], [0, 1]], int(0.4 * n_samples))

5X2 = np.random.multivariate_normal([5, 5], [[1, 0], [0, 1]], int(0.4 * n_samples))

6X3 = np.random.multivariate_normal([10, 10], [[1, 0], [0, 1]], int(0.2 * n_samples))

7X = np.vstack([X1, X2, X3])

8print(X.shape)

(500, 2)

Show code cell source

1# Fit a Gaussian Mixture Model to the data

2gmm = GaussianMixture(n_components=3, random_state=42)

3gmm.fit(X)

4

5print("Prior:")

6pprint(gmm.weights_)

7

8print("Mean:")

9pprint(gmm.means_)

10

11print("Covariance:")

12pprint(gmm.covariances_)

Prior:

array([0.40019011, 0.19999749, 0.3998124 ])

Mean:

array([[4.93719589e+00, 4.98357806e+00], │ [1.01268460e+01, 1.01296468e+01], │ [6.10397816e-03, 3.69680874e-02]])

Covariance:

array([[[ 1.02610394, -0.02077184], │ │ [-0.02077184, 1.00397773]], │ │ [[ 0.78282208, -0.04590306], │ │ [-0.04590306, 1.03591285]], │ │ [[ 0.91310488, -0.02686654], │ │ [-0.02686654, 0.92005989]]])

Show code cell source

1x = X[:, 0]

2y = X[:, 1]

3step = 0.01

4X_plot, Y_plot = make_meshgrid(x, y, step=step)

5print(X_plot.shape, Y_plot.shape)

6# Plot the data points and the Gaussian Mixture Model contours

7# x = np.linspace(-5, 15, 100)

8# y = np.linspace(-5, 15, 100)

9# X_plot, Y_plot = np.meshgrid(x, y)

10pos = np.empty(X_plot.shape + (2,))

11pos[:, :, 0] = X_plot

12pos[:, :, 1] = Y_plot

13print(pos.shape)

(1645, 1777) (1645, 1777)

(1645, 1777, 2)

Show code cell source

1fig, ax = plt.subplots()

2ax.scatter(X[:, 0], X[:, 1], alpha=0.5)

3

4# plot for each component a contour of the probability density function

5for mean, cov in zip(gmm.means_, gmm.covariances_):

6 rv = multivariate_normal(mean, cov)

7 ax.contour(X_plot, Y_plot, rv.pdf(pos), levels=10)

8

9plt.xlabel("x")

10plt.ylabel("y")

11plt.title("Gaussian Mixture Model Approximation of a Multimodal Distribution in 2D")

12plt.show()

Show code cell source

1# Generate a synthetic dataset with overlapping clusters

2np.random.seed(42)

3n_samples = 500

4X1 = np.random.multivariate_normal([0, 0], [[2, 0.5], [0.5, 2]], int(0.4 * n_samples))

5X2 = np.random.multivariate_normal([3, 3], [[1, -0.5], [-0.5, 1]], int(0.4 * n_samples))

6X3 = np.random.multivariate_normal(

7 [0, 5], [[1.5, 0.5], [0.5, 1.5]], int(0.2 * n_samples)

8)

9X = np.vstack([X1, X2, X3])

10

11# Fit a Gaussian Mixture Model to the data

12gmm = GaussianMixture(n_components=3, random_state=42)

13gmm.fit(X)

14

15print("Prior:")

16pprint(gmm.weights_)

17

18print("Mean:")

19pprint(gmm.means_)

20

21print("Covariance:")

22pprint(gmm.covariances_)

23

24

25# Plot the data points and the Gaussian Mixture Model contours

26x = X[:, 0]

27y = X[:, 1]

28step = 0.01

29X_plot, Y_plot = make_meshgrid(x, y, step=step)

30print(X_plot.shape, Y_plot.shape)

31# x = np.linspace(-6, 6, 100)

32# y = np.linspace(-6, 10, 100)

33# X_plot, Y_plot = np.meshgrid(x, y)

34

35pos = np.empty(X_plot.shape + (2,))

36pos[:, :, 0] = X_plot

37pos[:, :, 1] = Y_plot

38print(pos.shape)

39

40fig, ax = plt.subplots()

41ax.scatter(X[:, 0], X[:, 1], alpha=0.5)

42

43for mean, cov in zip(gmm.means_, gmm.covariances_):

44 rv = multivariate_normal(mean, cov)

45 ax.contour(X_plot, Y_plot, rv.pdf(pos), levels=10)

46

47plt.xlabel("x")

48plt.ylabel("y")

49plt.title("Gaussian Mixture Model Approximation of Overlapping Distributions in 2D")

50plt.show()

Prior:

array([0.39155155, 0.3990295 , 0.20941894])

Mean:

array([[-0.08107823, -0.0093926 ], │ [ 3.07416739, 2.85294415], │ [-0.17687862, 4.98805321]])

Covariance:

array([[[ 1.59221756, 0.38233697], │ │ [ 0.38233697, 1.86035845]], │ │ [[ 0.99917348, -0.37393606], │ │ [-0.37393606, 0.85648915]], │ │ [[ 1.38890481, 0.35019933], │ │ [ 0.35019933, 1.35042648]]])

(1348, 1176) (1348, 1176)

(1348, 1176, 2)

Prior is a Latent Variable#

The weights we have defined for each gaussian component are called the prior of the mixture model. This just means if we draw a sample \(\mathbf{x}\) from the mixture model, the probability of it belonging to the \(k\)-th component is \(\pi_k\).

In our case above, we have defined the prior as \(\boldsymbol{\pi} = \begin{bmatrix} 0.4, 0.4, 0.2 \end{bmatrix}\), which means if we draw a sample \(\mathbf{x}\) from the mixture model, the probability of it belonging to the 1st component is 0.4, the probability of it belonging to the 2nd component is 0.4, and the probability of it belonging to the 3rd component is 0.2.

The prior is a latent variable, which means it is not observed in the dataset \(\mathcal{S}\), but it is inferred from the dataset \(\mathcal{S}\). This may sound magical, but it actually is just the number of data points in each component, divided by the total number of data points.

Recall our samples consist of 10000 data points with \(3\) components. We defined the prior = [0.4, 0.4, 0.2], which means the number of data points in each component is \(4000\), \(4000\), and \(2000\) respectively.

This variable is unobserved, because we really do not know what it is when we were handed the dataset \(\mathcal{S}\).

Let’s see an example.

Problem Formulation#

Remark 31 (Notation Reminder)

Firstly, notation wise:

means the probability density function (PDF) of a multivariate normal distribution with mean \(\boldsymbol{\mu}\) and covariance \(\boldsymbol{\Sigma}\) evaluated at \(\boldsymbol{x}\). The \(\mid\) symbol means “given” but do not confuse with the conditional probability.

A Primer#

Given a set \(\mathcal{S}\) containing \(N\) data points:

where the vector \(\mathbf{x}^{(n)}\) is the \(n\)-th sample with \(D\) number of features, given by:

We can further write \(\mathcal{S}\) as a disjoint union of \(K\) sets, as follows:

where \(C_k\) is the set of data points that belong to cluster \(k\):

Furthermore, we denote \(z^{(n)}\) as the true cluster assignment of the \(n\)-th data point \(\mathbf{x}^{(n)}\).

However, in practice, we don’t have access to the true cluster assignments \(z^{(n)}\). Our goal is to estimate the cluster assignments \(z^{(n)}\) from the data points \(\mathbf{x}^{(n)}\).

In K-Means, we discussed Lloyd’s algorithm, a hard clustering method that outputs estimated cluster assignments \(\hat{y}^{(n)}\) for each data point \(\mathbf{x}^{(n)}\). Note do not be confused by the notation, here \(z\) is the true cluster assignment, while \(\hat{y}\) is the estimated cluster assignment. In K-Means however, I conveniently used \(y\) to denote the true cluster assignment, and \(\hat{y}\) to denote the estimated cluster assignment. We have an additional layer of complexity here, as we will be using \(z\) to denote the true cluster assignment, and \(y\) to denote the a-posteriori probability of the data point \(\mathbf{x}^{(n)}\) belonging to the \(k\)-th cluster \(C_{k}\).

As we have seen from the examples earlier, it is desirable to quantify the degree by which a data point belongs to a cluster. Soft clustering methods use a continues range, such as the closed interval \([0,1]\), of possible values for the degree of belonging. In contrast, hard clustering methods use only two possible values for the degree of belonging to a specific cluster, either “full belonging” or no “belonging at all”. While hard clustering methods assign a given data point to precisely one cluster, soft clustering methods typically assign a data point to several different clusters with non-zero degree of belonging [Jung, 2023].

Consequently, we can define for each data point \(\mathbf{x}^{(n)} \in \mathcal{S}\), an associated cluster assignment vector \(\widehat{\mathbf{y}}^{(n)}\) as follows:

where \(\hat{y}_k^{(n)}\) is the degree of belonging of the \(n\)-th data point \(\mathbf{x}^{(n)}\) to the \(k\)-th cluster \(C_k\). This is reminiscent of the your usual classification problem, where we have a set of \(K\) classes, and we want to assign a data point \(\mathbf{x}^{(n)}\) to one of the \(K\) classes.

In this case, we can think of \(\widehat{\mathbf{y}}^{(n)}\) as the posterior probability of the \(n\)-th data point \(\mathbf{x}^{(n)}\) belonging to the \(k\)-th cluster \(C_k\), or with our current setup, the posterior probability of the \(n\)-th data point \(\mathbf{x}^{(n)}\) given the cluster assignment \(z^{(n)} = k\).

Example 15 (Example)

Consider the following example:

then it can be interpreted as the degree of belonging of the first data point \(\mathbf{x}^{(1)}\) to each of the three clusters \(C_1, C_2, C_3\) respectively. In this example, the first data point \(\mathbf{x}^{(1)}\) has a \(10\%\) chance of belonging to cluster \(C_1\), a \(70\%\) chance of belonging to cluster \(C_2\), and a \(20\%\) chance of belonging to cluster \(C_3\). We can therefore say that the first data point \(\mathbf{x}^{(1)}\) is more likely to belong to cluster \(C_2\) than to cluster \(C_1\) or cluster \(C_3\).

However, to even find the posterior probability, we need to know what the distribution of \(\mathbb{P}\) is. In the next section, we will discuss the distribution of the posterior probability and how we can estimate it.

Gaussian Mixture Model#

A widely used soft clustering method is the Gaussian Mixture Model (GMM), which uses a probabilistic model for the data points \(\mathcal{S}=\left\{\mathbf{x}^{(n)}\right\}_{n=1}^N\).

A Gaussian mixture model is a density model where we combine a finite number of \(K\) Gaussian distributions \(\mathcal{N}\left(\boldsymbol{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k\right)\) so that

where we defined \(\boldsymbol{\theta}:=\left\{\boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k, \pi_k: k=1, \ldots, K\right\}\) as the collection of all parameters of the model. This convex combination of Gaussian distribution gives us significantly more flexibility for modeling complex densities than a simple Gaussian distribution (which we recover from \((\star \star)\) for \(K=1\)) [Deisenroth et al., 2021].

Overall, the main goal is to find the 3 parameters of the mixture model that best fit the data \(\mathcal{S}\).

To elaborate further the setup defined in (91), we have:

The probability distribution above means that the (joint) probability of observing a data point \(\boldsymbol{x}^{(n)}\) is the sum of the probability of observing \(\boldsymbol{x}^{(n)}\) from each of the \(K\) clusters, parametrized by the mean and covariance vector/matrix, weighted by the probability of the cluster assignment \(z^{(n)} = k\), parameterized by \(\pi_k\). This is a mouthful, we will break it down further in the sections below. Notation wise, we can write this as:

\[ \mathbb{P}\left(\boldsymbol{X} ; \boldsymbol{\theta}\right) := \mathbb{P}_{\boldsymbol{\theta}}\left(\boldsymbol{X}\right) = \mathbb{P}_{\left\{\boldsymbol{\pi}, \boldsymbol{\mu}, \boldsymbol{\Sigma}\right\}}\left(\boldsymbol{X}\right) \]The first constraint \((\star\star)\) ensures that the probability of observing a data point \(\boldsymbol{x}^{(n)}\) from any of the \(K\) clusters is \(1\). This is a normalization constraint, and is necessary to ensure that the probability distribution is a valid probability distribution.

The shape and dimensions for the parameters are given:

\(\boldsymbol{\pi}\) is a vector of mixing coefficients (prior weights):

\[ \boldsymbol{\pi} = \begin{bmatrix} \pi_1 & \pi_2 & \cdots & \pi_k \end{bmatrix}^{\mathrm{T}} \in \mathbb{R}^K \]\(\boldsymbol{\mu}_k\) is a vector of means for the \(k\)-th cluster:

\[ \boldsymbol{\mu}_k = \begin{bmatrix} \mu_{k1} & \mu_{k2} & \cdots & \mu_{kD} \end{bmatrix}^{\mathrm{T}} \in \mathbb{R}^D \]\(\boldsymbol{\Sigma}_k\) is a covariance matrix for the \(k\)-th cluster:

\[\begin{split} \boldsymbol{\Sigma}_k = \begin{bmatrix} \Sigma_{k11} & \Sigma_{k12} & \cdots & \Sigma_{k1D} \\ \Sigma_{k21} & \Sigma_{k22} & \cdots & \Sigma_{k2D} \\ \vdots & \vdots & \ddots & \vdots \\ \Sigma_{kD1} & \Sigma_{kD2} & \cdots & \Sigma_{kDD} \end{bmatrix} \in \mathbb{R}^{D \times D} \end{split}\]

The Perpectives#

There are two interpretations of the Gaussian mixture model: the latent variable perspective and the data likelihood perspective.

The Mixture Model Perspective#

Mixture model perspective: In this perspective, GMM is seen as a simple mixture of multiple Gaussian distributions. The goal is to model the probability density function (PDF) of the observed data as a weighted sum of the individual Gaussian PDFs. Each Gaussian component has its own mean and covariance matrix, and the model learns the weights, means, and covariances that best fit the data. This perspective focuses on the density estimation aspect of GMM and is less concerned with the underlying latent variables.

The Latent Variable Perspective#

Latent variable perspective: In this perspective, GMM is viewed as a generative probabilistic model that assumes there are some hidden (latent) variables responsible for generating the observed data points. Each hidden variable corresponds to one of the Gaussian components in the mixture. The data points are assumed to be generated by first sampling the latent variable (component) from a categorical distribution and then sampling the data point from the corresponding Gaussian distribution. This perspective is closely related to the Expectation-Maximization (EM) algorithm, which alternates between estimating the component assignments (latent variables) and updating the Gaussian parameters (mean, covariance) to maximize the likelihood of the observed data.

Summary#

Both perspectives ultimately lead to the same model, but they highlight different aspects of GMM and can be useful in different contexts. For example, the latent variable perspective is more suitable for clustering and classification tasks, while the mixture model perspective is more useful for density estimation and generating new samples from the modeled distribution.

In the next few sections, we will discuss the latent variable perspective, but note there may be some mix of the two perspectives in the following sections. For example, when we discuss about the posterior probability of the latent variables, we will also mention that it is the “responsibilities” in the mixture model perspective.

The Mixture Model Perspective#

In the previous section on, we have actually already defined the Mixture Model Perspective of the Gaussian Mixture Model.

The Gaussian Mixture Model#

To recap, a Gaussian mixture model is a density model where we combine a finite number of \(K\) Gaussian distributions \(\mathcal{N}\left(\boldsymbol{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k\right)\) so that

where we defined \(\boldsymbol{\theta}:=\left\{\boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k, \pi_k: k=1, \ldots, K\right\}\) as the collection of all parameters of the model. This convex combination of Gaussian distribution gives us significantly more flexibility for modeling complex densities than a simple Gaussian distribution (which we recover from \((\star \star)\) for \(K=1\)) [Deisenroth et al., 2021].

Overall, the main goal is to find the 3 parameters of the mixture model that best fit the data \(\mathcal{S}\).

The Responsibilities#

Another quantity that will play an important role is the conditional probability of \(\boldsymbol{z}\) given \(\boldsymbol{x}\). We shall denote this quantity as the responsibility of the \(k\)-th component for generating the data point \(\boldsymbol{x}\), and denote it as \(r^{(n)}_k\):

Therefore, mixture components have a high responsibility for a data point when the data point could be a plausible sample from that mixture component.

Note that \(\boldsymbol{r}^{(n)}\) is a \(K\) dimensional vector:

is a (normalized) probability vector, i.e., \(\sum_{k} r^{(n)}_{k}=1\) with \(r^{(n)}_{k} \geqslant 0\). This probability vector distributes probability mass among the \(K\) mixture components, and we can think of \(\boldsymbol{r}^{(n)}\) as a “soft assignment” of \(\boldsymbol{x}^{(n)}\) to the \(K\) mixture components. Therefore, the responsibility \(r^{(n)}_{k}\) from (93) represents the probability that \(\boldsymbol{x}^{(n)}\) has been generated by the \(k\) th mixture component.

The Latent Variable Perspective#

Notice that if we approach the GMM from the latent variable perspective, we are more interested in the probability of the latent variable \(\boldsymbol{z}^{(n)}\) given the data \(\boldsymbol{x}^{(n)}\), as we will see later.

Within this model, we assume the following.

The Generative Process#

Consider a mental model that there are \(K\) gaussian distributions, each representing a cluster. The data point \(\boldsymbol{x}^{(n)}\) is generated by first sampling the latent variable \(\boldsymbol{z}^{(n)}\) from a categorical distribution and then sampling the data point \(\boldsymbol{x}^{(n)}\) from the corresponding Gaussian distribution. This is the generative process of the GMM.

More concretely, the sampling process can be described below.

Algorithm 6 (GMM Sampling Process)

The construction of this latent-variable model (see the corresponding graphical model in Figure 11.9) lends itself to a very simple sampling procedure (generative process) to generate data:

Sample \(z^{(n)} \sim p(\boldsymbol{z})=\boldsymbol{\pi}\) where \(z^{(n)}\) is a discrete random variable with \(K\) possible values.

Sample \(\boldsymbol{x}^{(n)} \sim p\left(\boldsymbol{x} \mid z^{(n)}\right)\).

In the first step, we select a mixture component \(k\) at random according to \(p(\boldsymbol{z})=\boldsymbol{\pi}\); in the second step we draw a sample from the corresponding mixture component \(k\). When we discard the samples of the latent variable so that we are left with the \(\boldsymbol{x}^{(n)}\), we have valid samples from the GMM. This kind of sampling, where samples of random variables depend on samples from the variable’s parents in the graphical model, is called ancestral sampling [Deisenroth et al., 2021].

This generative process prompts a few questions:

How do we define the categorical distribution \(p(\boldsymbol{z})\) (i.e. the prior distribution of the latent variable \(\boldsymbol{z}\))?

How do we define the Gaussian distribution \(p\left(\boldsymbol{x} \mid z^{(n)}\right)\) (i.e. the conditional distribution of the data \(\boldsymbol{x}\) given the latent variable \(\boldsymbol{z}\), also known as the likelihood)?

How do we define the joint distribution \(p\left(\boldsymbol{x}, \boldsymbol{z}\right)\) (i.e. the joint distribution of the data \(\boldsymbol{x}\) and the latent variable \(\boldsymbol{z}\))?

How do we define the marginal distribution \(p\left(\boldsymbol{x}\right)\) (i.e. the marginal distribution of the data \(\boldsymbol{x}\))?

How do we define the posterior distribution \(p\left(\boldsymbol{z} \mid \boldsymbol{x}\right)\) (i.e. the posterior distribution of the latent variable \(\boldsymbol{z}\) given the data \(\boldsymbol{x}\))?

Assumption 1: The Distribution of the Data Point \(\boldsymbol{x}^{(n)}\) given the Latent Variable \(\boldsymbol{z}^{(n)}\)#

Consider a mental model that there are \(K\) gaussian distributions, each representing a cluster \(C_k\). The data point \(\boldsymbol{x}^{(n)}\) is generated by first sampling the latent variable \(\boldsymbol{z}^{(n)}\) from a categorical distribution and then sampling the data point \(\boldsymbol{x}^{(n)}\) from the corresponding Gaussian distribution. This is the generative process of the GMM.

We first start by defining the \(K\) clusters, each represented by a different (multivariate) gaussian distribution.

The Latent Clusters#

Each cluster \(C_k\) for \(k=1, \ldots, K\) is represented by a multivariate gaussian distribution:

and the geometry of the clusters are completely determined by their mean vectors \(\boldsymbol{\mu}_k\) and covariance matrices \(\boldsymbol{\Sigma}_k\).

This notation can be confusing because \(C_k\) is not really a random variable, instead it is a probability distribution. Consequently, the data points that \(C_k\)’s probability distribution generates are random variables, which we will define next.

The Data Points \(\boldsymbol{x}^{(n)}\) is the Likelihood#

Any data point \(\boldsymbol{x}\) can be generated by sampling from one of the \(K\) clusters:

which means the following:

where this distribution is parametrized by \(\boldsymbol{\mu}_k\) is the mean vector of the \(k\)-th cluster, and \(\boldsymbol{\Sigma}_k\) is the covariance matrix of the \(k\)-th cluster.

This formulation further allows us to interpret a specific data point \(\boldsymbol{x}^{(n)}\) as a realization drawn from the probability distribution (95) of a specific cluster \(C_k\).

We can represent the distribution defined in (95) more concisely as:

Notice the similarity between the expression (96) and the likelihood expression in a Naive Bayes model? Yes, indeed this expression is none other than the likelihood of observing the data point \(\boldsymbol{x}^{(n)}\) given the latent variable \(Z^{(n)} = k\).

The Likelihood of One Single Data Point \(\boldsymbol{x}^{(n)}\)#

Let \(x^{(n)}\) denote the \(n\)-th data point, with \(n = 1, \dots, N\).

Let \(z^{(n)}\) denote the latent variable corresponding to the \(n\)-th data point, representing the Gaussian component it belongs to. \(z^{(n)}\) can take on values \(1, \dots, K\), where \(K\) is the number of Gaussian components.

The likelihood of the \(n\)-th data point belonging to the \(k\)-th Gaussian component can be denoted as

\[ p(x^{(n)} | z^{(n)} = k ; \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \quad \text{for } k = 1, \dots, K \]Since each \(p(x^{(n)} | z^{(n)} = k)\) is parametrized by the mean and covariance vector/matrix, we can write the below without ambiguity:

\[ p(x^{(n)} | z^{(n)} = k) = \mathcal{N}(x^{(n)} | \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \]where \(\mathcal{N}\) is the multivariate Gaussian distribution.

Consequently, we obtain all \(K\) components of the likelihood vector:

\[\begin{split} \begin{aligned} \boldsymbol{L}^{(n)} &= \begin{bmatrix} p(x^{(n)} | z^{(n)} = 1) \\ p(x^{(n)} | z^{(n)} = 2) \\ \vdots \\ p(x^{(n)} | z^{(n)} = K) \end{bmatrix}_{K \times 1} \\ &= \begin{bmatrix} \mathcal{N}(x^{(n)} | \boldsymbol{\mu}_1, \boldsymbol{\Sigma}_1) \\ \mathcal{N}(x^{(n)} | \boldsymbol{\mu}_2, \boldsymbol{\Sigma}_2) \\ \vdots \\ \mathcal{N}(x^{(n)} | \boldsymbol{\mu}_K, \boldsymbol{\Sigma}_K) \end{bmatrix}_{K \times 1} \\ &= \begin{bmatrix} L_1^{(n)} \\ L_2^{(n)} \\ \vdots \\ L_K^{(n)} \end{bmatrix}_{K \times 1} \end{aligned} \end{split}\]and all elements sum to 1, fully representing the likelihood of the \(n\)-th data point belonging to each of the \(K\) Gaussian components.

The Likelihood of the Entire Dataset \(\boldsymbol{X}\)#

We are only talking about the likelihood of a single data point \(\boldsymbol{x}^{(n)}\) belonging to a specific cluster \(C_k\) (\(z^{(n)} = k\)). We will now discuss how to compute the likelihood of the entire dataset \(\boldsymbol{X}\) belonging to a specific cluster \(C_k\).

Given \(\mathcal{S} = \left\{ \boldsymbol{x}^{(1)}, \boldsymbol{x}^{(2)}, \ldots, \boldsymbol{x}^{(N)} \right\}\), the likelihood of the entire dataset \(\boldsymbol{X}\) belonging to a specific cluster \(C_k\) can be written as:

Assumption 2: The Latent Variable \(\boldsymbol{z}\)#

We have discussed about the likelihood of a single data point \(\boldsymbol{x}^{(n)}\) belonging to a specific cluster \(C_k\) (\(z^{(n)} = k\)). The next logical question is to ask: what is the probability distribution of \(\boldsymbol{z}\)?

Similar to the feature vectors \(\boldsymbol{x}^{(n)}\), the cluster assignment \(z^{(n)}\) can also be interpreted as realization drawn from a latent discrete random variable \(Z\).

The Prior Distribution of \(\boldsymbol{z}\)#

In contrast to the feature vectors \(\boldsymbol{x}^{(n)}\), we do not observe (know) the true cluster indices \(z^{(n)}\). After all, the goal of soft clustering is to estimate the cluster indices \(z^{(n)}\). We obtain a soft clustering method by estimating the cluster indices \(z^{(n)}\) based solely on the data points in \(\mathcal{S}\). To compute these estimates we assume that the (true) cluster indices \(z^{(n)}\) are realizations of iid RVs with the common probability distribution (or probability mass function):

where \(\boldsymbol{\pi} = \begin{bmatrix} \pi_1 & \pi_2 & \ldots & \pi_K \end{bmatrix}^{\mathrm{T}}\) is a \(K\)-dimensional vector of probabilities. It is also common to denote the prior distribution as a one-hot vector.

As mentioned in the previous step, one will soon realize that this is the prior in a Bayes model. With this, we have answered the question of what the probability distribution of \(Z\) is.

The (prior) probabilities are either assumed known or estimated from data. The choice for the probabilities \(\pi_k\) could reflect some prior knowledge about different sizes of the clusters. For example, if cluster \(C_1\) is known to be larger than cluster \(C_2\), we might choose the prior probabilities such that \(\pi_1 > \pi_2\) [Jung, 2023].

The Categorical Distribution#

Let’s now discuss the probability distribution of the latent variable \(Z\).

Definition 53 (Categorical Distribution)

Let \(Z\) be a discrete random variable with \(K\) number of states. Then \(Z\) follows a categorical distribution with parameters \(\boldsymbol{\pi}\) if

Consequently, the PMF of the categorical distribution is defined more compactly as,

where \(I\{Z = k\}\) is the indicator function that is equal to 1 if \(Z = k\) and 0 otherwise.

More often, we use the one-hot encoding to represent the categorical distribution. The one-hot encoding is a vector of size \(K\) where all elements are 0 except for the \(k\)-th element which is 1. For example, if \(K = 3\), the one-hot encoding of \(k = 2\) is \(\mathbf{y} = \begin{bmatrix} 0 & 1 & 0 \end{bmatrix}^{\mathrm{T}}\).

Definition 54 (Categorical (Multinomial) Distribution)

This formulation is adopted by Bishop’s[Bishop, 2007], the categorical distribution is defined as

where

is an one-hot encoded vector of size \(K\),

The \(z_k\) is the \(k\)-th element of \(\mathbf{z}\), and is equal to 1 if \(Y = k\) and 0 otherwise. The \(\pi_k\) is the \(k\)-th element of \(\boldsymbol{\pi}\), and is the probability of \(\mathbf{Z} = k\).

This notation alongside with the indicator notation in the previous definition allows us to manipulate the likelihood function in a more compact way.

Prior Distribution of the Entire Dataset \(\mathcal{S}\)#

The prior distribution \(\boldsymbol{\pi}\) is shared by all the data points in \(\mathcal{S}\).

Let \(z^{(n)}\) denote the latent variable corresponding to the \(n\)-th data point, representing the Gaussian component it belongs to. \(z^{(n)}\) can take on values \(1, \dots, K\), where \(K\) is the number of Gaussian components.

The prior probability of the \(k\)-th Gaussian component can be denoted as \(P(Z^{(n)} = k)\), for \(k = 1, \dots, K\).

These probabilities can be represented as a vector

\[\begin{split} \boldsymbol{\pi} = \begin{bmatrix} p(z^{(n)} = 1) \\ p(z^{(n)} = 2) \\ \vdots \\ p(z^{(n)} = K) \end{bmatrix}_{K \times 1} = \begin{bmatrix} \pi_1 \\ \pi_2 \\ \vdots \\ \pi_K \end{bmatrix}_{K \times 1} \end{split}\]The sum of all prior probabilities should be equal to 1, as they represent probabilities: \(\sum_{k=1}^K p(z^{(n)} = k) = 1\).

In the context of GMM, the prior probabilities can be interpreted as the probability that a randomly chosen sample belongs to the \(k\)-th Gaussian component.

Note that this prior is a global one shared across all data points. In other words, the prior probability of a data point belonging to a Gaussian component is the same as the prior probability of any other data point belonging to the same Gaussian component.

Assumption 3: The Joint Distribution of \(\boldsymbol{x}^{(n)}\) and \(\boldsymbol{z}^{(n)}\)#

So far, what have we gotten? We have defined two distributions, one is the likelihood of observation \(\boldsymbol{x}^{(n)}\) given the cluster assignment \(z^{(n)}\) and the other is the prior distribution of the cluster assignment \(z^{(n)}\).

Now, recall that when the likelihood and prior are multiplied together, we obtain the joint distribution of the data and the cluster assignment, as follows:

which is equivalent to the following:

where

\(\boldsymbol{X}^{(n)} = \boldsymbol{x}^{(n)} \mid Z^{(n)} = k \sim \mathcal{N}(\boldsymbol{x} ; \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k) \) is the probability distribution of the data points \(\boldsymbol{x}^{(n)}\) given the cluster assignment \(z^{(n)}=k\). This is also known as the mixture component.

\(Z = k \sim \text{Cat}(\boldsymbol{\pi})\) is the probability distribution of the cluster assignment \(z^{(n)}=k\), also known as the mixing coefficient \(\pi_k\).

Why is the Joint Distribution the Product of the Likelihood and Prior?#

One question that might come to mind is why the joint distribution is the product of the likelihood and prior. The answer is that the joint distribution is the product of the likelihood and prior because of the chain rule.

Let’s just state the base case to see why.

By Bayes’ rule, we have:

and if we set \(A = x^{(n)}\) and \(B = z^{(n)} = k\), then we have:

and \(\cap\) is the intersection symbol, so we have the joint probability of the data point \(x^{(n)}\) and the latent label \(z^{(n)} = k\).

Weighted Likelihood#

Recall that we defined the prior, \(\boldsymbol{\pi}\), as the probability of a data point belonging to a Gaussian component, and the likelihood as the probability of a data point given the Gaussian component it belongs to, \(\mathcal{N}(\boldsymbol{x} ; \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)\).

Then we have just seen that the joint distribution of the data and the cluster assignment is the product of the prior and the likelihood. This is also known as the weighted likelihood.

The weighted likelihood is the joint probability of the data point \(x^{(n)}\) and the latent label \(z^{(n)} = k\). Basically it answers the question: “What is the probability of observing the data point \(x^{(n)}\) and the latent label \(z^{(n)} = k\)?”

To see why, first consider how we define the weighted likelihood:

where \(P(x^{(n)} | z^{(n)} = k)\) is the likelihood of the \(n\)-th data point belonging to the \(k\)-th Gaussian component, and \(P(z^{(n)} = k)\) is the prior probability of the \(k\)-th Gaussian component.

First, some intuition, it is called weighted because the likelihood is weighted by the prior probability of the latent label \(z^{(n)} = k\). In other words, if we have likelihoods \(P(x^{(n)} | z^{(n)} = 2)\) for \(k=2\) to be say \(0.2\) and the prior probability of \(z^{(n)} = 2\) to be \(0.9\), then the weighted likelihood is \(0.2 \times 0.9 = 0.18\) because we have super high confidence that the data point \(x^{(n)}\) belongs to the \(k=2\) Gaussian component. However, if the prior probability of \(z^{(n)} = 2\) is \(0.1\), then the weighted likelihood is \(0.2 \times 0.1 = 0.02\) because we have low confidence that the data point \(x^{(n)}\) belongs to the \(k=2\) Gaussian component so the “likelihood” got weighed down by the low prior probability.

Weighted Likelihood of One Single Data Point \(\boldsymbol{x}^{(n)}\)#

The weighted likelihood of a single data point \(\boldsymbol{x}^{(n)}\) is obtained by multiplying the likelihood of the data point belonging to each Gaussian component by the corresponding mixing coefficient (weight) of that component. Let \(\boldsymbol{\pi}\) be the vector of mixing coefficients, with \(\pi_k\) representing the weight of the \(k\)-th Gaussian component. Then, the weighted likelihood of the data point \(\boldsymbol{x}^{(n)}\) can be written as:

Here, \(\boldsymbol{W}^{(n)}\) is the vector of weighted likelihoods of the data point \(\boldsymbol{x}^{(n)}\) belonging to each of the \(K\) Gaussian components, and \(W_k^{(n)}\) represents the weighted likelihood of the data point \(\boldsymbol{x}^{(n)}\) belonging to the \(k\)-th Gaussian component.

Weighted Likelihood of the Entire Dataset \(\boldsymbol{X}\)#

To compute the weighted likelihood of the entire dataset \(\boldsymbol{X}\), we need to calculate the weighted likelihood for each data point \(\boldsymbol{x}^{(n)}\) and then combine them. For this purpose, we can represent the weighted likelihood of the entire dataset as a matrix \(\boldsymbol{W}\) of size \(N \times K\), where \(N\) is the number of data points and \(K\) is the number of Gaussian components:

Each row of the matrix \(\boldsymbol{W}\) corresponds to the weighted likelihood vector \(\boldsymbol{W}^{(n)}\) for a data point \(\boldsymbol{x}^{(n)}\). To obtain the weighted likelihood of the entire dataset, we can either sum or compute the product of all elements in the matrix \(\boldsymbol{W}\), depending on the desired objective (e.g., maximizing the log-likelihood).

Now the returned is a matrix of shape \((N, K)\), where \(N\) is the number of data points and \(K\) is the number of Gaussian components. The \(n\)-th row and \(k\)-th column element is the weighted likelihood of the \(n\)-th data point belonging to the \(k\)-th Gaussian component.

In code, we need to separate the weighted likelihood matrix \(\boldsymbol{W}\) into two matrices, as follows:

Mixing coefficients matrix, \(\boldsymbol{\Pi}\), of shape \((N \times K)\), where each row contains the mixing coefficients \(\boldsymbol{\pi}\) repeated for each data point:

Likelihood matrix, \(\boldsymbol{L}\), of shape \((N \times K)\), where each element \((i, j)\) represents the likelihood of the \(i\)-th data point belonging to the \(j\)-th Gaussian component:

Now, you can obtain the weighted likelihood matrix \(\boldsymbol{W}\) by performing element-wise multiplication (Hadamard product) of the mixing coefficients matrix \(\boldsymbol{\Pi}\) and the likelihood matrix \(\boldsymbol{L}\):

Joint Distribution Fully Determines the Model#

With the joint distribution defined, the model is fully determined. Why do we say so? Because the joint distribution of the data point \(\boldsymbol{x}^{(n)}\) and the latent variable \(z^{(n)}\) is fully determined by the parameters \(\boldsymbol{\theta}\), which are the model parameters.

Consequently, if we want to find the marginal, we need to integrate out the latent variable \(z^{(n)}\) from the joint distribution. Then subsequently, we can also find the posterior distribution of the latent variable \(z^{(n)}\) by using Bayes’ rule. Therefore, when we say the joint distribution fully determines the model, what is really means is that we have all the necessary tools to find anything related to the random variables \(z^{(n)}\) and \(\boldsymbol{x}^{(n)}\).

The Gaussian Mixture Model and the Marginal Distribution#

The Gaussian Mixture Model#

Recall that we defined our Gaussian Mixture Model as a linear combination of \(K\) multivariate Gaussian distributions:

We now claim that the mixture of \(K\) multivariate Gaussian distributions is a valid distribution, and it is none other than the marginal distribution of \(\boldsymbol{X}^{(n)}\).

The Marginal Distribution#

We go back to fundamentals and ask what is the marginal distribution of a random variable \(X\)?

In our setting, it is the probability of the data point \(x^{(n)}\). Basically it answers the question: “What is the probability of observing the data point \(x^{(n)}\)?”

Since \(\boldsymbol{x}^{(n)}\) is a \(D\)-dimensional vector, we can think of it as a point in a \(D\)-dimensional space. The marginal distribution is the probability of observing this point in this space. Since it is in high dimensions usually, \(\boldsymbol{x}^{(n)}\) is usually a point in a high-dimensional space, and hence follow a multi-variate distribution.

The marginal distribution of the data points \(\mathbf{x}^{(n)}\):

where this is the actual Gaussian Mixture Model that we are trying to fit to the data.

One question is how do we get this marginal distribution? We can get it by marginalizing out the latent variable \(z^{(n)}\) from the joint distribution. And what does it mean by “marginalizing out” the latent variable \(z^{(n)}\)? This concept is tied to the concept of conditional probability and the law of total probability.

Marginalizing Out the Latent Variable#

Recall marginal distribution is none other than the denominator of the posterior distribution in Bayes’ rule:

and the denominator is called the marginal distribution. The expansion of the denominator as a summation of the numerator uses the law of total probability.

In other words, to marginalize out the latent variable \(Z\), we can simply sum over all possible values of \(Z\). As a result, we get a mixture of \(K\) multivariate Gaussian distributions, where each Gaussian distribution is defined by the parameters \(\boldsymbol{\mu}_k\) and \(\boldsymbol{\Sigma}_k\) and its corresponding mixing coefficient \(\pi_k\).

Thus, we have concluded in defining a systematic distribution in which our data points \(\mathbf{x}^{(n)}\) come from. This is the Gaussian Mixture Model.

But more is to come, because estimating the parameters is not simple, there is no closed-form solution to the problem. And if you look closely enough, the marginal distribution depends on both the parameters \(\boldsymbol{\mu}\), \(\boldsymbol{\Sigma}\) and \(\boldsymbol{\pi}\). However, unlike our classification problem with true labels \(y\), we do not have access to the true labels \(z\) in this case. We only have access to the data points \(\mathbf{x}\). This is a problem because we can no longer “estimate” the empirical distribution of \(z\) by simply counting the number of occurrences of each \(z\) in the dataset. But hope is not lose as we can make use of the expectation-maximization (EM) algorithm to solve this problem.

Marginal of One Single Data Point \(\boldsymbol{x}^{(n)}\)#

The marginal of a single data point \(\boldsymbol{x}^{(n)}\) is obtained by summing the weighted likelihoods of the data point belonging to each Gaussian component. Mathematically, it can be written as:

Marginal of the Entire Dataset \(\boldsymbol{X}\)#

The marginal of the entire dataset \(\boldsymbol{X}\) is collated as follows:

The Posterior Distribution#

Now we can answer the posterior distribution of the cluster assignment \(z^{(n)}\) given the data points \(\mathbf{x}^{(n)}\).

This is the degree of belonging is none other than the posterior distribution!

Posterior of One Single Data Point \(\boldsymbol{x}^{(n)}\)#

Using the posterior distribution equation (99), we can calculate the posterior probability of a single data point \(\boldsymbol{x}^{(n)}\) belonging to each of the \(K\) Gaussian components. The result is a vector of size \(K \times 1\), where the \(k\)-th element represents the posterior probability of the data point \(\boldsymbol{x}^{(n)}\) belonging to the \(k\)-th Gaussian component:

Here, \(\boldsymbol{R}^{(n)}\) is the posterior probability vector for the data point \(\boldsymbol{x}^{(n)}\).

There is a reason we use \(\boldsymbol{R}\) instead of say \(\boldsymbol{P}\) for shorthand representation of the posterior probability vector. The reason is that \(\boldsymbol{R}\) is also known as the responsibility of the \(k\)-th Gaussian component for the data point \(\boldsymbol{x}^{(n)}\), which we will see later.

Posterior of the Entire Dataset \(\boldsymbol{X}\)#

To compute the posterior probability of the entire dataset \(\boldsymbol{X}\), we need to calculate the posterior probability for each data point \(\boldsymbol{x}^{(n)}\) and then combine them. For this purpose, we can represent the posterior probability of the entire dataset as a matrix \(\boldsymbol{R}\) of size \(N \times K\), where \(N\) is the number of data points and \(K\) is the number of Gaussian components:

Each row of the matrix \(\boldsymbol{P}\) corresponds to the posterior probability vector \(\boldsymbol{P}^{(n)}\) for a data point \(\boldsymbol{x}^{(n)}\). The posterior probability of the entire dataset can be used to assess the overall clustering quality, assign data points to the most probable cluster, or update the model parameters in an iterative manner (e.g., using the Expectation-Maximization algorithm).

Parameter Estimation (Mixture Model Perspective)#

Assume we are given a dataset \(\mathcal{S}\)

where each data point \(\boldsymbol{x}_{n}\) are drawn i.i.d. from an unknown distribution \(\mathcal{D}\) defined as:

but since \(\mathcal{Z}\) is treated as a latent variable, we only have information to:

Our objective is to find a good approximation/representation of this unknown distribution \(\mathbb{P}_{\left\{\boldsymbol{\pi}, \boldsymbol{\mu}, \boldsymbol{\Sigma}\right\}}\left(\mathcal{X} \right)\) by means of a GMM with \(K\) mixture components. The parameters of the GMM are the \(K\) means \(\boldsymbol{\mu}_{k}\), the covariances \(\boldsymbol{\Sigma}_{k}\), and mixture weights \(\pi_{k}\). We summarize all these free parameters in the symbol \(\boldsymbol{\theta}\):

See the section here for more information.

The Vectorized Parameters#

However, to facilitate the notation, we will use the following vectorized representation of the parameters:

The Mixture Weights \(\boldsymbol{\pi}\)#

\(\boldsymbol{\pi}\) is a \(K\)-dimensional vector of mixture weights:

and can be broadcasted to

for Hamadard product with \(\boldsymbol{R}\).

The Means \(\boldsymbol{\mu}\)#

These are the means of the Gaussian components in the Gaussian Mixture Model.

Let

be the mean vectors of the Gaussian components, with

being a column vector representing the mean of the \(k\)-th Gaussian component and \(D\) being the number of features.

Thus collating all \(K\) mean vectors \(\boldsymbol{\mu}_1, \boldsymbol{\mu}_2, \dots, \boldsymbol{\mu}_K\) into a matrix \(M\) of dimensions \((K, D)\), we have

The Covariances \(\boldsymbol{\Sigma}\)#

self.covariances_: These are the covariance matrices of the Gaussian components in the Gaussian Mixture Model. In the context of GMM, self.covariances_ is a 3D array of shape (num_components, num_features, num_features), where each “slice” along the first axis represents the covariance matrix of the corresponding Gaussian component. Let

be the covariance matrices of the Gaussian components, with each \(\boldsymbol{\Sigma}_k\) being a symmetric positive-definite matrix of dimensions \((D, D)\):

The self.covariances_ array can be represented as a tensor \(\boldsymbol{C}\) with dimensions \((K, D, D)\), where the \(k\)-th “slice” is the covariance matrix \(\boldsymbol{\Sigma}_k\).

Likelihood and Log-Likelihood of Marginal Distribution#

As with any probabilistic model that requires parameter estimation, we need to define a likelihood function for the dataset \(\mathcal{S}\).

In the following, we detail how to obtain a maximum likelihood estimate \(\widehat{\boldsymbol{\theta}}\) of the model parameters \(\boldsymbol{\theta}\). We start by writing down the likelihood of the marginal likelihood of the observing the data, i.e., the predictive distribution of the training data given the parameters. We exploit our i.i.d. assumption, which leads to the factorized likelihood

where every individual likelihood term \(p\left(\boldsymbol{x}^{(n)} \mid \boldsymbol{\theta}\right)\) is a Gaussian mixture density.

Then we obtain the log-likelihood as

We will abbreviate the log-likelihood as \(\mathcal{L}\left(\boldsymbol{\theta} \mid \mathcal{S} = \left\{\boldsymbol{x}^{(1)}, \ldots, \boldsymbol{x}^{(n)}\right\}\right)\) when the context is clear.

No Closed-Form Solution#

We aim to find parameters \(\widehat{\boldsymbol{\theta}}^{*}\) that maximize the log-likelihood \(\mathcal{L}\) defined in (101). Our “normal” procedure would be to compute the gradient \(\mathrm{d} \mathcal{L} / \mathrm{d} \boldsymbol{\theta}\) of the \(\log\)-likelihood with respect to the model parameters \(\boldsymbol{\theta}\), set it to \(\mathbf{0}\), and solve for \(\boldsymbol{\theta}\). However, unlike our previous examples for maximum likelihood estimation (e.g., when we discussed linear regression), we cannot obtain a closed-form solution. However, we can exploit an iterative scheme to find good model parameters \(\widehat{\boldsymbol{\theta}}\), which will turn out to be the EM algorithm for GMMs. The key idea is to update one model parameter at a time while keeping the others fixed [Deisenroth et al., 2021].

Remark 32 (Remark: Closed-Form Solution for Single Gaussian)

If we were to consider a single Gaussian as the desired density, the sum over \(k\) in (101) vanishes, and the log can be applied directly to the Gaussian component, such that we get

This simple form allows us to find closed-form maximum likelihood estimates of \(\boldsymbol{\mu}\) and \(\boldsymbol{\Sigma}\), as discussed in the chapter on maximum likelihood estimation. In (101), we cannot move the log into the sum over \(k\) so that we cannot obtain a simple closed-form maximum likelihood solution [Deisenroth et al., 2021].

Overall, what this means is that we can obtain a closed-form solution for the parameters of a single Gaussian, but not for a mixture of Gaussians.

To find out more why this constitutes a problem, one can read section 9.2.1 in Bishop, Christopher M.’s book “Pattern Recognition and Machine Learning”.

Parameter Estimation (The Necessary Conditions)#

Even though there is no closed form solution, we can still use iterative gradient-based optimization to find good model parameters \(\widehat{\boldsymbol{\theta}}\). Consequently, Any local optimum of a function exhibits the property that its gradient with respect to the parameters must vanish (necessary condition).

In our case, we obtain the following necessary conditions when we optimize the log-likelihood in (101) with respect to the GMM parameters \(\boldsymbol{\mu}_{k}, \boldsymbol{\Sigma}_{k}, \pi_{k}\) :

In matrix/vector form, we have

the derivative of the log-likelihood with respect to the mean parameters \(\boldsymbol{\mu}_1, \boldsymbol{\mu}_2 \ldots, \boldsymbol{\mu}_K\) is

the derivative of the log-likelihood with respect to the covariance parameters \(\boldsymbol{\Sigma}_1, \boldsymbol{\Sigma}_2 \ldots, \boldsymbol{\Sigma}_K\) is

the derivative of the log-likelihood with respect to the mixing coefficients \(\pi_1, \pi_2 \ldots, \pi_K\) is

The Chain Rule (Matrix Calculus)#

See section 5.2.2. Chain Rule of Mathematics for Machine Learning, written by Deisenroth, Marc Peter, Faisal, A. Aldo and Ong, Cheng Soon.

For all three necessary conditions, by applying the chain rule, we require partial derivatives of the form

where \(\boldsymbol{\theta}=\left\{\boldsymbol{\mu}_{k}, \boldsymbol{\Sigma}_{k}, \pi_{k}, k=1, \ldots, K\right\}\) are the model parameters and

Running Example#

We will use the following running example to illustrate the GMM. This example is from [Deisenroth et al., 2021].

Example 16 (Running Example (Initialization))

We consider a one-dimensional dataset \(\mathcal{S}=\{-3,-2.5,-1,0,2,4,5\}\) consisting of seven data points and wish to find a GMM with \(K=3\) components that models the density of the data. We initialize the mixture components as

and assign them equal weights \(\pi_{1}=\pi_{2}=\pi_{3}=\frac{1}{3}\). The corresponding model (and the data points) are shown below.

Note we have not yet explained why are we “randomly” initializing the mixture components and the mixture weights. This is part of the EM algorithm and will be explained in the next sections.

Show code cell source

1import numpy as np

2import matplotlib.pyplot as plt

3from scipy.stats import norm

4

5def create_gmm(mus, sigmas, pis, x_range):

6 pdfs = [pi * norm.pdf(x_range, mu, sigma) for pi, mu, sigma in zip(pis, mus, sigmas)]

7 gmm_pdf = np.sum(pdfs, axis=0)

8 return pdfs, gmm_pdf

9

10def plot_gmm(data, mus, sigmas, pis, x_range, pdfs, gmm_pdf, title, ax=None):

11 ax = ax or plt.gca()

12 ax.scatter(data, np.zeros_like(data), marker='o', color='k', label='Data points')

13 for k, pdf in enumerate(pdfs, start=1):

14 ax.plot(x_range, pdf, label=f'$\mathcal{{N}}(x \mid {mus[k-1]}, {sigmas[k-1]})$')

15 ax.plot(x_range, gmm_pdf, label='GMM', linestyle='--', color='black')

16 ax.set_xlabel('x')

17 ax.set_ylabel('Density')

18 ax.legend()

19 ax.set_title(title)

20

21data = np.array([-3, -2.5, -1, 0, 2, 4, 5])

22K = 3

23

24# Initialize the mixture components

25mus = np.array([-4, 0, 8])

26sigmas = np.array([1, 0.2, 3])

27pis = np.array([1/3, 1/3, 1/3])

28

29x_range = np.linspace(-7.5, 15, 1000)

30pdfs, gmm_pdf = create_gmm(mus, sigmas, pis, x_range)

31plot_gmm(data, mus, sigmas, pis, x_range, pdfs, gmm_pdf, 'Initial Gaussian Mixture Model and Data Points')

2024-12-17 09:43:08,606 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,613 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,619 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,660 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,666 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,673 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,740 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,745 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,750 - INFO - Substituting symbol N from STIXNonUnicode

Next, we can calculate the responsibilities \(r^{(n)}_{k}\) for each data point \(x^{(n)}\) and each mixture component \(k\).

For our example from Example 16, we compute the responsibilities \(r^{(n)}_{k}\)

Here the \(n\)th row tells us the responsibilities of all mixture components for \(x^{(n)}\). The sum of all \(K\) responsibilities for a data point (sum of every row) is 1 . The \(k\) th column gives us an overview of the responsibility of the \(k\) th mixture component. We can see that the third mixture component (third column) is not responsible for any of the first four data points, but takes much responsibility of the remaining data points. The sum of all entries of a column gives us the values \(N_{k}\), i.e., the total responsibility of the \(k\) th mixture component. In our example, we get \(N_{1}=2.058, N_{2}=\) \(2.008, N_{3}=2.934\) [Deisenroth et al., 2021].

Estimating the Mean Parameters \(\boldsymbol{\mu}_k\)#

Theorem 11 (Update of the GMM Means)

The update of the mean parameters \(\boldsymbol{\mu}_{k}, k=1, \ldots, K\), of the \(G M M\) is given by

where

the responsibilities \(r^{(n)}_{k}\) are defined in (93), which is the probability that the \(k\) th mixture component generated the \(n\)-th data point.

\(N_{k}=\sum_{n=1}^{N} r^{(n)}_{k}\) can be interpreted the number of data points assigned to the \(k\) th mixture component.

Remark 33 (The update of the GMM Means depends on the responsibilities)

The update of the means \(\boldsymbol{\mu}_{k}\) of the individual mixture components in (104) depends on all means, covariance matrices \(\boldsymbol{\Sigma}_{k}\), and mixture weights \(\pi_{k}\) via \(r^{(n)}_{k}\) given in (93). Therefore, we cannot obtain a closed-form solution for all \(\boldsymbol{\mu}_{k}\) at once.

What this means is that in order to update the means, we need to first compute the responsibilities \(r^{(n)}_{k}\), but computing the responsibilities requires us to know the means \(\boldsymbol{\mu}_{k}\), which we want to update. This is a typical problem in iterative algorithms, which we will discuss in more detail in the next section.

Proof. The proof is taken from [Deisenroth et al., 2021].

From (102) we see that the gradient of the log-likelihood with respect to the mean parameters \(\boldsymbol{\mu}_{k}, k=1, \ldots, K\), requires us to compute the partial derivative

where we exploited that only the \(k\) th mixture component depends on \(\boldsymbol{\mu}_{k}\).

We use our result from (b) in (102) and put everything together so that the desired partial derivative of \(\mathcal{L}\) with respect to \(\boldsymbol{\mu}_{k}\) is given as

Here we used the identity from (103) and the result of the partial derivative in (b) to get to (d). The values \(r^{(n)}_{k}\) are the responsibilities we defined in (93).

We now solve (e) for \(\boldsymbol{\mu}_{k}^{\text {new }}\) so that \(\frac{\partial \mathcal{L}\left(\boldsymbol{\mu}_{k}^{\mathrm{new}}\right)}{\partial \boldsymbol{\mu}_{k}}=\mathbf{0}^{\top}\) and obtain

where we defined

as the total responsibility of the \(k\) th mixture component for the entire dataset. This concludes the proof of Theorem 11.

Some Intuition#

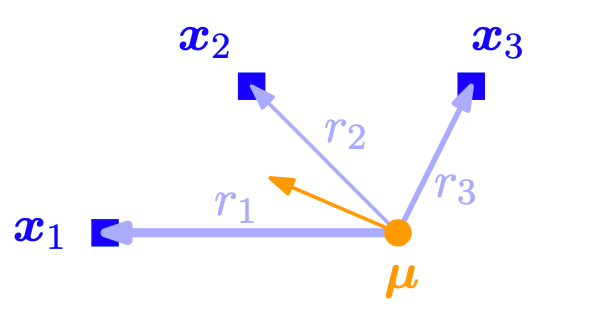

Intuitively, (104) can be interpreted as an importance-weighted Monte Carlo estimate of the mean, where the importance weights of data point \(\boldsymbol{x}^{(n)}\) are the responsibilities \(r^{(n)}_{k}\) of the \(k\) th cluster for \(\boldsymbol{x}^{(n)}, k=1, \ldots, K\). Therefore, the mean \(\boldsymbol{\mu}_{k}\) is pulled toward a data point \(\boldsymbol{x}^{(n)}\) with strength given by \(r^{(n)}_{k}\). The means are pulled stronger toward data points for which the corresponding mixture component has a high responsibility, i.e., a high likelihood. Figure Fig. 17 illustrates this.

Fig. 17 Update of the mean parameter of mixture component in a GMM. The mean \(\boldsymbol{\mu}\) is being pulled toward individual data points with the weights given by the corresponding responsibilities. Image Credit: [Deisenroth et al., 2021].#

We can also interpret the mean update in (104) as the expected value of all data points under the distribution given by

which is a normalized probability vector, i.e.,

Update Mean of Running Example#

Example 17 (Running Example: Update Mean)

In our example from Example 16, the mean values are updated as follows:

Here we see that the means of the first and third mixture component move toward the regime of the data, whereas the mean of the second component does not change so dramatically. Figure 11.3 illustrates this change, where Figure 11.3(a) shows the GMM density prior to updating the means and Figure 11.3(b) shows the GMM density after updating the mean values \(\mu_{k}\).

Show code cell source

1# Update the means

2mus_new = np.array([-2.7, -0.4, 3.7])

3

4# Create a new GMM with the updated means

5pdfs_new, gmm_pdf_new = create_gmm(mus_new, sigmas, pis, x_range)

6

7# Create the original GMM with the initial means

8pdfs_original, gmm_pdf_original = create_gmm(mus, sigmas, pis, x_range)

9

10fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(14, 5))

11

12# Plot the original GMM

13plot_gmm(data, mus, sigmas, pis, x_range, pdfs_original, gmm_pdf_original, 'Initial Gaussian Mixture Model and Data Points', ax1)

14

15# Plot the updated GMM

16plot_gmm(data, mus_new, sigmas, pis, x_range, pdfs_new, gmm_pdf_new, 'Updated Gaussian Mixture Model and Data Points with New Mean.', ax2)

17

18plt.show()

2024-12-17 09:43:08,838 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,844 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,850 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,899 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,905 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,911 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:08,999 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:09,005 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:09,010 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:09,059 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:09,065 - INFO - Substituting symbol N from STIXNonUnicode

2024-12-17 09:43:09,071 - INFO - Substituting symbol N from STIXNonUnicode

The update of the mean parameters in (104) look fairly straightforward. However, note that the responsibilities \(r^{(n)}_{k}\) are a function of \(\pi_{j}, \boldsymbol{\mu}_{j}, \boldsymbol{\Sigma}_{j}\) for all \(j=1, \ldots, K\), such that the updates in (104) depend on all parameters of the GMM, and a closed-form solution, which we obtained for linear regression, cannot be obtained.

Another important thing one needs to realize is that the update of the means’s right hand side’s \(N_k\) and \(r^{(n)}_{k}\) are all based on the previous iteration’s parameters (or current depending on how you term it). See code for concrete logical flow.

Estimating the Mean Parameters \(\boldsymbol{\mu}_{k}\) in Python#

We can estimate the mean parameters \(\boldsymbol{\mu}_{k}\) in Python using the following code snippet:

means = responsibilities.T @ X / nk[:, np.newaxis] # (K, D)

where

responsibilitiesis a \(N \times K\) matrix of responsibilities \(r^{(n)}_{k}\), andnkis a \(K\)-dimensional vector of \(N_{k}\) values.

Why? Because if you look at equation (104):

where the last equality leads is:

responsibilities[:, k].T @ X

so in order to find all \(K\) mean parameters \(\boldsymbol{\mu}_{k}\), we just need to repeat the above code snippet for all \(k=1, \ldots, K\):

where \(\boldsymbol{M}\) is a \(K \times D\) matrix of mean parameters \(\boldsymbol{\mu}_{k}\) and \(\boldsymbol{R}\) is a \(N \times K\) matrix of responsibilities \(r^{(n)}_{k}\).

So we update our code snippet to:

responsibilities.T @ X

and to divide by \(N_{k}\), we just need to broadcast the nk vector to the shape of the matrix obtained from the previous code snippet:

responsibilities.T @ X / nk[:, np.newaxis]

and we are done finding the new mean parameters \(\boldsymbol{\mu}_{k}\) in Python code (for all \(k=1, \ldots, K\)).

Estimating the Covariance Parameters \(\boldsymbol{\Sigma}_{k}\)#

Theorem 12 (Update of the GMM Covariances)

The proof is taken from [Deisenroth et al., 2021].

The update of the covariance parameters \(\boldsymbol{\Sigma}_{k}, k=1, \ldots, K\) of the \(G M M\) is given by

where \(r^{(n)}_{k}\) and \(N_{k}\) are defined in (93) and (107), respectively.

Proof. To prove Theorem 12, our approach is to compute the partial derivatives of the log-likelihood \(\mathcal{L}\) with respect to the covariances \(\boldsymbol{\Sigma}_{k}\), set them to \(\mathbf{0}\), and solve for \(\boldsymbol{\Sigma}_{k}\). We start with our general approach

We already know \(1 / p\left(\boldsymbol{x}^{(n)} ; \boldsymbol{\theta}\right)\) from (11.16). To obtain the remaining partial derivative \(\partial p\left(\boldsymbol{x}^{(n)} ; \boldsymbol{\theta}\right) / \partial \boldsymbol{\Sigma}_{k}\), we write down the definition of the Gaussian distribution \(p\left(\boldsymbol{x}^{(n)} ; \boldsymbol{\theta}\right)\) (see (11.9)) and drop all terms but the \(k\) th. We then obtain

We now use the identities

and obtain (after some rearranging) the desired partial derivative required in (112) as

Putting everything together, the partial derivative of the log-likelihood with respect to \(\boldsymbol{\Sigma}_{k}\) is given by (do pay attention to color coding):

We see that the responsibilities \(r^{(n)}_{k}\) also appear in this partial derivative. Setting this partial derivative to \(\mathbf{0}\), we obtain the necessary optimality condition

where \(\boldsymbol{I}\) is the identity matrix.

By solving for \(\boldsymbol{\Sigma}_{k}\), we obtain

where \(\boldsymbol{r}_{k}\) is the probability vector defined in (108). This gives us a simple update rule for \(\boldsymbol{\Sigma}_{k}\) for \(k=1, \ldots, K\) and proves Theorem 12.

Similar to the update of \(\boldsymbol{\mu}_{k}\) in (104), we can interpret the update of the covariance in (111) as an importance-weighted expected value of the square of the centered data \(\tilde{\mathcal{S}}_{k}:=\left\{\boldsymbol{x}^{(1)}-\boldsymbol{\mu}_{k}, \ldots, \boldsymbol{x}^{(N)}-\boldsymbol{\mu}_{k}\right\}\)

Update Covariance Matrix of Running Example#

Example 18 (Running Example: Update Covariance Matrix)

In our example from Example 16, the (co)variance values are updated as follows:

Here we see that the means of the first and third mixture component move toward the regime of the data, whereas the mean of the second component does not change so dramatically.

The figure below illustrates the change in the (co)variance values. The figure on the left shows the GMM density prior to updating the (co)variance values, whereas the figure on the right shows the GMM density after updating the (co)variance values.

Show code cell source

1# Update the variances

2sigmas_new = np.sqrt(np.array([0.14, 0.44, 1.53]))

3

4# Create the updated GMM with new means and variances

5pdfs_new2, gmm_pdf_new2 = create_gmm(mus_new, sigmas_new, pis, x_range)

6

7fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(14, 5))

8

9# Plot the GMM with updated means

10plot_gmm(data, mus_new, sigmas, pis, x_range, pdfs_new, gmm_pdf_new, 'Updated Gaussian Mixture Model and Data Points with New Mean.', ax1)

11

12# Plot the updated GMM with new means and variances

13plot_gmm(data, mus_new, sigmas_new, pis, x_range, pdfs_new2, gmm_pdf_new2, 'Updated Gaussian Mixture Model and Data Points with New Variances', ax2)

14

15plt.tight_layout()

16plt.show()