Training a Mini-GPT to Learn Two-Digit Addition#

Motivation#

Generative Pre-trained Transformer (GPT) models are well known to perform poorly on arithmetic tasks such as addition. This should not come as a surprise, since GPT is a language model and not a math model. It is designed to train on a large corpus of text and learn the patterns and structure of natural language. While such a corpus does contain many arithmetic operations, they are encoded as text rather than as mathematical objects. After all, what GPT does best is predict the next token as a distribution over the entire vocabulary.

In one of the examples in the minGPT repository, Karpathy demonstrates training a GPT model to learn the addition of two numbers presented as strings. This is a simple task designed to illustrate how a decoder-only model can be trained to learn “addition”. The input is a sequence of characters representing an addition operation (like “12 + 35”) and the output is the sequence of characters representing the result of the addition (like “47”).

To this end, we replicate his example, which serves as a proof of concept that decoder-only models, which are often used for language-related tasks, can learn other patterns or “languages,” such as the “language” of arithmetic.

from __future__ import annotations

from tqdm.auto import tqdm

import inspect

import math

import os

import sys

import time

from dataclasses import dataclass

from pathlib import Path

from typing import Any, Callable, Dict, List, Optional, Tuple, Union

import matplotlib.pyplot as plt

import rich

import seaborn as sns

import torch

from omegaconf import OmegaConf as om

from rich.pretty import pprint

from torch import nn

from torch.optim import Optimizer

from torch.optim.lr_scheduler import LRScheduler

from torch.utils.data import DataLoader, Dataset, Subset, random_split

def find_root_dir(current_path: Path | None = None, marker: str = ".git") -> Path | None:
    """
    Find the root directory by searching for a directory or file that serves as a
    marker.

    Parameters
    ----------
    current_path : Path | None
        The starting path to search from. If None, the current working directory
        `Path.cwd()` is used.
    marker : str
        The name of the file or directory that signifies the root.

    Returns
    -------
    Path | None
        The path to the root directory. Returns None if the marker is not found.
    """
    if not current_path:
        current_path = Path.cwd()
    current_path = current_path.resolve()
    for parent in [current_path, *current_path.parents]:
        if (parent / marker).exists():
            return parent
    return None

current_file_path = Path(os.getcwd())

root_dir = find_root_dir(current_file_path, marker="omnivault")

if root_dir is not None:

sys.path.append(str(root_dir))

from omnivault._types._alias import Accuracy, Loss

from omnivault.core.logger import RichLogger

from omnivault.transformer.config.composer import Composer, DataConfig

from omnivault.transformer.config.constants import MaybeConstant

from omnivault.transformer.config.decoder import (

AddNormConfig,

DecoderBlockConfig,

DecoderConfig,

MultiHeadedAttentionConfig,

PositionwiseFeedForwardConfig,

)

from omnivault.transformer.config.generator import GeneratorConfig

from omnivault.transformer.config.global_ import MaybeGlobal

from omnivault.transformer.config.optim import OPTIMIZER_REGISTRY, AdamConfig, OptimizerConfig

from omnivault.transformer.config.scheduler import SCHEDULER_REGISTRY, LambdaLRConfig

from omnivault.transformer.config.trainer import TrainerConfig

from omnivault.transformer.core.callbacks import save_state

from omnivault.transformer.core.dataset import (

AdderDataset,

construct_dummy_batch_future_masks,

construct_dummy_batch_target_padding_masks,

create_loader,

split_dataset,

)

from omnivault.transformer.core.optim import apply_weight_decay_to_different_param_groups

from omnivault.transformer.core.tokenizer import AdderTokenizer

from omnivault.transformer.core.trainer import Trainer, TrainerEvent

from omnivault.transformer.core.vocabulary import AdderVocabulary

from omnivault.transformer.decoder.core import GPTDecoder

from omnivault.transformer.modules.attention.core import ScaledDotProductAttention

from omnivault.transformer.projects.adder.main import evaluate_and_generate_on_valid_epoch_end

from omnivault.transformer.utils.general_utils import create_directory, download_file

from omnivault.transformer.utils.visualization import show_attention_heatmaps

from omnivault.utils.config_management.omegaconf import load_yaml_config, merge_configs

from omnivault.utils.inspector.core import get_field_annotations

from reproducibility.seed import seed_all

else:

raise ImportError("Root directory not found.")


Config#

yaml_cfg = load_yaml_config(yaml_path=root_dir / "omnivault/transformer/projects/adder/config.yaml")

cfg = merge_configs(yaml_cfg, args_list=[])

om.resolve(cfg) # inplace ops

constants: MaybeConstant = MaybeConstant(

NUM_DIGITS=2,

TOKENS=[

"0",

"1",

"2",

"3",

"4",

"5",

"6",

"7",

"8",

"9",

"+",

"*",

"-",

"=",

"<BOS>",

"<EOS>",

"<PAD>",

"<UNK>",

],

)

global_config: MaybeGlobal = MaybeGlobal(seed=42, debug=True, debug_samples=100)

data_config: DataConfig = DataConfig(**cfg.data)

optimizer_config = AdamConfig(name="torch.optim.Adam", lr=0.2, betas=(0.9, 0.98), eps=1e-9)

cfg.trainer.device = "cpu"

cfg.trainer.max_epochs = 9

trainer_config = TrainerConfig(**cfg.trainer)

generate_config = GeneratorConfig(**cfg.generator)

composer = Composer(

constants=constants,

global_=global_config,

data=data_config,

optimizer=optimizer_config,

trainer=trainer_config,

generator=generate_config,

)

pprint(composer)

LOGGER = RichLogger(**composer.logger.model_dump(mode="python")).logger

Composer( │ constants=MaybeConstant( │ │ NUM_DIGITS=2, │ │ TOKENS=[ │ │ │ '0', │ │ │ '1', │ │ │ '2', │ │ │ '3', │ │ │ '4', │ │ │ '5', │ │ │ '6', │ │ │ '7', │ │ │ '8', │ │ │ '9', │ │ │ '+', │ │ │ '*', │ │ │ '-', │ │ │ '=', │ │ │ '<BOS>', │ │ │ '<EOS>', │ │ │ '<PAD>', │ │ │ '<UNK>' │ │ ] │ ), │ logger=LoggerConfig( │ │ log_file=None, │ │ module_name=None, │ │ propagate=False, │ │ log_root_dir=None, │ │ rich_handler_config={ │ │ │ 'level': 'INFO', │ │ │ 'console': MISSING, │ │ │ 'show_level': True, │ │ │ 'show_path': True, │ │ │ 'show_time': True, │ │ │ 'rich_tracebacks': True, │ │ │ 'markup': True, │ │ │ 'log_time_format': '[%Y-%m-%d %H:%M:%S]' │ │ } │ ), │ global_=MaybeGlobal(seed=42, debug=True, debug_samples=100), │ data=DataConfig( │ │ context_length=11, │ │ dataset_name='adder_dataset', │ │ dataset_size=10000, │ │ dataset_path='./data/adder/adder_dataset.txt', │ │ dataset_dir='./data/adder', │ │ dataset_url='https://raw.githubusercontent.com/gao-hongnan/omniverse/dev/omnivault/transformer/projects/adder/assets/adder_dataset.txt', │ │ split=[0.7, 0.2, 0.1], │ │ collate_fn={'batch_first': True, 'pad_token_id': 16}, │ │ train_loader={ │ │ │ 'batch_size': 32, │ │ │ 'shuffle': True, │ │ │ 'num_workers': 0, │ │ │ 'pin_memory': False, │ │ │ 'drop_last': False │ │ }, │ │ valid_loader={ │ │ │ 'batch_size': 32, │ │ │ 'shuffle': False, │ │ │ 'num_workers': 0, │ │ │ 'pin_memory': False, │ │ │ 'drop_last': False │ │ }, │ │ test_loader={ │ │ │ 'batch_size': 128, │ │ │ 'shuffle': False, │ │ │ 'num_workers': 0, │ │ │ 'pin_memory': False, │ │ │ 'drop_last': False │ │ } │ ), │ model=MISSING, │ optimizer=AdamConfig(name='torch.optim.Adam', lr=0.2, betas=(0.9, 0.98), eps=1e-09, weight_decay=0.0), │ criterion=MISSING, │ scheduler=MISSING, │ trainer=TrainerConfig( │ │ device=device(type='cpu'), │ │ max_epochs=9, │ │ log_every_n_steps=100, │ │ eval_every_n_steps=4, │ │ step_scheduler_on_batch_or_epoch='epoch', │ │ use_amp=False, │ │ autocast_config={'enabled': False, 'dtype': None, 
'cache_enabled': None}, │ │ scaler_config={ │ │ │ 'enabled': False, │ │ │ 'init_scale': 65536.0, │ │ │ 'growth_factor': 2.0, │ │ │ 'backoff_factor': 0.5, │ │ │ 'growth_interval': 2000 │ │ }, │ │ gradient_accumulation_steps=1, │ │ clip_grad_norm={'max_norm': 1.0, 'norm_type': 2.0, 'error_if_nonfinite': False, 'foreach': None}, │ │ apply_weight_decay_to_different_param_groups=False, │ │ save_dir='./data/adder/checkpoints/2024-05-16_14-38-11', │ │ save_every_epoch=False, │ │ save_best_only=True, │ │ monitor='valid_this_epoch_average_loss', │ │ mode='min' │ ), │ generator=GeneratorConfig(max_tokens=4, temperature=1.0, greedy=True, top_k=None, top_p=None), │ distributed=DistributedConfig( │ │ log_dir='logs_distributed', │ │ log_level=20, │ │ log_on_master_or_all=True, │ │ master_addr='localhost', │ │ master_port='29500', │ │ nnodes=1, │ │ nproc_per_node=1, │ │ node_rank=0, │ │ world_size=1, │ │ backend='gloo', │ │ init_method='env://' │ ) )

Reproducibility#

Reproducibility in deep learning ensures that experiments can be repeated with identical results, critical for verifying research findings and deploying reliable models. Distributed training introduces complexity because it involves multiple computation units which may not synchronize their random states perfectly. If training is paused and resumed, ensuring each unit starts with the correct seed to reproduce the exact computational path becomes challenging. To address this, one can find more sophisticated examples in libraries like Composer, where the whole library’s core is built around training deep neural nets in any environment (distributed or not) with reproducibility in mind.


print(get_field_annotations(func_or_method=seed_all)[0])

print("\n")

print(inspect.getdoc(seed_all))

seed_all(composer.global_.seed, seed_torch=True, set_torch_deterministic=False)

[('seed', <class 'int'>, 1992), ('seed_torch', <class 'bool'>, True), ('set_torch_deterministic', <class 'bool'>, True)]

Seeds all relevant random number generators to ensure reproducible

outcomes. Optionally seeds PyTorch and activates deterministic

behavior in PyTorch based on the flags provided.

Parameters

----------

seed : int, default=1992

The seed number for reproducibility.

seed_torch : bool, default=True

If True, seeds PyTorch's RNGs.

set_torch_deterministic : bool, default=True

If True, activates deterministic mode in PyTorch.

Returns

-------

seed : int

The seed number used for reproducibility.

42
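As a small illustration of the principle behind seeding, the sketch below uses only Python's standard `random` module. It is not the implementation of `seed_all`; the helper name `make_numbers` is hypothetical and exists only for this example. It shows that two runs started from the same seed draw the same numbers.

```python
from __future__ import annotations

import random


def make_numbers(seed: int, n: int = 5) -> list[int]:
    """Draw n pseudo-random integers from a generator seeded with `seed`."""
    # A local generator keeps the example independent of global RNG state.
    rng = random.Random(seed)
    return [rng.randint(0, 99) for _ in range(n)]


first_run = make_numbers(seed=42)
second_run = make_numbers(seed=42)

# Same seed, same sequence of draws.
print(first_run == second_run)
```

A full training setup has more generators to seed (for example NumPy and PyTorch, on every device), which is what a helper like `seed_all` takes care of.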

Vocabulary#

vocabulary = AdderVocabulary.from_tokens(tokens=constants.TOKENS, num_digits=constants.NUM_DIGITS) # type: ignore[attr-defined]

token_to_index = vocabulary.token_to_index

index_to_token = vocabulary.index_to_token

vocab_size = vocabulary.vocab_size

pprint(token_to_index)

pprint(index_to_token)

pprint(vocab_size)

{ │ '0': 0, │ '1': 1, │ '2': 2, │ '3': 3, │ '4': 4, │ '5': 5, │ '6': 6, │ '7': 7, │ '8': 8, │ '9': 9, │ '+': 10, │ '*': 11, │ '-': 12, │ '=': 13, │ '<BOS>': 14, │ '<EOS>': 15, │ '<PAD>': 16, │ '<UNK>': 17 }

{ │ 0: '0', │ 1: '1', │ 2: '2', │ 3: '3', │ 4: '4', │ 5: '5', │ 6: '6', │ 7: '7', │ 8: '8', │ 9: '9', │ 10: '+', │ 11: '*', │ 12: '-', │ 13: '=', │ 14: '<BOS>', │ 15: '<EOS>', │ 16: '<PAD>', │ 17: '<UNK>' }

18

Assign vocab_size to composer.model because we don’t want to hardcode

vocab_size beforehand, and want to derive concrete values from the

Vocabulary object.

try:
    composer.model.vocab_size = vocab_size
except AttributeError as err:
    LOGGER.error(err)

[2024-05-16 14:38:11] ERROR _Missing instances are immutable 2890644827.py:4

Ah okay haha, this is the price of writing overly complex and useless code to look fancy: you end up with a mess. The assignment fails because composer.model is still the immutable MISSING placeholder. Anyway, we will handle this later on, where we can explicitly instantiate the model config class.

Tokenization#

tokenizer = AdderTokenizer(vocabulary=vocabulary)

assert tokenizer.vocabulary.token_to_index == token_to_index

assert tokenizer.vocabulary.index_to_token == index_to_token

pprint(tokenizer.encode("1"))

[14, 1, 15]

sequence = "15+57=072"

sequences = ["15+57=072", "01+02=003"]

encoded_sentence = tokenizer.encode(sequence)

print(f"Encoded sentence: {encoded_sentence}")

decoded_sentence = tokenizer.decode(encoded_sentence)

print(f"Decoded sentence: {decoded_sentence}")

Encoded sentence: [14, 1, 5, 10, 5, 7, 13, 0, 7, 2, 15]

Decoded sentence: 15+57=072

encoded_sentences = tokenizer.encode_batch(sequences) # type: ignore[attr-defined]

print(f"Encoded sentences: {encoded_sentences}")

decoded_sentences = tokenizer.decode_batch(encoded_sentences) # type: ignore[attr-defined]

print(f"Decoded sentences: {decoded_sentences}")

Encoded sentences: [[14, 1, 5, 10, 5, 7, 13, 0, 7, 2, 15], [14, 0, 1, 10, 0, 2, 13, 0, 0, 3, 15]]

Decoded sentences: ['15+57=072', '01+02=003']
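To make the round trip above concrete, here is a minimal character-level sketch in plain Python. It is not the `AdderTokenizer` implementation; the names `TOKEN_TO_INDEX`, `encode` and `decode` are assumptions for illustration, and the token list is copied from the config above.

```python
from __future__ import annotations

TOKENS = [
    "0", "1", "2", "3", "4", "5", "6", "7", "8", "9",
    "+", "*", "-", "=", "<BOS>", "<EOS>", "<PAD>", "<UNK>",
]
TOKEN_TO_INDEX = {token: index for index, token in enumerate(TOKENS)}
INDEX_TO_TOKEN = {index: token for token, index in TOKEN_TO_INDEX.items()}
SPECIAL_TOKENS = {"<BOS>", "<EOS>", "<PAD>", "<UNK>"}


def encode(sequence: str) -> list[int]:
    """Map each character to its index and wrap the result in <BOS> ... <EOS>."""
    unk = TOKEN_TO_INDEX["<UNK>"]
    body = [TOKEN_TO_INDEX.get(char, unk) for char in sequence]
    return [TOKEN_TO_INDEX["<BOS>"], *body, TOKEN_TO_INDEX["<EOS>"]]


def decode(indices: list[int]) -> str:
    """Map indices back to tokens and drop the special tokens."""
    tokens = [INDEX_TO_TOKEN.get(index, "<UNK>") for index in indices]
    return "".join(token for token in tokens if token not in SPECIAL_TOKENS)


encoded = encode("15+57=072")
decoded = decode(encoded)
print(encoded)  # [14, 1, 5, 10, 5, 7, 13, 0, 7, 2, 15]
print(decoded)  # 15+57=072
```

Because every symbol is a single character, no merging or subword logic is needed; the vocabulary lookup is the whole tokenizer.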

Dataset#

Create Dataset#

def pad_number(num: int, length: int) -> str:
    """
    Pad numbers with zeros in front so that they have uniform length.

    Note, if a + b = c and num digits allowed to add is 2, then for
    a and b we always pad to length 2, but for c we always pad to length 3.

    Example
    -------
    6 + 90 = 96 -> 06 + 90 = 096

    Parameters
    ----------
    num : int
        Number to be padded.
    length : int
        Length of the resulting padded number string.

    Returns
    -------
    str
        Padded number string.
    """
    return str(num).zfill(length)

def equation_to_string(a: int, b: int, c: int, num_digits: int) -> str:

"""

Formats the addition equation as a string.

Parameters

----------

a : int

First addend.

b : int

Second addend.

c : int

Sum of a and b.

num_digits : int

Number of digits each number in the equation should have.

Returns

-------

str

Formatted equation string.

"""

padded_a = pad_number(a, num_digits)

padded_b = pad_number(b, num_digits)

padded_c = pad_number(c, num_digits + 1) # note the padding here!

return f"{padded_a}+{padded_b}={padded_c}"

def decode_equation(
    vocab: AdderVocabulary, equation: torch.Tensor | List[int], show_special_tokens: bool = False
) -> str:
    """
    Convert an equation in list format to string format.

    Parameters
    ----------
    vocab : AdderVocabulary
        The vocabulary used to map indices back to tokens.
    equation : torch.Tensor | List[int]
        The equation in list (or tensor) format.
    show_special_tokens : bool
        If True, keep special tokens such as <BOS> and <EOS> in the output.

    Returns
    -------
    str
        The equation in string format.
    """
    if isinstance(equation, torch.Tensor):
        equation = equation.tolist()

    # Look tokens up through `vocab` instead of the notebook-level global, and
    # fall back to the UNK token (not its index) for unknown indices.
    decoded_equation = "".join([str(vocab.index_to_token.get(x, vocab.UNK)) for x in equation])

    if show_special_tokens:
        return decoded_equation

    return decoded_equation.replace("<BOS>", "").replace("<EOS>", "").replace("<PAD>", "").replace("<UNK>", "")

def batch_decode_equation(vocab: AdderVocabulary, equations: torch.Tensor | List[List[int]]) -> List[str]:

decoded_equations = []

for equation in equations:

decoded_equation = decode_equation(vocab, equation)

decoded_equations.append(decoded_equation)

return decoded_equations

def encode_equation(vocab: AdderVocabulary, equation: str, num_digits: int, device: torch.device) -> torch.Tensor:
    """
    Convert an equation (up to the equal sign in it) in string format to a list.

    Parameters
    ----------
    vocab : AdderVocabulary
        The vocabulary used to map tokens to indices.
    equation : str
        The equation in string format.
    num_digits : int
        Number of digits each number in the equation should have.
    device : torch.device
        The device to which the tensor should be sent.

    Returns
    -------
    torch.Tensor
        The equation in list format as a tensor.
    """
    plus_idx = equation.index(vocab.ADD)
    equal_idx = equation.index(vocab.EQUAL)

    BOS = vocab.token_to_index[vocab.BOS]
    UNK = vocab.token_to_index[vocab.UNK]

    a = pad_number(int(equation[:plus_idx]), num_digits)
    b = pad_number(int(equation[plus_idx + 1 : equal_idx]), num_digits)

    new_equation = f"{a}+{b}="

    # Use the mapping from `vocab` rather than the notebook-level global.
    return torch.tensor(
        [BOS] + [vocab.token_to_index.get(n, UNK) for n in new_equation], dtype=torch.int
    ).to(device)

def create_add_dataset(
    vocab: AdderVocabulary, num_digits: int, dataset_size: int, rng_seed: int = 1337
) -> Tuple[List[torch.Tensor], List[str]]:
    BOS = vocab.token_to_index[vocab.BOS]
    EOS = vocab.token_to_index[vocab.EOS]
    UNK = vocab.token_to_index[vocab.UNK]

    rng = torch.Generator()
    rng.manual_seed(rng_seed)

    max_num = 10**num_digits - 1

    dataset_str = []
    for _ in range(dataset_size):
        # Sample both addends uniformly from [0, max_num].
        a = torch.randint(low=0, high=max_num + 1, size=(1,), generator=rng).item()
        b = torch.randint(low=0, high=max_num + 1, size=(1,), generator=rng).item()
        c = a + b
        equation = equation_to_string(a, b, c, num_digits)
        dataset_str.append(equation)

    # Use the mapping from `vocab` rather than the notebook-level global.
    dataset_tensor = [
        torch.tensor([BOS] + [vocab.token_to_index.get(n, UNK) for n in x] + [EOS]) for x in dataset_str
    ]
    return dataset_tensor, dataset_str

dataset_tensor, dataset_str = create_add_dataset(vocab=vocabulary, num_digits=2, dataset_size=4)

pprint(dataset_tensor)

pprint(dataset_str)

[ │ tensor([14, 1, 5, 10, 5, 7, 13, 0, 7, 2, 15]), │ tensor([14, 9, 2, 10, 0, 0, 13, 0, 9, 2, 15]), │ tensor([14, 9, 5, 10, 5, 3, 13, 1, 4, 8, 15]), │ tensor([14, 1, 5, 10, 1, 0, 13, 0, 2, 5, 15]) ]

['15+57=072', '92+00=092', '95+53=148', '15+10=025']

print(f"Decoded equation: {decode_equation(vocabulary, dataset_tensor[0])}")

assert (

decode_equation(vocabulary, dataset_tensor[0])

== dataset_str[0]

== decode_equation(vocabulary, [15, 1, 5, 10, 5, 7, 13, 0, 7, 2, 14])

)

Decoded equation: 15+57=072

If we only want the prompt, we can encode the equation up to and including the equal sign, as below.

print(f"Encoded equation: {encode_equation(vocabulary, dataset_str[0], num_digits=2, device=composer.trainer.device)}")

torch.testing.assert_close(

encode_equation(vocabulary, dataset_str[0], num_digits=2, device=composer.trainer.device),

torch.tensor([14, 1, 5, 10, 5, 7, 13], dtype=torch.int32),

)

Encoded equation: tensor([14, 1, 5, 10, 5, 7, 13], dtype=torch.int32)

Uncomment the code below to write the dataset to a text file. And yes, I was too lazy to add a config variable for whether to generate the dataset or not.

# dataset, dataset_str = create_add_dataset(vocabulary, constants.NUM_DIGITS, composer.data.dataset_size)
# write dataset_str to a file
# with open("dataset_str.txt", "w") as f:
#     for item in dataset_str:
#         f.write("%s\n" % item)

Encoding Strategy Overview#

Our strategy for encoding arithmetic expressions is fairly self-explanatory: given an equation D1 + D2 = D3, we encode it as <BOS>D1+D2=D3<EOS>, where D1 and D2 are zero-padded to num_digits digits and D3 is zero-padded to num_digits + 1 digits (for example, 15+57=072). This is deliberately verbose for clarity's sake. Karpathy’s encoding strategy instead simplifies arithmetic expressions by concatenating the digits of the operands and the result into a single string without explicit symbols for operations or equality. This method relies on a fixed number of digits (num_digits) for operands, which streamlines the model’s interpretation of the sequence. For example, if num_digits is set to 2, every encoded expression follows a predictable pattern: the first two digits represent the first operand, the next two digits represent the second operand, and the result is encoded as 3 digits because the maximum sum of two 2-digit numbers is 198 (99 + 99), which has 3 digits. The digits of the result are encoded in reverse order. This counterintuitive choice makes the task easier for a GPT model to learn: the model generates tokens from left to right, so a reversed sum lets it emit the least significant digit first, mimicking the traditional right-to-left process of addition with carries.

To illustrate, let’s examine the encoding of arithmetic expressions with

num_digits=2:

For the expression 6 + 39 = 45, we have the following:

- The first two digits 06 represent the number 6, zero-padded to adhere to the num_digits=2 requirement.
- The next two digits 39 represent the number 39, already fitting the digit requirement.
- The final part 054 represents the result 45, reversed to 54 and preceded by a zero to maintain the total length of \(2n + (n + 1) = 7\) digits for num_digits=2.
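The compact encoding can be sketched in a few lines of plain Python. The function name `encode_compact` is hypothetical, and the order of operations is an assumption: this sketch zero-pads the sum to num_digits + 1 digits first and then reverses it, which keeps the encoding unambiguous. Under that order, 6 + 39 encodes as 0639540. If the sum were instead reversed before padding, the suffix would be 054, but then sums such as 4 and 40 would both map to 004.

```python
def encode_compact(a: int, b: int, num_digits: int = 2) -> str:
    """Concatenate zero-padded operands with the zero-padded, reversed sum."""
    padded_a = str(a).zfill(num_digits)
    padded_b = str(b).zfill(num_digits)
    # Assumption: pad the sum to num_digits + 1 digits, then reverse it.
    reversed_sum = str(a + b).zfill(num_digits + 1)[::-1]
    return padded_a + padded_b + reversed_sum


print(encode_compact(6, 39))   # 0639540
print(encode_compact(85, 50))  # 8550531
```

Either way, every encoded string has exactly \(2n + (n + 1)\) characters, so the model never has to infer where one number ends and the next begins.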

Constructing PyTorch Dataset#

create_directory(composer.data.dataset_dir)

download_file(url=composer.data.dataset_url, output_path=composer.data.dataset_path)

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 97k 100 97k 0 0 205k 0 --:--:-- --:--:-- --:--:-- 207k

with open(composer.data.dataset_path, "r") as file:
    sequences = [line.strip() for line in file]

dataset = AdderDataset(data=sequences, tokenizer=tokenizer)

pprint(next(iter(dataset)))

( │ tensor([14, 1, 5, 10, 5, 7, 13, 0, 7, 2]), │ tensor([16, 16, 16, 16, 16, 16, 0, 7, 2, 15]), │ tensor([True, True, True, True, True, True, True, True, True, True]), │ tensor([[ True, False, False, False, False, False, False, False, False, False], │ │ [ True, True, False, False, False, False, False, False, False, False], │ │ [ True, True, True, False, False, False, False, False, False, False], │ │ [ True, True, True, True, False, False, False, False, False, False], │ │ [ True, True, True, True, True, False, False, False, False, False], │ │ [ True, True, True, True, True, True, False, False, False, False], │ │ [ True, True, True, True, True, True, True, False, False, False], │ │ [ True, True, True, True, True, True, True, True, False, False], │ │ [ True, True, True, True, True, True, True, True, True, False], │ │ [ True, True, True, True, True, True, True, True, True, True]]) )

Construct Batches, Collate Function and DataLoader#

We first reverse engineer what our dataset is returning. The disclaimer here is

that for decoder only models like GPT, many people often omit the padding mask

since all the samples \(\mathbf{x}\) are chunked to sequence/context length of

window size \(T\), and future masks are usually handled within the Attention

class since we will never attend to the future tokens. However, for the sake of

clarity, we will include the padding and future mask in the dataset (i.e.

actually it is for the sake of my own understanding when I started to implement

decoder from scratch).

input, target, target_padding_mask, future_mask = next(iter(dataset))

Input and Target#

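If you’ve read my section here, you will recall that, given an input sequence \(\mathbf{x}\), the target sequence \(\mathbf{y}\) is simply the input sequence \(\mathbf{x}\) shifted by one time step to the left. In this dataset, the target positions that still belong to the question (everything up to and including the = sign) are additionally replaced with the PAD index 16, as the output below shows.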

print(f"Input : {input}")

print(f"Target: {target}")

Input : tensor([14, 1, 5, 10, 5, 7, 13, 0, 7, 2])

Target: tensor([16, 16, 16, 16, 16, 16, 0, 7, 2, 15])
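The printed input and target can be reproduced with a short sketch in plain Python. This is a reconstruction from the output above, not the actual `AdderDataset` code; the helper name `make_input_and_target` is hypothetical, and the indices 16 (PAD) and 13 (=) are taken from the vocabulary printed earlier.

```python
from __future__ import annotations

PAD, EQUAL = 16, 13  # indices from the vocabulary shown earlier


def make_input_and_target(sequence: list[int]) -> tuple[list[int], list[int]]:
    """Split a full token sequence into a model input and a masked target."""
    inputs = sequence[:-1]  # everything except the last token
    target = sequence[1:]   # the same sequence shifted left by one step
    equal_position = sequence.index(EQUAL)
    # Replace targets that still belong to the question (up to and including
    # the "=" token) with PAD, leaving only the answer and <EOS>.
    target = [PAD] * equal_position + target[equal_position:]
    return inputs, target


sequence = [14, 1, 5, 10, 5, 7, 13, 0, 7, 2, 15]  # <BOS>15+57=072<EOS>
inputs, target = make_input_and_target(sequence)
print(inputs)  # [14, 1, 5, 10, 5, 7, 13, 0, 7, 2]
print(target)  # [16, 16, 16, 16, 16, 16, 0, 7, 2, 15]
```

Masking the question part of the target is a common way to focus training on the answer digits, provided the loss is configured to ignore the PAD index.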

Target Padding Mask#

When you’re dealing with sequences of different lengths, you pad the shorter

sequences with a special token PAD (usually \(0\) or \(-100\)) to make them the

same length as the longest one in the batch. These paddings should not

contribute to the model’s learning, so you need to mask them out. In practice,

you’ll often see a mask argument in Attention layers in PyTorch where if

True, the attention scores are set to -inf for the padded positions so that

these positions become zero after the softmax operation, thereby not

contributing to the weighted sum of the input sequence.

In a decoder-only model like GPT, the input sequence is essentially the target. The model aims to generate tokens that come after the given input, treating it as the “history” or “context” for the task of text generation. Unlike encoder-decoder models like the original Transformer, where the encoder processes a source sequence and the decoder generates a target sequence, a decoder-only model works solely with what would traditionally be considered the target sequence.

Consequently, the terminology “target padding mask” is more intuitive in the context of encoder-decoder models, where the distinction between source (input) and target (output) sequences is clear. The distinction is blurred in decoder-only models like GPT, as the model processes input to predict the next token in a sequence. Here, the source is essentially the target at different stages of processing: the model uses previous tokens (source) to predict the next token (target). However, during my implementation, I was mainly referring to transformer models that use the encoder-decoder architecture, and the terminology therefore stemmed from that context.

A target padding mask is a binary mask that ignores pad tokens in the source input (in a decoder-only model, the source is the target). Its shape is \((\mathcal{B}, T)\).

Let’s illustrate the target padding mask with an example. Suppose we have a batch of sequences with different lengths:

target_batch = [

[5, 7, 9],

[8, 6],

[3, 12, 4, 11, 17],

[2, 1, 4, 5],

]

pprint(target_batch)

[[5, 7, 9], [8, 6], [3, 12, 4, 11, 17], [2, 1, 4, 5]]

If we try to “batch” these sequences into a single tensor, PyTorch throws an error indicating that all sequences must have the same length.

try:
    target_batch = torch.tensor(target_batch, dtype=torch.int64)
except ValueError as err:
    LOGGER.error(err)

[2024-05-16 14:38:12] ERROR expected sequence of length 3 at dim 1 (got 2) 1205213247.py:4

To address this issue, we could pad the sequences to the same length and create a mask to indicate

which positions are padded. We pad the shorter sequences with a special token PAD

to make them the same length as the longest one in the batch.

PAD = vocabulary.token_to_index[vocabulary.PAD]

max_len = max(len(seq) for seq in target_batch)

target_batch = [seq + [PAD] * (max_len - len(seq)) for seq in target_batch]

pprint(target_batch)

target_batch = torch.tensor(target_batch, dtype=torch.int64)

pprint(target_batch)

[[5, 7, 9, 16, 16], [8, 6, 16, 16, 16], [3, 12, 4, 11, 17], [2, 1, 4, 5, 16]]

tensor([[ 5, 7, 9, 16, 16], │ │ [ 8, 6, 16, 16, 16], │ │ [ 3, 12, 4, 11, 17], │ │ [ 2, 1, 4, 5, 16]])

batch_size, seq_len = target_batch.size()

target_padding_mask = target_batch != PAD

pprint(target_padding_mask)

assert target_padding_mask.size() == (batch_size, seq_len) == (4, 5)

tensor([[ True, True, True, False, False], │ │ [ True, True, False, False, False], │ │ [ True, True, True, True, True], │ │ [ True, True, True, True, False]])

Of course, we would need a batch of these masks, so we would have a shape of \((\mathcal{B}, T)\) like mentioned above. As we will see later, we will still need to broadcast the shape to \((\mathcal{B}, 1, T, T)\) to match the shape of the attention scores.

Theoretically speaking, the sequence length \(T\) may vary across samples \(\mathbf{x}\). However, we usually have the same length for all samples in GPT, and in this particular case each sample necessarily has the same length by design. For the sake of explanation, note that our Dataset yields one sample at a time and does not need to worry about differing sequence lengths across other samples in the dataset \(\mathcal{S}\). In deep learning, however, we train in mini-batches \(\mathcal{B}\), and sequences of differing lengths within a batch cause problems (for example, they cannot be stacked into a single tensor, so matrix multiplication will not work).

Future Mask#

In the decoder, each position can only attend to positions that come before it in the sequence to maintain the auto-regressive property. This is different from the encoder, where all positions can attend to all other positions.

The future mask is a look-ahead mask that ensures each position only attends to itself and to positions before it in the sequence. We mask out future positions (i.e., positions that come after the current position) so that they don’t contribute to the current attention scores. Before the softmax operation, we set the scores at these positions to -inf so that they become zero after the softmax, effectively zeroing out the attention weights for future positions. What does zeroing out these masked weights actually do? The attention mechanism can be thought of as a weighted average of all the tokens in the input sequence. Each token is assigned a weight, with higher weights indicating more relevance to the token under consideration. If a certain token should not be considered at all (e.g., it’s a future token that should not be visible to the current decoder step, or it’s a padding token), its weight should be zero.

The shape of the future mask is \((T, T)\) for a target sequence/sample \(\mathbf{x}\) of length \(T\). Let’s see a concrete example to illustrate the future mask.

seq_len = 5

future_mask = torch.triu(torch.ones(seq_len, seq_len), diagonal=1)

future_mask = future_mask == 0

pprint(future_mask)

assert future_mask.size() == (seq_len, seq_len) == (5, 5)

tensor([[ True, False, False, False, False], │ │ [ True, True, False, False, False], │ │ [ True, True, True, False, False], │ │ [ True, True, True, True, False], │ │ [ True, True, True, True, True]])
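To make the effect of the -inf masking concrete, here is a minimal sketch in plain Python of a softmax over one row of attention scores. The helper `masked_softmax` and the example scores are made up for illustration, and the sketch assumes at least one position in the row is left unmasked.

```python
from __future__ import annotations

import math


def masked_softmax(scores: list[float], mask: list[bool]) -> list[float]:
    """Softmax over `scores`, where positions with mask=False get zero weight."""
    # Masked positions are set to -inf before the softmax.
    masked = [score if keep else float("-inf") for score, keep in zip(scores, mask)]
    peak = max(masked)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in masked]  # exp(-inf) == 0.0
    total = sum(exps)
    return [value / total for value in exps]


scores = [2.0, 1.0, 0.5, 3.0, -1.0]
mask = [True, True, True, False, False]  # third row of the future mask above
weights = masked_softmax(scores, mask)
print(weights)
```

The last two weights are exactly zero, and the remaining three still sum to one, so the masked tokens drop out of the weighted average entirely, no matter how large their raw scores were.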

Merge Padding and Future Masks#

We see from our decoder implementation below that one of the methods creates the target masks. In other words, it creates the target padding masks and future masks and merges them together.

def create_target_masks(
    self,
    batch_size: int,
    seq_len: int,
    target_padding_masks: torch.BoolTensor | NotGiven = NOT_GIVEN,
    future_masks: torch.BoolTensor | NotGiven = NOT_GIVEN,
) -> torch.BoolTensor:
    target_masks_shape = (batch_size, 1, seq_len, seq_len)
    if target_padding_masks is NOT_GIVEN and future_masks is NOT_GIVEN:
        target_padding_masks = cast(
            torch.BoolTensor, construct_dummy_batch_target_padding_masks(batch_size, seq_len)
        )
        future_masks = cast(torch.BoolTensor, construct_dummy_batch_future_masks(batch_size, seq_len))

    if target_padding_masks is NOT_GIVEN:
        target_padding_masks = cast(
            torch.BoolTensor, construct_dummy_batch_target_padding_masks(batch_size, seq_len)
        )

    if future_masks is NOT_GIVEN:
        future_masks = cast(torch.BoolTensor, construct_dummy_batch_future_masks(batch_size, seq_len))

    assert target_padding_masks.shape == future_masks.shape == target_masks_shape  # type: ignore[union-attr]

    return cast(
        torch.BoolTensor,
        torch.logical_and(cast(torch.Tensor, target_padding_masks), cast(torch.Tensor, future_masks)).bool(),
    )

The purpose of applying logical_and between target_padding_mask and

future_mask is to combine the constraints from both masks when calculating

self-attention scores in the transformer’s decoder. The target_padding_mask is

designed to mask out the padding tokens in the input sequence, while the

future_mask ensures that a given position cannot attend to future positions in

the sequence. By combining these masks, you can perform the necessary masking

for both padding and future tokens in a single step.

Here’s how it works:

- target_padding_mask: Masks out the padding tokens so that they don’t contribute to the attention calculations. True values mean “attend to this token,” and False values mean “ignore this token.”
- future_mask: The future mask is created as a lower triangular matrix, where the lower triangle, including the diagonal, is filled with ones, and the upper triangle is filled with zeros. It masks out future tokens in a sequence so that a token at a given position can only attend to positions that come before it (and itself). True values mean “attend to this token,” and False values mean “ignore this token.”
- logical_and(target_padding_mask, future_mask): Combines the two masks. A True in the resulting mask means that the condition for both padding and future attention is satisfied.

By combining these two masks, the decoder obeys the autoregressive property,

ensuring it doesn’t see future tokens, while also ignoring padding tokens in the

input sequence. We may term it the target_mask.
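As a minimal sketch of the combination described above, the two masks can be built and combined for a toy batch as follows. The token ids, `PAD_ID`, and the helper name `build_target_mask` are illustrative assumptions, not the notebook's own utilities.

```python
import torch

PAD_ID = 0  # hypothetical padding id, chosen only for this example


def build_target_mask(token_ids: torch.Tensor, pad_id: int = PAD_ID) -> torch.Tensor:
    """Combine a padding mask and a future mask into one boolean mask of shape (B, L, L)."""
    batch_size, seq_len = token_ids.shape
    # True where the token is real, False where it is padding: shape (B, L)
    padding_mask = token_ids != pad_id
    # Lower-triangular matrix, position i may look at positions <= i: shape (L, L)
    future_mask = torch.tril(torch.ones(seq_len, seq_len, dtype=torch.bool))
    # Broadcast both masks to (B, L, L) and require both conditions to hold
    padding_mask = padding_mask.view(batch_size, 1, seq_len).expand(batch_size, seq_len, seq_len)
    future_mask = future_mask.view(1, seq_len, seq_len).expand(batch_size, -1, -1)
    return torch.logical_and(padding_mask, future_mask)


tokens = torch.tensor([[5, 6, 7, PAD_ID, PAD_ID]])  # one sample, last two tokens padded
target_mask = build_target_mask(tokens)
print(target_mask[0].int())
```

Each row of the result describes one attending position, and each column one attended-to position.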

First Sample First Token#

target_padding_mask has size [4, 5]. We zoom in to the first row (sample), whose length 5 is the sequence length. Its values are T, T, T, F, F, indicating that the last 2 tokens are padding.

future_mask has size [5, 5]. Note that this is independent of the batch size: every sample has the same future mask of shape [L, L], and this L=5 must equal the sequence length in target_padding_mask.

First, let’s consider one batch of 4 samples. We start by broadcasting future_mask to [4, 5, 5], because we want each sample (row) in the batch to have the same future mask, as shown below:

pprint(future_mask)

future_mask = future_mask.view(1, seq_len, seq_len).expand(size=(batch_size, -1, -1))

pprint(future_mask)

pprint(future_mask.shape)

tensor([[ True, False, False, False, False],
        [ True,  True, False, False, False],
        [ True,  True,  True, False, False],
        [ True,  True,  True,  True, False],
        [ True,  True,  True,  True,  True]])

tensor([[[ True, False, False, False, False],
         [ True,  True, False, False, False],
         [ True,  True,  True, False, False],
         [ True,  True,  True,  True, False],
         [ True,  True,  True,  True,  True]],

        [[ True, False, False, False, False],
         [ True,  True, False, False, False],
         [ True,  True,  True, False, False],
         [ True,  True,  True,  True, False],
         [ True,  True,  True,  True,  True]],

        [[ True, False, False, False, False],
         [ True,  True, False, False, False],
         [ True,  True,  True, False, False],
         [ True,  True,  True,  True, False],
         [ True,  True,  True,  True,  True]],

        [[ True, False, False, False, False],
         [ True,  True, False, False, False],
         [ True,  True,  True, False, False],
         [ True,  True,  True,  True, False],
         [ True,  True,  True,  True,  True]]])

torch.Size([4, 5, 5])

Now we can zoom in to one particular sample, since both target_padding_mask and future_mask have the batch size as their first dimension. What is still missing is that target_padding_mask must be broadcast to the same shape as future_mask, that is, from [4, 5] to [4, 5, 5]. But why? For simplicity, we slice out the first sample of both below.

The first row of the future_mask of the first sample is [T, F, F, F, F]. This is the future mask of the first token in the sequence. It might initially seem confusing that it has 5 elements, but each element states whether the first token may attend to the token at the respective position in the sequence:

The first element (True) indicates that the first token can attend to itself.

The next four elements (False) specify that the first token should not attend to any of the future tokens in the sequence.

The padding mask has to be expressed in the same layout, where a row is the attending token and a column is the attended-to token. Recall that the first sample’s target_padding_mask is [T, T, T, F, F]. We want to make sure that no token attends to a position marked False, so this whole vector is copied into every row of a [5, 5] matrix: whichever token is attending, positions 4 and 5 are padding and must be ignored.

Tricky: after broadcasting, every row is the same [T, T, T, F, F], and each entry describes the attended-to position (the column), not the attending token (the row). The first row therefore does not become [T, T, T, T, T] merely because the first token itself is not a padding token.

For the first token, the element-wise AND of its padding row [T, T, T, F, F] with its future_mask row [T, F, F, F, F] gives [T, F, F, F, F]: the first token attends only to itself, which is neither padding nor in the future.

pprint(target_padding_mask)

pprint(target_padding_mask[0])

target_padding_mask = target_padding_mask.view(batch_size, 1, seq_len).expand(size=(batch_size, seq_len, seq_len))

pprint(target_padding_mask)

pprint(target_padding_mask.shape)

tensor([[ True,  True,  True, False, False],
        [ True,  True, False, False, False],
        [ True,  True,  True,  True,  True],
        [ True,  True,  True,  True, False]])

tensor([ True,  True,  True, False, False])

tensor([[[ True,  True,  True, False, False],
         [ True,  True,  True, False, False],
         [ True,  True,  True, False, False],
         [ True,  True,  True, False, False],
         [ True,  True,  True, False, False]],

        [[ True,  True, False, False, False],
         [ True,  True, False, False, False],
         [ True,  True, False, False, False],
         [ True,  True, False, False, False],
         [ True,  True, False, False, False]],

        [[ True,  True,  True,  True,  True],
         [ True,  True,  True,  True,  True],
         [ True,  True,  True,  True,  True],
         [ True,  True,  True,  True,  True],
         [ True,  True,  True,  True,  True]],

        [[ True,  True,  True,  True, False],
         [ True,  True,  True,  True, False],
         [ True,  True,  True,  True, False],
         [ True,  True,  True,  True, False],
         [ True,  True,  True,  True, False]]])

torch.Size([4, 5, 5])

pprint(target_padding_mask[0])

pprint(future_mask[0])

pprint(target_padding_mask[0] & future_mask[0])

tensor([[ True,  True,  True, False, False],
        [ True,  True,  True, False, False],
        [ True,  True,  True, False, False],
        [ True,  True,  True, False, False],
        [ True,  True,  True, False, False]])

tensor([[ True, False, False, False, False],
        [ True,  True, False, False, False],
        [ True,  True,  True, False, False],
        [ True,  True,  True,  True, False],
        [ True,  True,  True,  True,  True]])

tensor([[ True, False, False, False, False],
        [ True,  True, False, False, False],
        [ True,  True,  True, False, False],
        [ True,  True,  True, False, False],
        [ True,  True,  True, False, False]])

First Sample Fourth Token#

Now let’s look at another example, the 4th token in the sequence, where target_padding_mask = [T, T, T, F, F] and future_mask is a lower triangular matrix of Trues.

4th token’s target_padding_mask: The 4th token has a value of F in target_padding_mask, indicating it’s a padding token.

4th row of future_mask: The 4th row in future_mask is [True, True, True, True, False]. This means that if this token were not a padding token, it would be allowed to attend to all the previous tokens in the sequence and itself, but not to any future token.

Broadcast target_padding_mask: After broadcasting, the 4th row of target_padding_mask is the same [T, T, T, F, F] as every other row, because the padding mask describes which positions may be attended to, not which token is attending.

Element-wise AND with future_mask: The element-wise AND between [T, T, T, F, F] and [True, True, True, True, False] results in [T, T, T, F, F], which is the 4th row of the combined mask printed above.

Interpretation: No token attends to the 4th position, since the 4th column of the combined mask is False in every row. The 4th token’s own row is not all False, so as an attending token it still looks at the three real tokens; this is typically harmless provided the outputs at padded positions are ignored when computing the loss.

So, the masks are doing their jobs correctly: the target_padding_mask

indicates whether each token is a padding token or not, and future_mask

dictates the “rules” of attention regarding what each token can attend to.

Combining them ensures that both conditions are met.

Further Add a Singleton Dimension in Target Masks#

Now both masks are of shape (B, L, L), but we need to add a singleton

dimension at index 1, right after the batch dimension, to make them (B, 1, L, L).

In deep learning frameworks like PyTorch, the dimensions of the tensors involved in operations like matrix multiplication or attention mechanisms often have specific semantic meanings. In the context of attention mechanisms, especially in the transformer architecture, the attention mask usually has a shape that is compatible with the attention logits for element-wise multiplication.

In the transformer model, the attention logits are often computed as a dot

product between query and key vectors, resulting in a tensor of shape

(Batch size, Num heads, Sequence length, Sequence length) or (B, H, L, L).

Here, B is the batch size, H is the number of attention heads, and L is

the sequence length.

To make the mask tensor compatible for element-wise operations with this 4D

tensor, it needs to have a shape that can be broadcasted to (B, H, L, L). A

mask of shape (B, 1, L, L) fulfills this requirement.

The singleton dimension is added so that the mask can be easily broadcast to the

shape of the attention logits tensor during the computation. When a tensor with

shape (B, 1, L, L) is element-wise multiplied with a tensor of shape

(B, H, L, L), the singleton dimension (the 1) allows the mask to be used for

each attention head without explicitly replicating the mask H times. This is

more memory-efficient and often faster.

Thus, adding a singleton dimension in masks is a preparatory step that allows for efficient element-wise operations later in the model’s forward pass.

target_padding_mask = target_padding_mask.unsqueeze(1)

pprint(target_padding_mask.shape)

future_mask = future_mask.unsqueeze(1)

pprint(future_mask.shape)

target_mask = target_padding_mask & future_mask

pprint(target_mask.shape)

torch.Size([4, 1, 5, 5])

torch.Size([4, 1, 5, 5])

torch.Size([4, 1, 5, 5])
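To see why the singleton dimension is enough, here is a small sketch showing a (B, 1, L, L) mask broadcasting over H heads. The sizes and random scores are hypothetical, and `masked_fill` is used as one common way to apply a boolean mask; the attention module in this notebook may apply the mask differently.

```python
import torch

torch.manual_seed(0)
B, H, L = 2, 4, 5  # hypothetical batch size, number of heads, sequence length

# Attention logits for every head: shape (B, H, L, L)
scores = torch.randn(B, H, L, L)

# A future mask with a singleton head dimension: shape (B, 1, L, L)
mask = torch.tril(torch.ones(L, L, dtype=torch.bool)).view(1, 1, L, L).expand(B, 1, L, L)

# Broadcasting reuses the same mask for all H heads without copying it H times
masked_scores = scores.masked_fill(~mask, float("-inf"))
attention = torch.softmax(masked_scores, dim=-1)

print(masked_scores.shape)  # torch.Size([2, 4, 5, 5])
```

Positions set to negative infinity receive zero weight after the softmax, so every head ignores the same masked positions.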

Why mask our target in Adder?#

If you see the source code of how the AdderDataset is constructed, you will

see that we masked out all the tokens before (and including) the equal sign.

For example, if our sequence is 12+97=109, the input sequence will be

tokenized to the following:

input = [BOS, 1, 2, +, 9, 7, =, 1, 0, 9]

target = [1, 2, +, 9, 7, =, 1, 0, 9, EOS]

What our code below does is to mask out the tokens before the equal sign for the target sequence.

target = [MASK, MASK, MASK, MASK, MASK, MASK, 1, 0, 9, EOS]

def construct_target_tensor(self, input_sequence: torch.Tensor) -> torch.LongTensor:
    target = input_sequence.clone()
    where_equal_index = torch.where(input_sequence == self.equal_token_id)[0].item()
    where_equal_index = int(where_equal_index)  # to appease mypy lol
    target[: where_equal_index + 1] = self.pad_token_id
    return torch.LongTensor(target[1:])

Simply put, we do not care what the model predicts for anything before the equal sign. By masking out (or ignoring) the tokens up to and including the =, we are asking the model to “focus” on generating the correct answer after the equal sign.
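The masking step can also be sketched without tensors. The snippet below is a simplified illustration using plain Python lists and string tokens; `mask_target` and the `"MASK"` placeholder are hypothetical names, whereas the dataset code above writes `pad_token_id` into those positions.

```python
from typing import List


def mask_target(sequence: List[str], equal_token: str = "=", mask_token: str = "MASK") -> List[str]:
    """Return the shifted target with everything up to and including '=' masked."""
    equal_index = sequence.index(equal_token)
    target = list(sequence)
    # Mask BOS, both operands, the plus sign and the equal sign
    target[: equal_index + 1] = [mask_token] * (equal_index + 1)
    # Drop the first position so that target[i] is the token that follows input[i]
    return target[1:]


full_sequence = ["BOS", "1", "2", "+", "9", "7", "=", "1", "0", "9", "EOS"]
model_input = full_sequence[:-1]
target = mask_target(full_sequence)
print(model_input)
print(target)
```

One common way to exclude the masked positions from the loss is the `ignore_index` argument of `torch.nn.CrossEntropyLoss`, set to the id used for masking.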

Split to Train-Valid-Test#

batch_size = 256

composer.data.train_loader["batch_size"] = batch_size

composer.data.valid_loader["batch_size"] = batch_size

composer.data.test_loader["batch_size"] = batch_size

train_dataset, valid_dataset, test_dataset = split_dataset(

dataset=dataset, split=composer.data.split, seed=composer.global_.seed

)

train_size, valid_size, test_size = len(train_dataset), len(valid_dataset), len(test_dataset)

train_size, valid_size, test_size

(7000, 2000, 1000)

# max_seq_len is determined by 1+ num_digits + 1 + num_digits + 1 + num_digits + 1 + 1

# where the 1s represent BOS, Plus sign, Equal sign, the extra digit in the sum, EOS, respectively.

max_seq_len = 1 + 1 + 1 + 1 + 2 * composer.constants.NUM_DIGITS + (composer.constants.NUM_DIGITS + 1)

assert max_seq_len == composer.data.context_length

Create DataLoader#

train_loader = create_loader(

dataset=train_dataset,

loader_config=composer.data.train_loader,

collate_fn_config=composer.data.collate_fn,

)

valid_loader = create_loader(

dataset=valid_dataset,

loader_config=composer.data.valid_loader,

collate_fn_config=composer.data.collate_fn,

)

test_loader = create_loader(

dataset=test_dataset,

loader_config=composer.data.test_loader,

collate_fn_config=composer.data.collate_fn,

)

The collate_fn defines how to combine samples into a batch. For variable-length samples this usually involves padding the sequences in the batch to a common length, typically the length of the longest sequence in the batch. Note that the padding in collate is “redundant” here, since our earlier code already ensured that all samples have the same number of characters by padding with leading zeros. For example, 23+3=26 becomes 23+03=026. Consequently, all samples in a mini-batch have the same length by construction.
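A minimal sketch of this zero-padding, assuming two-digit operands; `format_equation` is a hypothetical helper written for illustration and is not the notebook's dataset code:

```python
from typing import List


def format_equation(a: int, b: int, num_digits: int = 2) -> str:
    """Render a + b with zero-padded operands and a zero-padded sum."""
    # The sum of two num_digits-digit numbers has at most num_digits + 1 digits
    return f"{a:0{num_digits}d}+{b:0{num_digits}d}={a + b:0{num_digits + 1}d}"


samples: List[str] = [format_equation(23, 3), format_equation(99, 99), format_equation(0, 0)]
print(samples)

# Every equation has the same number of characters, so collate-time padding is a no-op
lengths = {len(sample) for sample in samples}
print(lengths)
```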

torch.manual_seed(composer.global_.seed)

batch_index = 0

for batch in train_loader:
    # Each batch is a tuple containing all elements for the batch
    inputs_padded, targets_padded, padding_masks_padded_and_expanded, future_masks_expanded = batch

    # Print the length of each component in the batch
    print("Batch Size:", len(inputs_padded))

    # Now you can print shapes or other properties of each batch element
    print("Inputs Shape:", inputs_padded.shape)
    print("Targets Shape:", targets_padded.shape)

    # Decoding and other processing can be done here
    # For example, decoding the first sequence in the batch
    print("Decoded First Equation/Sample of the Batch:", decode_equation(vocabulary, inputs_padded[0].tolist()))
    print("-" * 80)

    batch_index += 1
    if batch_index == 4:
        break

Batch Size: 256

Inputs Shape: torch.Size([256, 10])

Targets Shape: torch.Size([256, 10])

Decoded First Equation/Sample of the Batch: 31+04=035

--------------------------------------------------------------------------------

Batch Size: 256

Inputs Shape: torch.Size([256, 10])

Targets Shape: torch.Size([256, 10])

Decoded First Equation/Sample of the Batch: 37+49=086

--------------------------------------------------------------------------------

Batch Size: 256

Inputs Shape: torch.Size([256, 10])

Targets Shape: torch.Size([256, 10])

Decoded First Equation/Sample of the Batch: 47+26=073

--------------------------------------------------------------------------------

Batch Size: 256

Inputs Shape: torch.Size([256, 10])

Targets Shape: torch.Size([256, 10])

Decoded First Equation/Sample of the Batch: 53+05=058

--------------------------------------------------------------------------------

Model#

We have gone into extensive detail on the implementation of the decoder in the implementation section. We will not repeat the concepts here; instead, we simply build the model from its configurations.

# Create individual component configurations

masked_self_attention_mha_config = MultiHeadedAttentionConfig(

attention=ScaledDotProductAttention(), d_model=128, H=4, dropout=0.1

)

feed_forward_config = PositionwiseFeedForwardConfig(

d_model=128, d_ff=256, activation=nn.GELU(approximate="tanh"), dropout=0.1, bias=True

)

add_norm_config_1 = AddNormConfig(feature_dim=128, dropout=0.1)

add_norm_config_2 = AddNormConfig(feature_dim=128, dropout=0.1)

# Create DecoderBlockConfig

decoder_block_config = DecoderBlockConfig(

masked_self_attention_mha=masked_self_attention_mha_config,

feed_forward=feed_forward_config,

add_norm_1=add_norm_config_1,

add_norm_2=add_norm_config_2,

)

# Create the overall DecoderConfig

model_config = DecoderConfig(

d_model=128,

vocab_size=vocab_size,

context_length=max_seq_len,

num_decoder_blocks=2,

dropout=0.1,

decoder_block=decoder_block_config,

)

model = GPTDecoder(model_config)

model = model.to(device=composer.trainer.device, dtype=next(model.parameters()).dtype, non_blocking=True)

model_size = model.total_trainable_parameters

print(f"model_size: {model_size}, train_set_size: {train_size}")

composer.model = model_config

model_size: 270226, train_set_size: 7000

Training Paradigm#

Here, we list some of the training paradigms that we use in this project.

Optimizer#

We start off by defining the optimizer for GPT-2. A common choice is Adam [Kingma and Ba, 2014] or AdamW [Loshchilov and Hutter, 2017]. We conveniently take the configuration provided in Karpathy’s nanoGPT.

Furthermore, we briefly mention that Karpathy applies weight decay to different parameter groups, which is quite a common practice. As we can see from the code below, we define whitelisted modules, whose weights receive weight decay, and blacklisted modules, whose weights do not. The whitelisted module is nn.Linear and the blacklisted modules are nn.LayerNorm and nn.Embedding.

Weight decay, which is closely related to L2 regularization, penalizes the square of the weights, encouraging smaller weight values. This can lead to a “spreading out” effect, as it discourages the model from relying too heavily on a small number of input features, promoting a more even distribution of weight values and, by extension, a more balanced consideration of input dimensions. This regularization technique is particularly beneficial for layers that perform matrix multiplication, as it helps ensure that the model uses a broader range of input features rather than becoming overly dependent on a few dominant ones. We can find more intuition in the discussions “Why not perform weight decay on layernorm/embedding?”, “Weight decay in the optimizers is a bad idea (especially with BatchNorm)” and “Weight decay exclusions (Karpathy)”.
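For intuition, the link between an L2 penalty and a shrinking update can be written out explicitly. The derivation below assumes plain gradient descent with learning rate \(\eta\) and penalty strength \(\lambda\), which is a simplification made here; with adaptive optimizers such as Adam the two notions are no longer equivalent, which is the motivation behind AdamW.

```latex
\tilde{\mathcal{L}}(\boldsymbol{\theta})
  = \mathcal{L}(\boldsymbol{\theta}) + \frac{\lambda}{2} \lVert \boldsymbol{\theta} \rVert_2^2
\quad \Longrightarrow \quad
\boldsymbol{\theta}_{t+1}
  = \boldsymbol{\theta}_t - \eta \nabla \mathcal{L}(\boldsymbol{\theta}_t) - \eta \lambda \boldsymbol{\theta}_t
  = (1 - \eta \lambda)\, \boldsymbol{\theta}_t - \eta \nabla \mathcal{L}(\boldsymbol{\theta}_t)
```

Each step therefore multiplies the weights by \(1 - \eta \lambda\) before applying the usual gradient step, which is where the name “weight decay” comes from.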

from typing import Dict, List, Literal, Set, Tuple, Type

# NOTE: `LayerNorm` (without the `nn.` prefix) is the custom layer norm class used by the model.
def apply_weight_decay_to_different_param_groups(
    model: nn.Module, weight_decay: float
) -> List[Dict[Literal["params", "weight_decay"], List[torch.nn.Parameter] | float]]:
    decay: Set[str] = set()
    no_decay: Set[str] = set()
    whitelist_weight_modules: Tuple[Type[nn.Module], ...] = (nn.Linear,)
    blacklist_weight_modules: Tuple[Type[nn.Module], ...] = (nn.LayerNorm, nn.Embedding, LayerNorm)

    for module_name, module in model.named_modules():
        for parameter_name, _parameter in module.named_parameters():
            full_parameter_name = f"{module_name}.{parameter_name}" if module_name else parameter_name
            if parameter_name.endswith("bias"):
                # biases of all modules are not decayed
                no_decay.add(full_parameter_name)
            elif parameter_name.endswith("weight") and isinstance(module, whitelist_weight_modules):
                # weights of whitelisted modules are decayed
                decay.add(full_parameter_name)
            elif parameter_name.endswith("in_proj_weight"):
                # MHA projection layer, does not exist in my implementation
                decay.add(full_parameter_name)
            elif parameter_name.endswith("weight") and isinstance(module, blacklist_weight_modules):
                # weights of blacklisted modules are not decayed
                no_decay.add(full_parameter_name)
            elif (parameter_name.endswith("gamma") or parameter_name.endswith("beta")) and isinstance(
                module, LayerNorm
            ):
                # weights of LayerNorm modules are not decayed
                # NOTE: this branch is needed because my custom LayerNorm names its
                # "weight" and "bias" attributes gamma and beta, respectively.
                no_decay.add(full_parameter_name)
            elif parameter_name.endswith("pos_embed"):
                no_decay.add(full_parameter_name)

    param_dict = {parameter_name: parameter for parameter_name, parameter in model.named_parameters()}  # noqa: C416
    inter_params = decay & no_decay
    union_params = decay | no_decay
    assert not inter_params, f"Parameters {inter_params} are in both decay and no_decay sets."
    assert not (
        param_dict.keys() - union_params
    ), f"Parameters {param_dict.keys() - union_params} were not categorized."

    optim_groups: List[Dict[Literal["params", "weight_decay"], List[torch.nn.Parameter] | float]] = [
        {"params": [param_dict[parameter_name] for parameter_name in sorted(decay)], "weight_decay": weight_decay},
        {"params": [param_dict[parameter_name] for parameter_name in sorted(no_decay)], "weight_decay": 0.0},
    ]

    return optim_groups

We won’t go into too much technical rigour on the optimizer, but note that more modern variations exist, for instance DecoupledAdamW, which further decouples the weight decay term \(\lambda\) from the learning rate, as well as RAdam [Liu et al., 2019], which is intended to address bias correction factors leading to higher variance in the adaptive learning rate during the initial training iterations.

To this end, we create the optimizer in code as follows, noting that we do not use the exact same configuration as Karpathy, but rather what is deemed fit for the case at hand.

pprint(composer.optimizer)

optimizer_config_cls = OPTIMIZER_REGISTRY[composer.optimizer.name]

optimizer_pydantic_config = optimizer_config_cls(**composer.optimizer.model_dump(mode="python"))

pprint(optimizer_pydantic_config)

AdamConfig(name='torch.optim.Adam', lr=0.2, betas=(0.9, 0.98), eps=1e-09, weight_decay=0.0)

AdamConfig(name='torch.optim.Adam', lr=0.2, betas=(0.9, 0.98), eps=1e-09, weight_decay=0.0)

assert hasattr(composer.optimizer, "weight_decay")

optimizer = optimizer_pydantic_config.build(

params=apply_weight_decay_to_different_param_groups(model=model, weight_decay=composer.optimizer.weight_decay)

)

pprint(optimizer)

Adam (
Parameter Group 0
    amsgrad: False
    betas: (0.9, 0.98)
    capturable: False
    differentiable: False
    eps: 1e-09
    foreach: None
    fused: None
    lr: 0.2
    maximize: False
    weight_decay: 0.0

Parameter Group 1
    amsgrad: False
    betas: (0.9, 0.98)
    capturable: False
    differentiable: False
    eps: 1e-09
    foreach: None
    fused: None
    lr: 0.2
    maximize: False
    weight_decay: 0.0
)

Learning Rate Scheduler#

Motivation#

In training deep neural networks, the learning rate is one of the most important hyperparameters to tune. Optimization algorithms like Adam and SGD tell us how the weights \(\boldsymbol{\theta} \in \boldsymbol{\Theta}\) should be updated, while the learning rate \(\eta\) determines the rate at which the weights are updated.

Theoretically and empirically, the magnitude of the learning rate \(\eta\) can have a significant impact on the training process. If the learning rate is too large, training may diverge; if it is too small, the model may take much longer to converge or get stuck in a local minimum. The condition number of the problem also impacts optimization efficiency, as discussed in the momentum section, where the concept can be understood as the ratio between the smallest and largest changes possible in response to adjustments in different directions of the parameter space, reflecting the variance in sensitivity across these directions[^1] [Zhang et al., 2023]. As training progresses, it is equally important to apply a learning rate scheduler that adjusts the learning rate over time (not necessarily by monotonic decay).

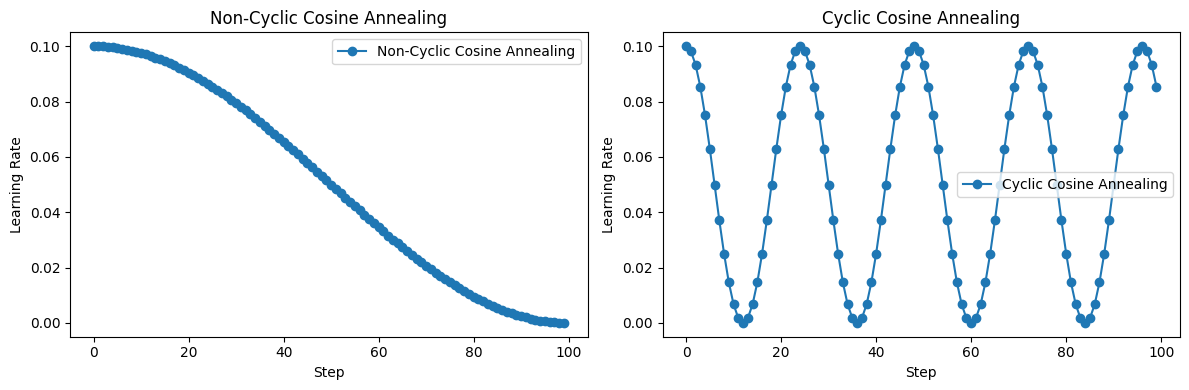

In the paper SGDR: Stochastic Gradient Descent with Warm Restarts, Loshchilov and Hutter introduced a heuristic that relies on the empirical observation that we can improve the convergence of the model (usually in ill-conditioned situations) if we follow an annealing process over the learning rate. This means that at the beginning of training, we do not want to decrease the learning rate too drastically. My (potentially wrong) intuition is that this allows the model to explore a larger region of the parameter space with fewer constraints than if we were to decrease the learning rate rapidly. The authors further claim that towards the end of training, we would want to “fine-tune” the model parameters with a very small learning rate, as it could help “refine” the solution and find a “more optimal” set of parameters [Loshchilov and Hutter, 2016]. This idea leads naturally to the cosine function: the cosine curve starts with a gentle slope, which matches the gradual decrease in learning rate at the beginning, and it flattens as it approaches its minimum at the end of the half-cycle, which matches the idea of fine-tuning the model parameters with a very small learning rate.

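Consequently, a cosine decaying scheduler has the following functional form for learning rates in the range \(t \in [0, T]\):

\[
\eta_t = \eta_T + \frac{\eta_0 - \eta_T}{2} \left(1 + \cos\left(\frac{\pi t}{T}\right)\right)
\]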

Here \(\eta_0\) is the initial learning rate, \(\eta_T\) is the target rate at time \(T\). Furthermore, for \(t>T\) we simply pin the value to \(\eta_T\) without increasing it again. \(T\) represents the end of the learning rate annealing phase rather than the absolute end of training. It’s the point in time when the learning rate reaches \(\eta_T\), the target rate, and beyond which the learning rate is maintained constant at \(\eta_T\).

During \(0 \leq t < T\): The learning rate \(\eta_t\) is actively adjusted according to the cosine annealing formula. It transitions from the initial learning rate \(\eta_0\) towards the target rate \(\eta_T\), following a half-cosine wave.

For \(t \geq T\): The learning rate is set to \(\eta_T\) and no longer changes. This doesn’t necessarily mean that training must stop at \(t = T\). Training can continue beyond \(T\) with the learning rate fixed at \(\eta_T\).
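The piecewise behaviour described above can be sketched in a few lines of plain Python. This is an illustrative helper, not the scheduler used later in this notebook: `cosine_decay` is a hypothetical name, and the half-cosine form \(\eta_t = \eta_T + \frac{\eta_0 - \eta_T}{2}(1 + \cos(\pi t / T))\) is assumed.

```python
import math
from typing import List


def cosine_decay(t: int, T: int, eta_0: float, eta_T: float) -> float:
    """Half-cosine decay from eta_0 at t=0 to eta_T at t=T, held constant afterwards."""
    if t >= T:
        # Past the annealing phase the rate is pinned to the target value
        return eta_T
    return eta_T + (eta_0 - eta_T) / 2 * (1 + math.cos(math.pi * t / T))


# Anneal over 10 steps, then keep training for 5 more steps at the target rate
schedule: List[float] = [cosine_decay(t, T=10, eta_0=0.1, eta_T=0.01) for t in range(15)]
print([round(lr, 4) for lr in schedule])
```

Unlike a scheduler that keeps following the cosine past \(T\), this sketch never raises the learning rate again once \(\eta_T\) is reached.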

In code, we can observe the behavior of the cosine annealing scheduler as follows:

from __future__ import annotations

from typing import Any, List

import matplotlib.pyplot as plt
import torch
from torch.optim import Optimizer
from torch.optim.lr_scheduler import CosineAnnealingLR, _LRScheduler


def get_learning_rates(optimizer: Optimizer, scheduler: _LRScheduler, steps: int) -> List[float]:
    lrs = []
    for _ in range(steps):
        lrs.append(optimizer.param_groups[0]["lr"])
        optimizer.step()
        scheduler.step()
    return lrs


def plot_learning_rates(
    lrs: List[float], title: str, marker: str = "o", ax: plt.Axes | None = None, **kwargs: Any
) -> None:
    ax = ax or plt.gca()
    ax.plot(lrs, label=title, marker=marker, **kwargs)
    ax.set_title(title)
    ax.set_xlabel("Step")
    ax.set_ylabel("Learning Rate")
    ax.legend()


def main() -> None:
    initial_lr = 0.1
    eta_min = 0
    steps = 100
    model = torch.nn.Linear(2, 1)

    optimizer = torch.optim.SGD(model.parameters(), lr=initial_lr)
    scheduler_non_cyclic = CosineAnnealingLR(optimizer, T_max=steps, eta_min=eta_min)
    lrs_non_cyclic = get_learning_rates(optimizer, scheduler_non_cyclic, steps)

    optimizer = torch.optim.SGD(model.parameters(), lr=initial_lr)
    scheduler_cyclic = CosineAnnealingLR(optimizer, T_max=steps // 8, eta_min=eta_min)
    lrs_cyclic = get_learning_rates(optimizer, scheduler_cyclic, steps)

    # Plotting
    fig, axes = plt.subplots(1, 2, figsize=(12, 4))
    plot_learning_rates(lrs_non_cyclic, "Non-Cyclic Cosine Annealing", ax=axes[0])
    plot_learning_rates(lrs_cyclic, "Cyclic Cosine Annealing", ax=axes[1])
    plt.tight_layout()
    plt.show()


main()

Warmup#

Our motivation would have ended here, but in practice the cosine annealing scheduler is often combined with a warmup phase. In Fig. 18, we can see that the loss curve with warmup is relatively smooth and converges much better than the ones without warmup.

Fig. 4 Training loss v.s. # of iterations of Transformers on the De-En IWSLT’14 dataset.#

Image Credit: ON THE VARIANCE OF THE ADAPTIVE LEARNING RATE AND BEYOND

It might be worth having some intuition on why warmup works so well in practice, and in particular, in language models like Transformers.

Firstly, the RAdam paper suggests warmup works as a variance reduction technique, which overcomes the problem of bias correction factors in optimizers like Adam, where having these bias correction factors would lead to larger variance in the adaptive learning rate during the initial training iterations [Lippe, 2023]. More concretely, Adam estimates the first and second moments of the gradient to change the learning rate of each individual parameter (hence adaptive), and having high variance between adaptive learning rates may destabilize the training. If we don’t want to swap out Adam, then this calls for a warmup phase to stabilize the learning rate and reduce the variance in the early stages of training.

Secondly, language models like Transformers use iteratively applied Layer Normalization across layers, which can lead to very high gradients during the first iterations. This can be addressed by using Pre-Layer Normalization (similar to Pre-Activation ResNet), which applies normalization before the layer’s main operations, contributing to gradient stabilization and reducing the need for a warmup phase, or by replacing Layer Normalization with other techniques (Adaptive Normalization, Power Normalization) [Lippe, 2023].

However, even though there are solutions to the problem, certain setups still use the Adam optimizer, and therefore warmup is still a simple and effective technique to stabilize the learning rate in the early stages of training, addressing the aforementioned problems (i.e. stabilizing the bias correction factors and the moving averages of gradients and squared gradients).

To this end, we end our discussion on the motivation behind 1) using cosine annealing schedulers and 2) using warmup phases, often coupled with cosine annealing schedulers. In what follows, we will provide a more formal definition of the cosine annealing scheduler with warmup, and provide a running example to illustrate the behavior of the scheduler.

Definition#

The CosineAnnealingWithWarmupScheduler decays the learning rate \(\eta\)

according to the decreasing part of a cosine curve, with an initial warmup

\(t_{\text{warmup}}\).

This scheduler modulates \(\eta\) within defined upper and lower bounds over a predetermined interval, employing a cosine function. The formula for cosine annealing reflects the shape of a half-cosine wave, which decreases from a maximum value to a minimum; over a full cosine cycle the value would then increase back to the maximum. Such a cycle can repeat multiple times over the training process, depending on how the scheduler is configured. Although this suggests cyclic adjustments (oscillations) within the training duration, for simplicity’s sake our specific implementation, inspired by MosaicML’s Composer’s CosineAnnealingWithWarmupScheduler, explicitly excludes such cycles/oscillations.

Definition 17 (Cosine Annealing With Warmup)

The CosineAnnealingWithWarmupScheduler modulates the learning rate \(\eta\)

according to a two-phase process: a warmup phase followed by a

cosine annealing phase. The learning rate multiplier[^lr-multiplier]

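\(\alpha_{t}\) at any given time (step) \(t\) is given by:

\[
\alpha_{t} = \begin{cases}
\dfrac{t}{t_{\text{warmup}}}, & \text{if } t < t_{\text{warmup}} \\
\alpha_f + (1 - \alpha_f) \times \dfrac{1}{2} \left(1 + \cos\left(\pi \times \tau_w\right)\right), & \text{otherwise}
\end{cases}
\]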

where we denote:

\(t\) represents the current training step or epoch.

\(\eta_{\max}\) as the maximum learning rate reached during training, and often is the initial learning rate given into an optimizer.

\(t_{\text{warmup}}\) denotes the duration of the warmup period, in terms of the number of steps or epochs, during which the learning rate linearly increases to the maximum learning rate \(\eta_{\max}\).

\(t_{\max}\) as the maximum number of training steps, or maximum number of iterations in an epoch (see here).

\(\tau_w = \frac{t - t_{\text{warmup}}}{t_{\max}}\), the fraction of post-warmup time elapsed,

\(\alpha_f\) is a scaling factor that determines the final learning rate multiplier to decay to (a value between \(0\) and \(1\)), and this is a fixed value. For example, if \(\alpha_f = 0.1\) and the initial learning rate is \(\eta_{\max} = 3e-4\), then the final learning rate will be \(\eta_{\min} = 3e-4 \times 0.1 = 3e-5\).

The actual learning rate \(\eta_{t}\) at time (step) \(t\) is then computed as:

\[
\eta_{t} = \alpha_{t} \times \eta_{\max}
\]

where we emphasize again that \(\eta_{\max}\) is the maximum learning rate reached during training.

A Word on Oscillations

Note that if you set \(t_{\max}\) to the total number of training steps needed for the entire dataset \(\mathcal{S}\), the scheduler only decays the learning rate after the warmup phase and does not oscillate. After completing the linear increase during warmup, the learning rate decreases along a cosine curve toward the final learning rate determined by \(\alpha_f\).

Single Cycle (No Oscillation): If \(t_{\max}\) is set so that the post-warmup phase spans exactly one half-cycle of the cosine function, the learning rate decreases monotonically from its maximum value (at the end of warmup) to its minimum value (as determined by \(\alpha_f\)) without oscillating. This is because the scheduler's active period covers only the descending half of the cosine wave.

Multiple Cycles (Oscillation): If training continues beyond that half-cycle, so that \(\tau_w\) exceeds \(1\), an unclamped cosine function completes its descent and then begins to ascend as part of a new cycle. The learning rate then oscillates: after decreasing, it increases again, potentially multiple times, depending on how many cycles fit within the training run. The implementation in this section avoids this by capping \(\tau_w\) at \(1\), so the multiplier stays at \(\alpha_f\) once the descent is complete.

True oscillation, where the learning rate decreases and then increases within a training regime, typically requires either a restart mechanism (as seen in Cosine Annealing with Warm Restarts) or an explicit multi-cycle configuration. A standard cosine annealing scheduler, especially with a warmup phase, generally only supports a monotonic decrease within a single cycle, unless it is specifically designed to handle restarts or multiple cycles.
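The difference between a single descent and an oscillating schedule can be seen by evaluating the cosine term with and without a cap on \(\tau_w\). This is a small sketch under assumed values (\(\alpha_f = 0.1\)); `cosine_multiplier` is a hypothetical helper written for this illustration.

```python
import math


def cosine_multiplier(tau_w: float, alpha_f: float, clamp: bool) -> float:
    """Hypothetical helper: cosine-phase multiplier, optionally capping tau_w at 1."""
    if clamp:
        tau_w = min(1.0, tau_w)
    return alpha_f + (1 - alpha_f) * 0.5 * (1 + math.cos(math.pi * tau_w))


alpha_f = 0.1  # assumed final multiplier
for tau_w in (0.0, 0.5, 1.0, 1.5, 2.0):
    clamped = cosine_multiplier(tau_w, alpha_f, clamp=True)
    unclamped = cosine_multiplier(tau_w, alpha_f, clamp=False)
    print(f"tau_w={tau_w:.1f}  clamped={clamped:.3f}  unclamped={unclamped:.3f}")
```

Past \(\tau_w = 1\) the clamped multiplier stays at \(\alpha_f\), while the unclamped one climbs back towards \(1\), which is the oscillation described above.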

Implementation#

from __future__ import annotations

import math
from functools import partial

from torch.optim.lr_scheduler import LambdaLR
from torch.optim.optimizer import Optimizer


def _get_cosine_schedule_with_warmup_lr_lambda(
    current_step: int, *, num_warmup_steps: int, num_training_steps: int, alpha_f: float
) -> float:
    """
    Helper function for calculating the learning rate multiplier using cosine
    annealing with warmup.

    Parameters
    ----------
    current_step: int
        The current step in the training process.
    num_warmup_steps: int
        The number of steps for the warmup phase.
    num_training_steps: int
        The total number of training steps.
    alpha_f: float
        The final learning rate multiplier to decay to.

    Returns
    -------
    float
        The learning rate multiplier for the current step.
    """
    if current_step < num_warmup_steps:
        # Warmup phase: linear increase of the multiplier from 0 towards 1.
        alpha = current_step / max(1, num_warmup_steps)
    else:
        # Annealing phase: fraction of post-warmup time elapsed, capped at 1
        # so that the multiplier never rises again after reaching alpha_f.
        tau_w = (current_step - num_warmup_steps) / num_training_steps
        tau_w = min(1.0, tau_w)
        alpha = alpha_f + (1 - alpha_f) * (1 + math.cos(math.pi * tau_w)) / 2
    return alpha

def get_cosine_annealing_with_warmup(
    optimizer: Optimizer,
    num_warmup_steps: int,
    num_training_steps: int,
    alpha_f: float = 0.1,
    last_epoch: int = -1,
    verbose: bool = False,
) -> LambdaLR:
    """
    Create a schedule with a learning rate that decreases following the values
    of the cosine function from the initial lr set in the optimizer to
    `alpha_f` times that lr, after a warmup period during which it increases
    linearly between 0 and the initial lr set in the optimizer.

    Parameters
    ----------
    optimizer: `~torch.optim.Optimizer`
        The optimizer for which to schedule the learning rate.
    num_warmup_steps: int
        The number of steps for the warmup phase.
    num_training_steps: int
        The total number of training steps.
    alpha_f: float
        The final learning rate multiplier to decay to, by default 0.1.
    last_epoch: int
        The index of the last epoch when resuming training, by default -1.
    verbose: bool
        Whether to print the learning rate at every update, by default False.

    Returns
    -------
    `torch.optim.lr_scheduler.LambdaLR`
        The scheduler with the appropriate schedule.

    Examples
    --------
    >>> from torch import nn
    >>> from torch.optim import Adam
    >>> dummy_model = nn.Linear(1, 1)
    >>> optimizer = Adam(dummy_model.parameters(), lr=3e-4)
    >>> scheduler = get_cosine_annealing_with_warmup(optimizer, num_warmup_steps=5, num_training_steps=10, alpha_f=0.5)
    >>> assert isinstance(scheduler, LambdaLR)
    """
    # Bind the schedule hyperparameters so that LambdaLR only passes the step.
    lr_lambda = partial(
        _get_cosine_schedule_with_warmup_lr_lambda,
        num_warmup_steps=num_warmup_steps,
        num_training_steps=num_training_steps,
        alpha_f=alpha_f,
    )
    # NOTE: `verbose` is deprecated in recent PyTorch releases.
    return LambdaLR(optimizer, lr_lambda, last_epoch, verbose)

num_warmup_steps = 3 * len(train_loader)
num_training_steps = composer.trainer.max_epochs * (len(train_dataset) // composer.data.train_loader["batch_size"])
alpha_f = 1  # as if no decay

scheduler = get_cosine_annealing_with_warmup(
    optimizer, num_warmup_steps=num_warmup_steps, num_training_steps=num_training_steps, alpha_f=alpha_f
)

from omnivault.transformer.core.scheduler import noam_lr_decay

warmup_steps = 3 * len(train_loader)

# lr first increases in the warmup steps, and then decays
noam = lambda step: noam_lr_decay(step, d_model=128, warmup_steps=warmup_steps)  # noqa: E731

scheduler_config_cls = SCHEDULER_REGISTRY[cfg.scheduler.name]

if issubclass(scheduler_config_cls, LambdaLRConfig):
    scheduler_pydantic_config = scheduler_config_cls(lr_lambda=noam, **cfg.scheduler)
else:
    scheduler_pydantic_config = scheduler_config_cls(**cfg.scheduler)  # type: ignore[assignment]

composer.scheduler = scheduler_pydantic_config
scheduler = scheduler_pydantic_config.build(optimizer=optimizer)
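For intuition about the "increase during warmup, then decay" behaviour of the Noam schedule, the sketch below uses the commonly cited formula from the original Transformer paper, \(d_{\text{model}}^{-0.5} \cdot \min(\text{step}^{-0.5}, \text{step} \cdot \text{warmup}^{-1.5})\). This is an assumption for illustration: `noam_multiplier_sketch` is a hypothetical stand-in, and the actual `noam_lr_decay` in omnivault may differ in details such as how step 0 is handled.

```python
def noam_multiplier_sketch(step: int, d_model: int, warmup_steps: int) -> float:
    """Hypothetical stand-in for a Noam-style multiplier (not the omnivault code)."""
    step = max(step, 1)  # assumed guard against 0 ** -0.5 at the first step
    return d_model**-0.5 * min(step**-0.5, step * warmup_steps**-1.5)


d_model, warmup_steps = 128, 100  # assumed illustrative values
for step in (1, 50, 100, 400, 1600):
    print(f"step={step:>4}  multiplier={noam_multiplier_sketch(step, d_model, warmup_steps):.6f}")
```

Under this formula the multiplier grows linearly up to `warmup_steps`, peaks there, and then decays in proportion to the inverse square root of the step.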

Criterion#

The Cross Entropy Loss function measures the difference between two probability distributions: the predicted distribution, obtained by applying softmax to the logits output by the model, and the actual distribution given by the target labels. It is primarily used in classification tasks involving \(C\) classes. We use the following notation:

\(\mathcal{B}\) : Denotes batch size,

\(K\) : The number of additional dimensions beyond batch and class, representing spatial or other feature dimensions in the input tensor,

\(N=\mathcal{B} \times d_1 \times \ldots \times d_K\) : Total count of individual elements across all dimensions, including batch and spatial dimensions. This value adjusts as per the dimensional complexity:

For \(K=0, N=\mathcal{B}\),

For \(K=1, N=\mathcal{B} \times d_1\),

For \(K>1, N=\mathcal{B} \times d_1 \times \ldots \times d_K\).

\(C\) : The total number of classification categories,

\(x\) : Represents the input logits tensor,

\(y\) : Denotes the target tensor,

\(w\) : An optional tensor assigning weights to each class,

\(\mathcal{L}\) : Symbolizes the aggregate loss prior to any reduction,

\(l_n\) : The loss corresponding to the \(n\) th element, ranging over \(n=1\) to \(N\) (for \(K=0\), this is one loss per batch element).

Inputs and Targets#

Inputs (Logits): The function expects unnormalized logits for each class per input. These logits do not necessarily need to be positive values nor sum to 1. The shape of the input tensor can be:

For unbatched input: \((C)\),

For batched input: \((\mathcal{B}, C)\),

For \(K\)-dimensional input: \((\mathcal{B}, C, d_1, d_2, \ldots, d_K)\), suitable for tasks like pixel-wise classification in images where \(K \geq 1\).

Targets: When configuring the targets for the Cross Entropy Loss function, their expected shapes vary based on the nature of the targets (class indices vs. probabilities) and the dimensionality of the input:

For Class Indices as Targets:

Unbatched input: The shape should be a scalar representing a single class index in \([0, C)\).

Batched input: The shape should be \((\mathcal{B},)\), where each element is a class index for the corresponding input in the batch.

\(K\)-dimensional input: The shape should be \((\mathcal{B}, d_1, d_2, \ldots, d_K)\) for the \(K\)-dimensional case, with each element representing a class index for the corresponding spatial location.

For Probabilities as Targets (applicable in scenarios such as label smoothing or blended labels):

The shape of the targets must match the shape of the input logits tensor: \((C)\) for unbatched input, \((\mathcal{B}, C)\) for batched input, or \((\mathcal{B}, C, d_1, d_2, \ldots, d_K)\) for \(K\)-dimensional input. Each element in this tensor should be the probability of the corresponding class, with values in \([0, 1]\).

Loss Computation#

For Class Indices as Targets:

The loss for each element \(n\), where \(n\) ranges over all \(N\) batch and spatial positions, is calculated as:

\[ \ell(x, y) = \mathcal{L} = \{l_1, \ldots, l_{N}\}^{\top}, \quad l_n = -w_{y_n} \cdot \log \left( \frac{\exp(x_{n, y_n})}{\sum_{c=1}^{C} \exp(x_{n, c})} \right) \cdot \mathbb{1}\{y_n \neq \text{ignore\_index}\} \]

Here, \(N\) is the total number of elements across the batch \(\mathcal{B}\) and the \(K\) additional dimensions. Consequently, if \(K=0\), \(N\) reduces to \(\mathcal{B}\).
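The per-element formula for class-index targets can be checked by hand with a few lines of plain Python. This is a minimal sketch, not the library implementation: `cross_entropy_per_element` is a hypothetical helper, the logits are made-up values, and the default `ignore_index=-100` is assumed to match the usual PyTorch default.

```python
import math


def cross_entropy_per_element(logits, targets, weights=None, ignore_index=-100):
    """Hypothetical helper: l_n for N rows of C logits and N class indices."""
    losses = []
    for x_n, y_n in zip(logits, targets):
        if y_n == ignore_index:
            # The indicator term zeroes out ignored positions.
            losses.append(0.0)
            continue
        # Numerically stable log of the softmax denominator (log-sum-exp).
        m = max(x_n)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in x_n))
        w = 1.0 if weights is None else weights[y_n]
        losses.append(-w * (x_n[y_n] - log_sum_exp))
    return losses


logits = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0], [1.0, 3.0, 0.5]]  # N=3, C=3
targets = [0, 2, -100]  # the last position is ignored
losses = cross_entropy_per_element(logits, targets)
print(losses)
```

The second row has uniform logits, so its loss is \(\log 3\), and the ignored third position contributes exactly \(0\).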

For Probabilities as Targets:

In cases where the targets are probabilities, the loss for each element \(n\), with \(n\) again ranging over all \(N\) elements, is:

\[ \ell(x, y) = \mathcal{L} = \{l_1, \ldots, l_{N}\}^{\top}, \quad l_n = -\sum_{c=1}^{C} w_c \cdot y_{n, c} \cdot \log \left( \frac{\exp(x_{n, c})}{\sum_{i=1}^{C} \exp(x_{n, i})} \right) \]
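As a sanity check on the probability-target formula, the sketch below shows that a one-hot target recovers the class-index loss, while a softer target spreads the penalty over all classes. The helper names and the example vectors are assumptions made for illustration.

```python
import math


def log_softmax(x):
    """Log of the softmax of a list of logits, computed stably."""
    m = max(x)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in x))
    return [v - log_sum_exp for v in x]


def soft_target_loss(x_n, y_n, weights=None):
    """Hypothetical helper: l_n when the target y_n is a probability vector."""
    log_probs = log_softmax(x_n)
    w = [1.0] * len(x_n) if weights is None else weights
    return -sum(w_c * y_c * lp for w_c, y_c, lp in zip(w, y_n, log_probs))


x = [2.0, 0.0, 0.0]          # made-up logits for one element
one_hot = [1.0, 0.0, 0.0]    # equivalent to class index 0
soft = [0.9, 0.05, 0.05]     # an assumed soft label

print(soft_target_loss(x, one_hot))  # equals -log_softmax(x)[0]
print(soft_target_loss(x, soft))     # larger: mass on the unlikely classes is penalised
```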

Reduction#

No Reduction (reduction='none'): When the reduction is set to ‘none’, the loss computation keeps one value per input element instead of collapsing to a scalar. The returned tensor has the shape \((\mathcal{B}, d_1, \ldots, d_K)\), where each element \(l_{n}\) is the computed loss for the corresponding input element across the batch and any \(K\)-dimensional space:

\[ \mathcal{L} = \{l_1, \ldots, l_N\} \]

This preserves the granularity of loss across the dataset, allowing for detailed analysis or custom reduction post hoc.

Mean Reduction (reduction='mean'): For the ‘mean’ reduction, the losses across all elements are averaged to yield a single scalar value. The average is taken over all \(N\) elements, spanning the batch and any additional dimensions, not merely over the batch size \(\mathcal{B}\):

\[ \mathcal{L}_{mean} = \frac{1}{N} \sum_{n=1}^{N} l_n \]

Here, traditionally we think of \(N\) as just the number of elements in the batch, but in the implementation, it spans all elements across the batch and \(K\)-dimensional spaces. Note that this formula assumes no class weights and no ignored targets. With class-index targets, PyTorch instead divides by \(\sum_{n=1}^{N} w_{y_n} \cdot \mathbb{1}\{y_n \neq \text{ignore\_index}\}\), which equals \(N\) in the unweighted case with nothing ignored.

Sum Reduction (reduction='sum'): With ‘sum’ reduction, the losses for all elements are aggregated into a single scalar through summation, without averaging. This sums the losses across all elements, including those across the batch and \(K\)-dimensional spaces:

\[ \mathcal{L}_{sum} = \sum_{n=1}^{N} l_n \]

This scalar represents the total loss accumulated across the entire input set, providing a measure of overall loss magnitude without normalization by the number of elements.
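The three reduction modes can be compared on a small grid of per-element losses. This is a sketch under the assumption of no class weights and no ignored targets, so dividing by \(N\) is the appropriate normalisation; `reduce_losses` and the loss values are hypothetical.

```python
def reduce_losses(losses, reduction="mean"):
    """Hypothetical helper: reduce a (B, d_1) nested list of per-element losses."""
    flat = [l_n for row in losses for l_n in row]  # all N = B * d_1 elements
    if reduction == "none":
        return losses  # shape (B, d_1) is preserved
    if reduction == "sum":
        return sum(flat)
    if reduction == "mean":
        return sum(flat) / len(flat)  # average over N, not over B
    raise ValueError(f"Unknown reduction: {reduction}")


# Made-up losses for B=2 sequences of d_1=3 positions, so N=6.
losses = [[0.5, 1.5, 1.0], [2.0, 0.0, 1.0]]

print(reduce_losses(losses, "none"))
print(reduce_losses(losses, "sum"))
print(reduce_losses(losses, "mean"))
```

Averaging over the batch size alone would give \(6.0 / 2 = 3.0\) here, whereas the mean over all \(N = 6\) elements is \(1.0\).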