Stage 10. Continuous Integration, Deployment, Learning and Training (DevOps, DataOps, MLOps)#

Fig. 54 CI/CD and automated ML pipeline.#

Continuous Integration (CI)#

Continuous Integration (CI) is a software development practice that focuses on frequently integrating code changes from multiple developers into a shared repository. CI aims to detect integration issues early and ensure that the software remains in a releasable state at all times.

A CI workflow refers to the series of steps and automated processes involved in the continuous integration of code changes. It typically involves building and testing the software, often in an automated and reproducible manner.

The primary goal of a CI workflow is to identify any issues or conflicts that may arise when integrating code changes. By continuously integrating code, developers can catch and fix problems early, reducing the chances of introducing bugs or breaking existing functionality.

CI workflows usually include steps such as code compilation, running tests, generating documentation, and deploying the application to test environments. These steps are often automated, triggered by events such as code pushes or pull requests.

Popular CI tools like Jenkins, Travis CI, and GitHub Actions provide mechanisms to define and execute CI workflows. These tools integrate with version control systems and offer a range of features, including customizable build and test environments, notifications, and integration with other development tools.
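To make the idea concrete, a CI workflow can be reduced to an ordered list of commands that must all exit successfully. The following is a minimal sketch of that idea and not how Jenkins, Travis CI, or GitHub Actions are implemented; the step names and the `run_pipeline` helper are hypothetical, and the commands are stand-ins for real build and test steps.

```python
import subprocess
import sys

# Hypothetical steps: each is a (name, command) pair. A real pipeline would
# call a compiler, a linter, a test runner and so on.
STEPS = [
    ("build", [sys.executable, "-c", "print('compiling...')"]),
    ("test", [sys.executable, "-c", "assert 1 + 1 == 2"]),
]


def run_pipeline(steps):
    """Run steps in order; stop at the first failure and report its name."""
    for name, command in steps:
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            return False, name
    return True, None


if __name__ == "__main__":
    ok, failed_step = run_pipeline(STEPS)
    print("pipeline passed" if ok else f"pipeline failed at: {failed_step}")
```

Stopping at the first failing step mirrors the goal stated above: surface integration problems as early as possible.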

Technical Debt is Beyond Bad Code#

First, we should drop the assumption that technical debt is equivalent to bad code[1]. While the two often coincide, equating them is a simplification that can lead to misunderstanding and miscommunication. Someone who takes the contrapositive and concludes that good code carries no technical debt, and that there is therefore no need to revisit, document, lint, and test the code[1], is reasoning validly from a false premise and will be in for a rude awakening.

Philosophy aside, the practical reality is that no one writes perfect code, and even small amounts of “bad code” accumulate, some of it not immediately obvious. Without an assurance policy (read: Continuous Integration/Continuous Deployment) in place, we end up with a codebase full of technical debt, and no developer wants to inherit such a codebase.

On a more serious note, the broader implication of technical debt is that the application sitting on top of it becomes unreliable, unstable, and insecure, leading to bugs, security vulnerabilities, and performance issues. Worse, once this code ships to production, the cost of fixing those bugs is typically far higher than if they had been fixed during development. Fortunately, any competent tech organization will have a CI/CD pipeline in place and will go through various stages of testing and validation before shipping to production.

Before we move on, we need to be crystal clear that CI/CD is not a silver bullet that will eradicate all bugs and technical debt. After all, we often see code breaking in production, and the reason for this is twofold:

How you implement your CI/CD pipeline matters. Skipping certain stages, such as type safety checks, makes bugs much more likely in dynamic languages like Python.

How you write your code matters. If your unit tests are not robust, they will not catch the bugs they are supposed to catch.

Even if you think you satisfy both points, the well-known saying “To err is human” is a reminder that bugs are inevitable and that there is no such thing as a 100% bug-free codebase. The goal is not to eradicate bugs but to reduce them: it is a numbers game.

Lifecycle#


Phase 1. Planning#

The Planning stage involves defining what needs to be built or developed. It’s a crucial phase where project managers, developers, and stakeholders come together to identify requirements, set goals, establish timelines, and plan the resources needed for the project. This stage often involves using project tracking tools and Agile methodologies like scrum or kanban to organize tasks, sprints, and priorities.

Common tech stack includes:

Jira: A popular project management tool that helps teams plan, track, and manage agile software development projects.

Confluence: A team collaboration tool that helps teams create, share, and collaborate on projects.

Phase 2. Development#

Coding or development is where the actual software creation takes place. Developers write code to implement the planned features and functionalities, adhering to coding standards and practices. Version control systems, such as Git, play an important role in this stage, enabling developers to collaborate on code, manage changes, and maintain a history of the project’s development.

Set Up Main Directory in Integrated Development Environment (IDE)#

Let us assume that we are in our home folder ~/ and want to create a new project called yolo in Microsoft Visual Studio Code. We can do so as follows:

~/ $ mkdir yolo && cd yolo

~/yolo $ code .

If you are cloning a repository to your local folder yolo, you can also do:

~/yolo $ git clone git@github.com:<username>/<repo-name>.git .

where . means cloning to the current directory.

README, LICENSE and CONTRIBUTING#

README#

The README.md file serves as the front page of your repository. It should

provide all the necessary information about the project, including:

Project Name and Description: Clearly state what your project does and why it exists.

Installation Instructions: Provide a step-by-step guide on how to get your project running on a user’s local environment.

Usage Guide: Explain how to use your project, including examples of common use cases.

Contributing: Link to your CONTRIBUTING.md file and invite others to contribute to the project.

License: Mention the type of license the project is under, with a link to the full LICENSE file.

Contact Information: Offer a way for users to ask questions or get in touch.

We can create a README.md file to describe the project using the following

command:

~/yolo $ touch README.md

LICENSE#

The LICENSE file is critical as it defines how others can legally use, modify,

and distribute your project. If you’re unsure which license to use,

choosealicense.com can help you decide.

~/yolo $ touch LICENSE

After creating the file, you should fill it with the text of the license you’ve chosen. This could be the MIT License, GNU General Public License (GPL), Apache License 2.0, etc.

CONTRIBUTING#

CONTRIBUTING.md outlines guidelines for contributing to your project. This

might include:

How to File an Issue: Instructions for reporting bugs or suggesting enhancements.

How to Submit a Pull Request (PR): Guidelines on the process for submitting a PR, including coding standards, test instructions, etc.

Community and Behavioral Expectations: Information on the code of conduct and the expectations for community interactions.

~/yolo $ touch CONTRIBUTING.md
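The three `touch` commands above can also be scripted, which is handy when bootstrapping many repositories. This is a minimal sketch using only the standard library; the `scaffold` helper and the `STANDARD_FILES` constant are hypothetical names introduced for illustration.

```python
from pathlib import Path

# File names taken from the section above; the helper name is hypothetical.
STANDARD_FILES = ("README.md", "LICENSE", "CONTRIBUTING.md")


def scaffold(project_dir):
    """Create the project directory and any missing standard files."""
    root = Path(project_dir)
    root.mkdir(parents=True, exist_ok=True)
    created = []
    for name in STANDARD_FILES:
        path = root / name
        if not path.exists():
            path.touch()  # same effect as `touch <name>` for a new file
            created.append(name)
    return created
```

Calling `scaffold("yolo")` a second time returns an empty list, since existing files are left untouched.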

Version Control#

Version control systems, such as Git, are foundational to modern software development practices, including Continuous Integration and Continuous Deployment/Delivery (CI/CD). They make CI/CD possible by enabling developers to work on the same code base simultaneously, track every change, and trigger automated builds, tests, and deployments from code commits and merges.

Initial Configuration#

Before you start using Git, you should configure your global username and email associated with your commits. This information identifies the author of the changes and is important for collaboration.

git config --global user.name <your-name>

git config --global user.email <your-email>

Replace <your-name> and <your-email> with your actual name and email address. In particular, having the correct email address is important as it is used to link your commits to your GitHub account.

Setting Up a New Repository#

If you’re starting a new project in a local directory (e.g., ~/yolo), you’ll

follow these steps to initialize it as a Git repository, stage your files, and

make your first commit.

Create a .gitignore File: This file tells Git which files or directories to ignore in your project, like build directories or system files.

~/yolo $ touch .gitignore

Populate .gitignore with patterns to ignore. For example:

.DS_Store
__pycache__/
env/

which can be done using the following command:

~/yolo $ cat > .gitignore <<EOF
.DS_Store
__pycache__/
env/
EOF

Initialize the Repository:

~/yolo $ git init

This command creates a new Git repository locally.

Add Files to the Repository:

~/yolo $ git add .

This adds all files in the directory (except those listed in .gitignore) to the staging area, preparing them for commit.

Commit the Changes:

~/yolo $ git commit -m "Initial commit"

This captures a snapshot of the project’s currently staged changes.

Connecting to a Remote Repository#

After initializing your local repository, the next step is to link it with a remote repository. This allows you to push your changes to a server, making collaboration and backup easier.

Add a Remote Repository (If you’ve just initialized a local repo or if it’s not yet connected to a remote):

~/yolo $ git remote add origin <repo-url>

Replace <repo-url> with your repository’s URL, which you can obtain from GitHub or another Git service such as GitLab or Bitbucket.

Securely Push to the Remote Using a Token (Optional):

GitHub no longer accepts account passwords for Git operations over HTTPS, so you need a personal access token (or an SSH key) to push. One way to supply the token is to embed it in the remote URL:

~/yolo $ git remote set-url origin https://<token>@github.com/<username>/<repository>

Replace <token>, <username>, and <repository> with your personal access token, your GitHub username, and your repository name, respectively. Note that this stores the token in plain text in .git/config, so treat that file as sensitive.

Push Your Changes:

~/yolo $ git push -u origin main

This command pushes your commits to the main branch of the remote repository. The -u flag sets the upstream, making origin main the default target for future pushes.

Cloning an Existing Repository#

If you’ve already cloned an existing repository, many of these steps

(specifically from initializing the repo to the first commit) are unnecessary

since the repository comes pre-initialized and connected to its remote

counterpart. You’d typically start by pulling the latest changes with git pull

and then proceed with your work.

Git Workflow#

It is important to establish a consistent Git workflow to ensure that changes are managed effectively and that the project’s history is kept clean.

You can read more about git workflows here:

Virtual Environment#

We can follow Python’s official documentation on installing packages in a virtual environment using pip and venv. In what follows, we give a brief overview of the steps to set up a virtual environment for your project.

Create Virtual Environment#

~/yolo $ python3 -m venv <venv-name>

Activate Virtual Environment#

~/yolo $ source <venv-name>/bin/activate # Unix/macOS

~/yolo $ <venv-name>\Scripts\activate # Windows

Upgrade pip, setuptools and wheel#

(venv) ~/yolo $ python3 -m pip install --upgrade pip setuptools wheel
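The same steps can be driven from Python through the standard library `venv` module, and a script can check whether it is running inside a virtual environment by comparing `sys.prefix` with `sys.base_prefix`. The sketch below assumes a throwaway temporary directory and creates the environment without pip to keep it quick; the helper names are hypothetical.

```python
import sys
import tempfile
import venv
from pathlib import Path


def in_virtual_environment():
    """True when the running interpreter belongs to a virtual environment."""
    return sys.prefix != sys.base_prefix


def create_environment(target_dir):
    """Create a bare environment (without pip, to keep the sketch fast)."""
    venv.create(target_dir, with_pip=False)
    return Path(target_dir) / "pyvenv.cfg"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        cfg = create_environment(Path(tmp) / "venv")
        print(cfg.exists())
    print(in_virtual_environment())
```

A check like `in_virtual_environment()` is a cheap guard at the top of setup scripts, so that packages are not installed into the system interpreter by accident.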

Managing Project Dependencies#

Once you’ve established a virtual environment for your project, the next step is to install the necessary libraries and packages. This process ensures that your project has all the tools required for development and execution.

Managing Dependencies with requirements.txt#

For project dependency management, the use of a requirements.txt file is a

common and straightforward approach. This file lists all the packages your

project needs, allowing for easy installation with a single command.

For simpler or moderately complex projects, a requirements.txt file is often

sufficient. Create this file and list each dependency on a separate line,

specifying exact versions to ensure consistency across different environments.

Create a requirements.txt file:

touch requirements.txt

Populate requirements.txt with your project’s dependencies. For example:

torch==1.10.0+cu113
torchaudio==0.10.0+cu113
torchvision==0.11.1+cu113
albumentations==1.1.0
matplotlib==3.2.2
pandas==1.3.1
torchinfo==1.7.1
tqdm==4.64.1
wandb==0.12.6

You can directly edit requirements.txt in your favorite text editor to include the above dependencies.

Install the dependencies from your requirements.txt file:

pip install -r requirements.txt

Certain libraries, like PyTorch with CUDA support, may require downloading

binaries from a specific URL due to additional dependencies. In such cases, you

can use the -f option with pip to specify a custom repository for dependency

links:

pip install -r requirements.txt -f https://download.pytorch.org/whl/torch_stable.html

This command tells pip to also look at the given URL for any packages listed

in your requirements.txt, which is particularly useful for installing versions

of libraries that require CUDA for GPU acceleration.

You can also have a requirements-dev.txt file for development dependencies.

touch requirements-dev.txt

These dependencies are often used for testing, documentation, and other development-related tasks.

Managing Dependencies with pyproject.toml#

pyproject.toml is a configuration file introduced in PEP 518 as a standardized way for Python projects to declare their build system requirements; PEP 621 later standardized how project metadata and dependencies are declared in the same file. It aims to replace the traditional setup.py, and in many projects requirements.txt, with a single, unified file that can handle a project’s build system requirements, dependencies, and other configurations in a standardized format.

Unified Configuration: pyproject.toml consolidates various tool configurations into one file, making project setups more straightforward and reducing the number of files at the project root.

Dependency Management: It can specify both direct project dependencies and development dependencies, similar to requirements.txt and requirements-dev.txt. Tools like pip can read pyproject.toml to install the necessary packages.

Build System Requirements: It explicitly declares the build system requirements, ensuring the correct tools are present before the build process begins. This is particularly important for projects that need to compile native extensions.

Tool Configuration: Many Python tools (e.g., black, isort, pytest) support reading configuration options from pyproject.toml, allowing developers to centralize their configurations. Not every tool does: flake8, for example, does not read pyproject.toml without a plugin.

[build-system]

requires = ["setuptools", "wheel"]

build-backend = "setuptools.build_meta"

[project]

name = "example_project"

version = "0.1.0"

description = "An example project"

authors = [{name = "Your Name", email = "you@example.com"}]

dependencies = [

"requests>=2.24",

"numpy>=1.19"

]

[project.optional-dependencies]

dev = ["pytest>=6.0", "black", "flake8"]

[tool.black]

line-length = 88

target-version = ['py38']

[tool.pytest.ini_options]

minversion = "6.0"

addopts = "-ra -q"

Now if you want to install the dependencies, you can do so with:

pip install .

and for development dependencies:

pip install ".[dev]"
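To see what an installer reads from this file, the metadata can be parsed directly. The sketch below is only an illustration of the file format, not how pip resolves dependencies. It assumes Python 3.11 or later for `tomllib` (falling back to the third-party `tomli` package otherwise), and it writes the optional dependencies as a `[project.optional-dependencies]` table, which is the form TOML parsers accept.

```python
try:
    import tomllib  # standard library from Python 3.11
except ModuleNotFoundError:  # older interpreters: assumes `pip install tomli`
    import tomli as tomllib

# A trimmed copy of the example configuration, inlined for the sketch.
PYPROJECT = """
[project]
name = "example_project"
version = "0.1.0"
dependencies = ["requests>=2.24", "numpy>=1.19"]

[project.optional-dependencies]
dev = ["pytest>=6.0", "black", "flake8"]
"""

config = tomllib.loads(PYPROJECT)
runtime_deps = config["project"]["dependencies"]
dev_deps = config["project"]["optional-dependencies"]["dev"]

print(runtime_deps)
print(dev_deps)
```

The `dev` key is what the `[dev]` extra in the install command refers to.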

At this point, you should see the following directory structure:

.
├── CONTRIBUTING.md
├── LICENSE
├── README.md
├── pyproject.toml
├── requirements.txt
├── requirements-dev.txt
└── venv
    ├── bin
    ├── include
    ├── lib
    ├── pyvenv.cfg
    └── share

You can find more information on writing your

pyproject.toml file here.

Pinning DevOps Tool Versions#

In DevOps, particularly in continuous integration (CI) environments, pinning

exact versions of tools like pytest, mypy, and other linting tools is

important. Here are the key reasons:

Reproducibility: Pinning specific versions ensures that the development, testing, and production environments are consistent. This means that code will be tested against the same set of dependencies it was developed with, reducing the “it works on my machine” problem.

Stability: Updates in these tools can introduce changes in their behavior or new rules that might break the build process. By pinning versions, you control when to upgrade and prepare for any necessary changes in your codebase, rather than being forced to deal with unexpected issues from automatic updates.

Tools like black, isort, mypy, and pylint are particularly important to

pin because they directly affect code quality and consistency. Changes in their

behavior due to updates can lead to new linting errors or formatting changes

that could disrupt development workflows.

Example 46 (Pinning Pylint Version)

Consider the Python linting tool pylint. It’s known for its thoroughness in

checking code quality and conformity to coding standards. However, pylint is

also frequently updated, with new releases potentially introducing new checks or

modifying existing ones.

Suppose your project is using pylint version 2.6.0. In this version, your

codebase passes all linting checks, ensuring a certain level of code quality and

consistency. Now, imagine pylint releases a new version, 2.7.0, which includes

a new check for a particular coding pattern (e.g., enforcing more stringent

rules on string formatting or variable naming).

Consequently, if you don’t pin the pylint version, the next time you run

an installation (e.g., pip install -r requirements.txt in local or remote),

pylint has a good chance of being updated to version 2.7.0. This update could

trigger new linting errors in your codebase, even though the code itself hasn’t

changed. This situation can be particularly disruptive in a CI/CD environment,

where a previously passing build now fails due to new linting errors.
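One way to guard against this is to have CI reject requirement files that contain unpinned entries. The following is a rough sketch under a simplifying assumption: a requirement counts as pinned only when it uses `==`. The regular expression and the `unpinned_requirements` helper are hypothetical and do not cover the full requirement syntax (URLs, environment markers, and so on).

```python
import re

# A requirement counts as pinned here when it uses `==` with a version.
# This is a deliberate simplification of the full requirement syntax.
PINNED = re.compile(r"^[A-Za-z0-9._\-\[\],]+==[^\s;]+")


def unpinned_requirements(text):
    """Return requirement lines that do not pin an exact version."""
    offenders = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not PINNED.match(line):
            offenders.append(line)
    return offenders


sample = """
pylint==2.6.0
mypy>=0.991
black
pytest==6.0.2  # pinned
"""
print(unpinned_requirements(sample))  # ['mypy>=0.991', 'black']
```

A CI step could fail the build whenever the returned list is non-empty.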

Local Pre-Commit Checks (Local Continuous Integration)#

Consider continuous integration (CI) as the practice of merging all developers’ working copies into a shared mainline several times a day. Each integration is verified by an automated build (including linting, code smell detection, type checks, unit tests, etc.) to detect integration errors as quickly as possible. This build can be triggered by modern version control systems like Git through a simple push to the repository. Tools like GitHub Actions, a CI/CD feature within GitHub, play a key role in facilitating these practices.

As we will emphasize repeatedly, to get the most out of tools like Jenkins and GitHub Actions, it’s crucial to keep the local development environment and the CI/CD pipeline consistent. This alignment ensures that the software builds, tests, and deploys in the same way locally and in the CI/CD environment. Achieving it often involves containerization tools like Docker, which encapsulate the application and its dependencies in a container that runs consistently across different computing environments. Doing so minimizes the “it works on my machine” syndrome, a common challenge in software development, and fosters a more collaborative and productive development culture. It is also common for developers to get a “shock” when their build fails in the remote CI/CD pipeline, a failure they could easily have detected if only they had run the same checks locally. Some commercial tools cannot be run locally, but for open-source tools it is good practice to run the same checks on your own machine.

Some developers will “forget” to run the same checks locally, and this is where pre-commit hooks come into play. Pre-commit hooks are scripts that run before a commit is made to the version control system.
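Conceptually, a hook is just a program that receives the staged file names and signals failure through a non-zero exit status, which blocks the commit. The sketch below is a hand-written illustration in the spirit of a syntax check, not the source of any published hook; `check_files` and `main` are hypothetical names, and the two temporary files exist only to demonstrate the behavior.

```python
import ast
import tempfile
from pathlib import Path


def check_files(paths):
    """Return the files that fail to parse as Python source."""
    broken = []
    for path in paths:
        try:
            ast.parse(Path(path).read_text(encoding="utf-8"), filename=str(path))
        except SyntaxError:
            broken.append(str(path))
    return broken


def main(argv):
    """Entry point: a non-zero return value would block the commit."""
    broken = check_files(argv)
    for path in broken:
        print(f"syntax error: {path}")
    return 1 if broken else 0


# Demonstration with one valid and one invalid file.
with tempfile.TemporaryDirectory() as tmp:
    good = Path(tmp) / "good.py"
    bad = Path(tmp) / "bad.py"
    good.write_text("x = 1\n", encoding="utf-8")
    bad.write_text("def broken(:\n", encoding="utf-8")
    exit_code = main([good, bad])

print(exit_code)  # 1
```

In a real hook, the script would end by exiting with the value of `main(sys.argv[1:])`.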

Guard Rails#

As the name suggests, guard rails are a set of rules and guidelines that help developers stay on track and avoid common security, performance, and maintainability pitfalls. These guard rails can be implemented as pre-commit hooks, which are scripts that run before a commit is made to the version control system.

Bandit is a tool designed to find common security issues in Python code. To install Bandit, run:

pip install -U bandit

You can place Bandit’s configuration in a .bandit file, but more commonly we put it in the pyproject.toml file for unification. Bandit does not pick this file up automatically; pass it explicitly, e.g. bandit -c pyproject.toml -r .

# FILE: pyproject.toml
[tool.bandit]
exclude_dirs = ["tests", "path/to/file"]
tests = ["B201", "B301"]
skips = ["B101", "B601"]

detect-secrets is a tool that can be used to prevent secrets from being committed to your repository. It can be installed using pip:

pip install -U detect-secrets

You can find more information in the usage section. People commonly place this as a hook in the .pre-commit-config.yaml file.

Safety is a tool that checks your dependencies for known security vulnerabilities. You can draw parallels to Nexus IQ, which is a commercial tool that does the same thing.

There are many more guard rails that can be implemented as pre-commit hooks, we cite Welcome to pre-commit heaven - Marvelous MLOps Substack as a good reference below:

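repos:
  - repo: https://github.com/pre-commit/pre-commit-hooks
    rev: v4.3.0
    hooks:
      - id: check-ast
      - id: check-added-large-files
      - id: check-json
      - id: check-toml
      - id: check-yaml
      - id: check-shebang-scripts-are-executable
  # detect-secrets ships its own hook repository; the rev below is an
  # assumption, pin whichever release you have vetted.
  - repo: https://github.com/Yelp/detect-secrets
    rev: v1.4.0
    hooks:
      - id: detect-secrets
  - repo: https://github.com/PyCQA/bandit
    rev: 1.7.4
    hooks:
      - id: bandit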

Styling, Formatting, and Linting#

Guido van Rossum, the author of Python, aptly stated, “Code is read more often than it is written.” This principle underscores the necessity of both clear documentation and easy readability in coding. Adherence to style and formatting conventions, particularly those based on PEP8, plays a vital role in achieving this goal. Different teams may adopt various conventions, but the key lies in consistent application and the use of automated pipelines to maintain this consistency. For instance, standardizing line lengths simplifies code review, making discussions about specific sections more straightforward. In this context, linting and formatting emerge as critical tools for maintaining high code quality. Linting, the process of analyzing code for potential errors, and formatting, which ensures a uniform appearance, together boost readability and maintainability. A well-styled codebase not only looks professional but also reduces bugs and eases integration and code reviews. These practices, once they become second nature to developers, lead to more robust and efficient software development.

This part is probably what most people are familiar with. We list some common tools for styling, formatting, and linting below (cited from Welcome to pre-commit heaven - Marvelous MLOps Substack):

repos:
  - repo: https://github.com/pre-commit/pre-commit-hooks
    rev: v4.3.0
    hooks:
      - id: end-of-file-fixer
      - id: mixed-line-ending
      - id: trailing-whitespace
  - repo: https://github.com/psf/black
    rev: 22.10.0
    hooks:
      - id: black
        language_version: python3.11
        args:
          - --line-length=128
      - id: black-jupyter
        language_version: python3.11
  - repo: https://github.com/pycqa/isort
    rev: 5.11.5
    hooks:
      - id: isort
        args: ["--profile", "black"]
  - repo: https://github.com/pycqa/flake8
    rev: 5.0.4
    hooks:
      - id: flake8
        args:
          - "--max-line-length=128"
        additional_dependencies:
          - flake8-bugbear
          - flake8-comprehensions
          - flake8-simplify
  - repo: https://github.com/pre-commit/mirrors-mypy
    rev: v0.991
    hooks:
      - id: mypy

Tests#

Testing is a critical part of the software development process. It helps ensure that the code behaves as expected and that changes don’t introduce new bugs or break existing functionality. Unit tests, in particular, focus on testing individual components or units of code in isolation. They help catch bugs early and provide a safety net for refactoring and making changes with confidence.

Usually, unit tests are run as part of pre-merge checks to ensure that the changes being merged don’t break existing functionality, whereas post-merge checks can entail more comprehensive tests such as integration tests and end-to-end tests.

Set up pytest for testing code, for example with the following pinned versions:

pytest==6.0.2

pytest-cov==2.10.1

In general, pytest expects our test code to be grouped under a folder called tests. We can configure this in our pyproject.toml file if we want pytest to look in a different directory. After locating the folder holding the test code, pytest then looks for Python files matching test_*.py; we can also change this pattern if we want pytest to pick up other file names[^testing_made_with_ml].

# Pytest

[tool.pytest.ini_options]

testpaths = ["tests"]

python_files = "test_*.py"
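With this configuration, a file such as the one sketched below would be collected, because its name matches `test_*.py` and its functions start with `test_`. The file name and the `slugify` function are hypothetical and exist only for illustration; in a real project the function under test would be imported from the package rather than defined in the test file.

```python
# tests/test_text_utils.py  (hypothetical file name matching `test_*.py`)


def slugify(title):
    """Toy function under test; in a real project it would be imported."""
    return "-".join(title.lower().split())


def test_slugify_joins_words_with_hyphens():
    assert slugify("Hello World") == "hello-world"


def test_slugify_collapses_extra_whitespace():
    assert slugify("  CI   and  CD ") == "ci-and-cd"
```

Running `pytest` from the project root would then discover and run both tests.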


Git Sanity Checks#

Git sanity checks are a set of rules and guidelines that help developers avoid common mistakes and pitfalls when working with Git. More specifically, we have the below:

commitizen: This hook encourages developers to use the Commitizen tool for formatting commit messages. Commitizen standardizes commit messages based on predefined conventions, making the project’s commit history more readable and navigable. Standardized messages make the purpose of each change easier to understand, which aids debugging and project management. Even if you rarely need to sift through commit messages, this is good practice (imagine a history where every commit message is “111”, “fix bug”, or “update”).

commitizen-branch: A specific use of the Commitizen validation that can be configured to work at different stages, such as during branch pushes. This ensures that commits pushed to branches also follow the standardized format, maintaining consistency not just locally but across the repository.

check-merge-conflict: This hook checks for merge conflict markers (e.g., <<<<<<<, =======, >>>>>>>). These markers indicate unresolved merge conflicts, which should not be committed to the repository as they can break the codebase. Preventing such commits helps maintain the integrity and operability of the project.

no-commit-to-branch: It prevents direct commits to specific branches (commonly the main or master branch). This practice encourages the use of feature branches and pull requests, fostering code reviews and discussions before changes are merged into the main codebase. It’s a way to ensure that changes are vetted and tested, reducing the risk of disruptions in the main development line.

Again, citing from Welcome to pre-commit heaven - Marvelous MLOps Substack:

repos:
  - repo: https://github.com/commitizen-tools/commitizen
    rev: v2.35.0
    hooks:
      - id: commitizen
      - id: commitizen-branch
        stages: [push]
  - repo: https://github.com/pre-commit/pre-commit-hooks
    rev: v4.3.0
    hooks:
      - id: check-merge-conflict
      - id: no-commit-to-branch
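To illustrate what a commit message check amounts to, the sketch below validates the first line of a message against a simplified pattern in the style of Conventional Commits. It is not Commitizen’s implementation: the list of allowed types, the regular expression, and the `is_conventional` helper are assumptions made for this example.

```python
import re

# Simplified pattern in the spirit of the Conventional Commits format:
#   <type>(<optional scope>): <subject>
# The list of types is an assumption for illustration, not commitizen's own.
COMMIT_PATTERN = re.compile(
    r"^(feat|fix|docs|style|refactor|perf|test|build|ci|chore)"
    r"(\([a-z0-9_\-]+\))?!?: .+"
)


def is_conventional(message):
    """Check only the first line of the commit message."""
    lines = message.splitlines()
    first_line = lines[0] if lines else ""
    return bool(COMMIT_PATTERN.match(first_line))


for message in ["fix(parser): handle empty input", "update", "111"]:
    print(message, "->", is_conventional(message))
```

Only the first message passes; “update” and “111” are exactly the kind of history the hook is meant to prevent.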

Code Correctors#

This is entering “riskier” territory, as code correctors can automatically correct your code.

pyupgrade is a tool that automatically upgrades Python syntax to newer idioms supported by the minimum Python version you target. It takes existing Python code and refactors it where possible to use newer syntax features that are more efficient, readable, or otherwise preferred. For instance, it might convert old-style string formatting to f-strings or, when targeting Python 3.9 and above, replace typing.List[int] with the built-in list[int].

yesqa automatically removes unnecessary # noqa comments from the code. # noqa is used to tell linters to ignore specific lines of code that would otherwise raise warnings or errors. However, over time, as the code evolves, some of these # noqa comments might no longer be necessary because the issues have been resolved or the code has changed.
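The snippet below shows, by hand, the kind of rewrite a syntax upgrader aims for: three spellings of the same string, from oldest to newest. It is written manually for illustration and is not actual tool output; the variable names are arbitrary.

```python
name, count = "yolo", 3

# Older styles that a syntax upgrader would typically flag.
old_percent = "project %s has %d checks" % (name, count)
old_format = "project {} has {} checks".format(name, count)

# Equivalent f-string (available from Python 3.6).
new_fstring = f"project {name} has {count} checks"

print(old_percent == old_format == new_fstring)  # True
```

Because the three forms produce identical strings, the rewrite is behavior-preserving, which is what makes automatic correction acceptable here despite the risk noted above.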

Setting Up Pre-Commit#

Install Pre-Commit:

~/yolo (venv) $ pip install -U pre-commit
~/yolo (venv) $ pre-commit install

Create a .pre-commit-config.yaml File:

~/yolo (venv) $ touch .pre-commit-config.yaml

Populate .pre-commit-config.yaml with the desired hooks. Sample .pre-commit-config.yaml file:

repos:
  - repo: https://github.com/pre-commit/pre-commit-hooks
    rev: v4.5.0
    hooks:
      - id: check-added-large-files
      - id: check-ast
      - id: check-builtin-literals
      - id: check-case-conflict
      - id: check-docstring-first
      - id: check-executables-have-shebangs
      - id: check-json
      - id: check-shebang-scripts-are-executable
      - id: check-symlinks
      - id: check-toml
      - id: check-vcs-permalinks
      - id: check-xml
      - id: check-yaml
      - id: debug-statements
      - id: destroyed-symlinks
      - id: mixed-line-ending
      - id: trailing-whitespace

Run Pre-Commit:

Sample command to run pre-commit on all files:

~/yolo (venv) $ pre-commit run --all-files

This command runs all the hooks specified in the .pre-commit-config.yaml file on all files in the repository.

Documentation#

Documentation is severely overlooked in many organizations. However, documentation is a fundamental part of the development process as it provides guidance for users and developers through carefully crafted explanations, cookbooks, tutorials and API references.

The documentation should be part of the CI/CD pipeline: every time you update the documentation, it should be automatically built and deployed to the documentation hosting platform.

Sphinx: A tool that makes it easy to create intelligent and beautiful documentation for Python projects. It is commonly used to document Python libraries and applications, but it can also be used to document other types of projects.

MkDocs: A fast, simple, and downright gorgeous static site generator that’s geared towards building project documentation.

Sphinx API Documentation: A tool that automatically generates API documentation from your source code. It’s commonly used in conjunction with Sphinx to create API references for Python projects.

A Word on Type Safety Checks in Python#

Python is Strongly and Dynamically Typed#

First of all, one should be clear that Python is considered a strongly and dynamically typed language[2].

Dynamic because runtime objects have a type, as opposed to the variable having

a type in statically typed languages. For example, consider a variable

str_or_int in python:

str_or_int: str | int = "hello"

and later in the code, we can reassign it to an integer:

str_or_int = 42

This is not possible in statically typed languages, where the type of a variable is fixed at compile time.

Strongly because Python does not allow unreasonable arbitrary type conversions/coercions. You are not allowed to concatenate a string with an integer, for example:

"hello" + 42

will raise a TypeError exception.
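Both properties can be observed in a few lines. This is a small illustrative snippet; the variable names are arbitrary.

```python
value = "hello"
print(type(value).__name__)   # the object bound to `value` is a str

value = 42                    # rebinding the same name to an int is allowed
print(type(value).__name__)

try:
    "hello" + 42              # no implicit str/int coercion
except TypeError as error:
    message = str(error)
    print(message)

print("hello" + str(42))      # an explicit conversion is required
```

The name `value` carries no type of its own (dynamic typing), while the failed concatenation shows that objects are not silently coerced (strong typing).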

MyPy: Static Type Checking#

Static type checking is the process of verifying the type safety of a program

based on analysis of some source code. mypy is probably the most recognized

static type checker, in which it analyzes your code without executing it,

identifying type mismatches based on the type hints you’ve provided. mypy is

most valuable during the development phase, helping catch type-related errors

before runtime.

Consider the following very simple example:

def concatenate(a: str, b: str) -> str:
    return a + b

Here, we’ve used type hints to specify that a and b are strings, and that

the function returns a string. A subtle bug can be introduced just for the sake

of illustration:

concatenate("hello", 42) # mypy will catch this

This will raise a TypeError exception at runtime, but mypy will catch this

before the code is executed. One would argue that this is a trivial example,

since runtime will catch this error. However, in a larger codebase, you may not

have the luxury of running the entire codebase to catch such errors.

Furthermore, there are even more silent errors that runtime may not catch due

to a certain combination that does not immediately raise an exception.
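A hypothetical example of such a silent error: the function below is annotated to take an `int`, but because type hints are not enforced at runtime, passing a string does not fail. It quietly produces a different kind of result. The `double` function is made up for this illustration.

```python
def double(x: int) -> int:
    return x * 2


print(double(21))  # 42

# Type hints are not enforced at runtime, so this call does not fail:
# `*` on a string means repetition. A static checker such as mypy is
# expected to flag the argument as incompatible with `int`.
silent = double("21")
print(repr(silent))  # '2121'
```

No exception is raised, so only a static check (or a test that inspects the result) would reveal the mistake.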

TypeGuard: Runtime Type Checking#

Unlike mypy, typeguard operates at runtime. In other words, mypy only reports a type mismatch before the code runs, whereas typeguard raises an error while the code is running. Consequently, we can view typeguard as a runtime type checker that is complementary to mypy.

typeguard is particularly useful in testing scenarios or in development

environments where you want to ensure that type annotations are being respected

at runtime. It can catch type errors that static analysis might not catch due to

the dynamic nature of Python or when dealing with external systems that might

not adhere to the expected types.

To use TypeGuard, you typically employ it through decorators or with type checking in unit tests.

from typeguard import typechecked

@typechecked

def greet(name: str) -> str:

return 'Hello ' + name

try:

greet(42)

except TypeError as e:

print(e)

Release and Versioning#

Phase 3. Build#

Considering we have come this far in the development phase, where we have set up our main directory, version control, virtual environment, project dependencies, local pre-commit checks, documentation, and release and versioning, we can now touch on the build phase, where automation is the key. We need a way to automate whatever we have done in the development phase, and to report back to every team member whether a build that passed locally “really” succeeds.

Dockerize the application to obtain an environment identical to production.

Infrastructure as Code (IaC) for cloud deployment.

Container orchestration for scaling and managing containers.

Pre-Merge Checks#

Pre-merge (commit) checks ensure the following:

The requirements can be installed on various operating systems and Python versions.

The code meets quality standards and adheres to PEP8 (or other coding standards).

All tests pass.

```yaml
name: Commit Checks # (1)
on: [push, pull_request] # (2)

jobs: # (3)
  check_code: # (4)
    runs-on: ${{ matrix.os }} # (5)
    strategy: # (6)
      fail-fast: false # (7)
      matrix: # (8)
        os: [ubuntu-latest, windows-latest] # (9)
        python-version: [3.8, 3.9] # (10)
    steps: # (11)
      - name: Checkout code # (12)
        uses: actions/checkout@v2 # (13)
      - name: Setup Python # (14)
        uses: actions/setup-python@v2 # (15)
        with: # (16)
          python-version: ${{ matrix.python-version }} # (17)
          cache: "pip" # (18)
      - name: Install dependencies # (19)
        run: | # (20)
          python -m pip install --upgrade pip setuptools wheel
          pip install -e .
      - name: Run Black Formatter # (21)
        run: black --check . # (22)
      # - name: Run flake8 Linter
      #   run: flake8 . # look at my pyproject.toml file and see if there is a flake8 section, if so, run flake8 on the files in the flake8 section
      - name: Run Pytest # (23)
        run: python -m coverage run --source=custom_hn_exercise_counter -m pytest && python -m coverage report # (24)
```

(1) This is the name that shows up under the Actions tab in GitHub. Name it descriptively, much like the subject line of an email.

(2) The list of events that trigger the workflow. With no branch filter, the workflow runs on every push to any branch and on every pull request event, such as opening or updating a PR.

(3) Once an event is triggered, a set of jobs runs on a runner. In our example, we run a job called `check_code` on a runner to check for formatting and linting errors as well as run the `pytest` tests.

(4) This is the name of the job that will run on the runner.

(5) We specify which operating system we want the code to run on. We can simply say `ubuntu-latest` or `windows-latest` if we just want the code to be tested on a single OS. However, here we want to check that it works on both Ubuntu and Windows, so we define `${{ matrix.os }}`, where `matrix.os` is `[ubuntu-latest, windows-latest]`. A cartesian product of the matrix values is created for us, and the job runs on both operating systems.

(6) `strategy` is a way to control how the matrix jobs are run. In our example, we want every combination to run to completion so that we see all failures, so we set `strategy.fail-fast` to `false`.

(7) By default (`fail-fast: true`), GitHub cancels the remaining in-progress and queued matrix jobs as soon as one of them fails. This is not ideal if we want results for every combination, so we set `fail-fast` to `false` to let the remaining jobs continue.

(8) `matrix` defines the variables whose combinations the job runs over. In our example, we want to run the job on both Python 3.8 and 3.9, so we set `matrix.python-version` to `[3.8, 3.9]`.

(9) The list of operating systems the job runs on, forming one axis of the cartesian product.

(10) The list of Python versions the job runs on, forming the other axis of the cartesian product. We can simply say `3.8` or `3.9` if we just want the code to be tested on a single Python version. However, here we want to check that it works on both Python 3.8 and Python 3.9, so we define `${{ matrix.python-version }}`, where `matrix.python-version` is `[3.8, 3.9]`.

(11) A list of dictionaries that defines the steps to run, in order.

(12) `name` is the display name of the step.

(13) Pin the action to a version such as `@v2` so that an upstream release of the actions/checkout template cannot silently change or break the workflow. The idea is the same as pinning versions in your `requirements.txt`: different versions may break things.

(14) `Setup Python` is a step that installs the requested Python interpreter on the runner before the later steps use it.

(15) Same as above: we pin `@v2` so that a newer release of the actions/setup-python template cannot silently break the workflow.

(16) `with` is a way to pass parameters to the step.

(17) The Python version for this job, taken from the matrix. If you only test on one Python version, you can hard-code it, for example `3.7`.

(18) `cache: "pip"` caches the packages downloaded by pip between workflow runs, which speeds up dependency installation.

(19) `Install dependencies` is a step that installs the project and its dependencies before the checks run.

(20) `|` starts a multi-line string whose lines are run as shell commands. They set up the libraries declared in the `setup.py` file.

(21) `Run Black Formatter` is a step that checks code formatting.

(22) Runs `black` with the configuration from the `pyproject.toml` file. With `--check`, `black` only reports files that would be reformatted and fails the step; it does not modify them.

(23) `Run Pytest` is a step that runs the test suite.

(24) Runs `pytest` under `coverage` and then prints the coverage report. Note that I specified `python -m` to resolve PATH issues.

Orchestration#

Here you would have many GitHub Actions or Jenkins workflows that orchestrate the build, test, and release of your application. You might also have another workflow that pushes your data pipeline image to a container registry such as AWS ECR or Google Container Registry (GCR).

Infrastructure as Code (IaC)#

You can also define templates for your infrastructure as code (IaC) in Terraform.

Phase 4. Scan and Test#

First and foremost, this section does not teach you how to write tests; that would take a whole book to cover. Writing tests is “easy”, but writing good tests is an art that is difficult to master. I want to set the stage for you to understand the importance of testing and the types of testing that exist, along with some intuition.

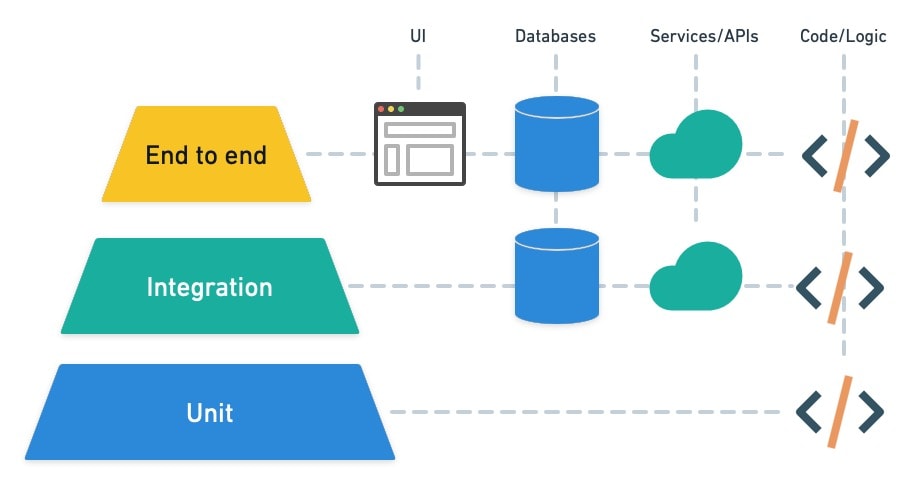

The Testing Pyramid#

The testing pyramid is a visual metaphor that illustrates the ideal distribution of testing methodologies from the base up: starting with unit tests, followed by integration tests, then system testing, and capped off with end-to-end (E2E) tests. This structure emphasizes the importance of a bottom-up approach to testing, where the majority of tests are low-level, quick, and automated unit tests, progressing to fewer, more comprehensive, and often manual tests at the top.

Unit Tests

Integration Tests

System Tests

End-to-End Tests

Fig. 55 The Testing Pyramid#

Image Credit: Testing Pyramid

Unit Testing#

Unit testing is a fundamental tool in every developer’s toolbox. Unit tests not only help us test our code, they encourage good design practices, reduce the chances of bugs reaching production, and can even serve as examples or documentation on how code functions. Properly written unit tests can also improve developer efficiency.

Intuition#

Unit tests are the smallest and most granular tests in the testing pyramid. This can be explained through an analogy of a building. If you think of your application as a building, unit tests are the bricks. They are the smallest, most fundamental building blocks of your application. They test the smallest pieces of code, such as functions, methods, or classes, in isolation from the rest of the application.

You need to ensure each brick is solid and reliable before you can build a sturdy, reliable building. Similarly, you need to ensure each unit of code is solid and reliable before you can build a sturdy, reliable application.

Benefits of Unit Testing#

Early Bug Detection and Reduce Cost#

Why not wait and catch bugs once the application is in production? Cost. It is expensive to roll back a release and fix the bug, and much cheaper to fix it when it is caught early in the development cycle. Unit tests allow problems to be detected early, saving time and effort by preventing bugs from propagating to later stages.

A 2008 research study by IBM estimates that a bug caught in production could cost 6 times as much as if it was caught during implementation[3].

Refactoring with Confidence#

Development is an iterative process. You write code, test it, and then refactor it. You repeat this process until you are satisfied with the result.

With a suite of unit tests, developers can make changes to the codebase confidently, knowing that they’ll be alerted if a change inadvertently breaks something that used to work.

Unit Test As Documentation#

Unit tests serve as a form of documentation that describes what the code is supposed to do, helping new developers understand the project’s functionality more quickly.
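As an illustration of tests doubling as documentation, consider the sketch below. The function `normalize` and both test names are hypothetical and exist only for this example. Reading the test names and assertions alone tells a newcomer what the function promises: scores are scaled to sum to one, and an all-zero input is rejected. The tests use plain `assert` statements so that they run with or without `pytest`.

```python
from typing import List


def normalize(scores: List[float]) -> List[float]:
    """Scale scores so that they sum to 1."""
    total = sum(scores)
    if total == 0:
        raise ValueError("scores must not sum to zero")
    return [score / total for score in scores]


def test_normalize_scales_scores_to_sum_to_one() -> None:
    # Documents the happy path: relative proportions are preserved.
    assert normalize([1.0, 1.0, 2.0]) == [0.25, 0.25, 0.5]


def test_normalize_rejects_all_zero_scores() -> None:
    # Documents the edge case: division by zero is reported explicitly.
    try:
        normalize([0.0, 0.0])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for all-zero scores")
```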

Dependency Injection#

Dependency Injection (DI) is a design pattern used to manage dependencies between objects in software development. It’s a technique that allows a class’s dependencies to be injected into it from the outside rather than being hardcoded within the class. This approach promotes loose coupling, enhances testability, and improves code maintainability.

At its core, DI involves three key components:

The Client: The object that depends on the service(s).

The Injector: The mechanism that injects the service(s) into the client.

The Service: The dependency or service being used by the client.

Dependency Injection can be implemented in several ways, including constructor injection, setter injection, and interface injection. Each method has its context and use case, but they all serve the same purpose: to decouple the creation of a dependency from its usage.

Link to Unit Testing#

The link between Dependency Injection and unit testing is fundamentally about making code easier to test. DI facilitates the testing process in several ways:

Isolation of Unit Tests: By injecting dependencies into a class, you can easily replace those dependencies with mocks or stubs during testing. This allows you to isolate the unit of code being tested, ensuring that tests are not affected by external factors such as databases, file systems, or network calls.

Flexibility in Test Scenarios: Dependency Injection makes it easier to create different configurations of an object for testing. You can inject different implementations of a dependency to test how the object behaves under various conditions, enhancing test coverage and robustness.

Reduced Boilerplate Code: Without DI, you might find yourself writing a lot of boilerplate code to set up objects for testing, especially if they have numerous and complex dependencies. DI frameworks can automate much of this setup, keeping your test code cleaner and focused on the behavior you’re testing.

No Dependency Injection vs Dependency Injection#

Consider a simple class that processes user data and requires a database connection to store this data. Without DI, the class might directly instantiate a connection to a specific database, making it difficult to test the class without accessing the actual database. The example below shows the same problem in a machine learning setting: a trainer that instantiates its own dataset loader.

```python
from typing import Protocol, Tuple

import numpy as np
from numpy.typing import NDArray


class DatasetLoader(Protocol):
    def load_feature_and_label(self) -> Tuple[NDArray[np.float32], NDArray[np.int32]]:
        ...


class ImageDatasetLoader:
    def load_feature_and_label(self) -> Tuple[NDArray[np.float32], NDArray[np.int32]]:
        # Explicit dtypes so the arrays match the annotated return type.
        features = np.array([[0.1, 0.2, 0.3]], dtype=np.float32)
        labels = np.array([1], dtype=np.int32)
        return features, labels


class TextDatasetLoader:
    def load_feature_and_label(self) -> Tuple[NDArray[np.float32], NDArray[np.int32]]:
        features = np.array([[0.4, 0.5, 0.6]], dtype=np.float32)
        labels = np.array([0], dtype=np.int32)
        return features, labels


class TrainerWithoutDependencyInjection:
    def __init__(self) -> None:
        # The concrete loader is hardcoded here: this is the tight coupling.
        self.dataset_loader = ImageDatasetLoader()

    def train(self) -> None:
        features, labels = self.dataset_loader.load_feature_and_label()
        print(f"Training model on features: {features} and labels: {labels}")
```

This is a classic case of tight coupling: `TrainerWithoutDependencyInjection` creates its own `ImageDatasetLoader`, so it cannot be tested in isolation from that class and its behavior. If we later want to train on text data with `TextDatasetLoader`, or substitute a lightweight fake loader in a test, we have to edit the trainer itself.

To fix this, we can use Dependency Injection to decouple the `Trainer` class from any concrete loader. `Trainer` depends only on the `DatasetLoader` interface, which allows us to inject different implementations of that interface, making the class easier to test and more flexible in terms of the data sources it can work with.

```python
# Continues from the previous listing, which defines DatasetLoader,
# ImageDatasetLoader and TextDatasetLoader.
class Trainer:
    def __init__(self, dataset_loader: DatasetLoader) -> None:
        self.dataset_loader = dataset_loader

    def train(self) -> None:
        features, labels = self.dataset_loader.load_feature_and_label()
        print(f"Training model on features: {features} and labels: {labels}")


def test_train_model_using_image_dataset_loader() -> None:
    dataset_loader = ImageDatasetLoader()
    trainer = Trainer(dataset_loader)
    trainer.train()


def test_train_model_using_text_dataset_loader() -> None:
    dataset_loader = TextDatasetLoader()
    trainer = Trainer(dataset_loader)
    trainer.train()
```

Stubs and Mocks#

In unit testing, mocks and stubs are both types of test doubles used to simulate the behavior of real objects in a controlled way. They are essential tools for isolating the piece of code under test, ensuring that tests are fast, reliable, and independent of external factors or system states. However, mocks and stubs serve slightly different purposes and are used in different scenarios.

Stubs#

Stubs provide predetermined responses to calls made during the test. They are typically used to represent dependencies of the unit under test, allowing you to bypass operations that are irrelevant to the test case, such as database access, network calls, or complex logic. Stubs are simple objects that return fixed data and are primarily used to:

Provide indirect input to the system under test.

Allow the test to control the test environment by simulating various conditions.

Avoid issues related to external dependencies, such as network latency or database access errors.

Stubs are passive and only return the specific responses they are programmed to return, without any assertion on how they were used by the unit under test.

Mocks#

Mocks are more sophisticated than stubs. They are used to verify the interaction between the unit under test and its dependencies. Mocks can be programmed with expectations, which means they can assert if they were called correctly, how many times they were called, and with what arguments. They are particularly useful for:

Verifying that the unit under test interacts correctly with its dependencies.

Ensuring that certain methods are called with the correct parameters.

Checking the number of times a dependency is interacted with, to validate the logic within the unit under test.

Mocks actively participate in the test, and failing to meet their expectations will cause the test to fail. This makes them powerful for testing the behavior of the unit under test.
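The difference between the two can be sketched with the standard library's `unittest.mock`. Everything below is illustrative: the `Trainer` here is a simplified, hypothetical variant with an extra `metrics_logger` dependency, and `StubDatasetLoader` is a hand-written stub. The stub only feeds canned data into the unit under test, while the mock is used afterwards to assert how it was called.

```python
from unittest.mock import Mock


class Trainer:
    """Hypothetical trainer that receives both collaborators via injection."""

    def __init__(self, dataset_loader, metrics_logger) -> None:
        self.dataset_loader = dataset_loader
        self.metrics_logger = metrics_logger

    def train(self) -> int:
        features, labels = self.dataset_loader.load_feature_and_label()
        num_samples = len(features)
        self.metrics_logger.log("num_samples", num_samples)
        return num_samples


class StubDatasetLoader:
    """Stub: returns fixed data and makes no assertions about its usage."""

    def load_feature_and_label(self):
        return [[0.1, 0.2], [0.3, 0.4]], [1, 0]


def test_train_logs_number_of_samples() -> None:
    logger = Mock()  # mock: records calls so the interaction can be verified
    trainer = Trainer(StubDatasetLoader(), logger)

    # State verification, driven by the stub's canned data.
    assert trainer.train() == 2

    # Behaviour verification: fails if log() was not called exactly once
    # with these arguments.
    logger.log.assert_called_once_with("num_samples", 2)
```

A reasonable rule of thumb is to reach for a stub when the test only needs input data, and for a mock when the interaction itself is what is being specified.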

Further Readings#

Integration Testing#

Unlike unit testing, which focuses on verifying the correctness of isolated pieces of code, integration testing focuses on testing the connections and interactions between components to identify any issues in the way they integrate and operate together.

Verifying the interactions between system components is crucial, especially since these components might be developed independently or in isolation. A complex system typically encompasses databases, APIs, interfaces, and more, all of which interact with each other and possibly with external systems. Integration testing plays a key role in uncovering system-wide issues, such as inconsistencies in database schemas or problems with third-party API integrations. It enhances overall test coverage and provides vital feedback throughout the development process, ensuring that components work together as intended[4].

Intuition#

The analogy of a building can be extended to understand integration testing. If unit tests verify the integrity of each brick (component), integration testing checks the mortar between bricks (interactions). It ensures that not only are the individual components reliable, but they also come together to form a cohesive whole. Just as a wall relies on the strength of both the bricks and the mortar, a software system relies on both its individual components and their interactions.

Integration testing is like verifying that the electrical and plumbing systems in a building work correctly once they are fitted together, despite each system working perfectly in isolation.

Benefits of Integration Testing#

Exposes Interface Issues#

Integration testing is crucial for detecting problems that occur when different parts of a system interact. It can uncover issues with the interfaces between components, such as incorrect data being passed between modules, or problems with the way components use each other’s APIs.

Validates Functional Coherence#

By testing a group of components together, integration testing ensures that the software functions correctly as a whole. This is particularly important for critical paths in an application where the interaction between components is complex or involves external systems like databases or third-party services.

Highlights Dependency Problems#

Complex systems often rely on external dependencies, and integration testing can reveal issues with these dependencies that might not be apparent during unit testing. This includes problems with network communications, database integrations, and interactions with external APIs.

Improves Confidence in System Stability#

Successful integration tests provide confidence that the system will perform as expected under real-world conditions. This is especially important when changes are made to one part of the system, as it helps ensure that such changes do not adversely affect other parts.


Understanding Integration Testing in Practice#

Let’s adopt the example given in Microsoft’s Code with Engineering Playbook Integration Testing Design Blocks to understand how integration testing can be applied in practice.

Objective: To ensure that independently developed modules of a banking application—login, transfers, and current balance—work together as intended.

Step 1: Identify Integration Points#

First, identify the key integration points within the application that require testing. For the banking application, these points include:

Login to Current Balance: After a successful login, the application redirects the user to their current balance page with the correct balance displayed.

Transfers to Current Balance: After a transfer is initiated, ensure that the transfer completes successfully and the current balance is updated accurately.

Step 2: Design Integration Tests#

For each identified integration point, design a test scenario that mimics real-world usage:

Login Integration Test:

Objective: Verify that upon login, the user is redirected to the current balance page with the correct balance.

Method: Use a mock user with predefined credentials. After login, assert that the redirection is correct and the displayed balance matches the mock user’s expected balance.

Transfers Integration Test:

Objective: Confirm a transfer updates the sender’s balance correctly.

Method: Create a test scenario where a mock user transfers money to another account. Verify pre-transfer and post-transfer balances to ensure the transfer amount is correctly deducted.

Note that it is generally the consensus that integration tests should be using real data and connections. So why did the example use the word “mock”? The example is using the word “mock” to refer to the user, not the data or connections. The user is a mock because it is not a real user, but a simulated user for the purpose of testing.

Techniques for Integration Testing#

Big Bang Testing#

Big Bang Testing is a straightforward but high-risk approach to integration testing where all the components or modules of a software application are integrated simultaneously, and then tested as a whole. This method waits until all parts of the system are developed and then combines them to perform the integration test. The primary advantage of this approach is its simplicity, as it does not require complex planning or integration stages. However, it has significant drawbacks:

Identifying the root cause of a failure can be challenging because all components are integrated at once, making it difficult to isolate issues.

It can lead to delays in testing until all components are ready.

There’s a higher risk of encountering multiple bugs or integration issues simultaneously, which can be overwhelming to debug and fix.

For example, if you want to test whether your `Trainer` class works correctly to train a large language model, you might need to integrate the `Trainer` class with the `LanguageModel` class, the `DatasetLoader` class, and the `Optimizer` class. So here you would integrate all these classes at once and test the `Trainer` class as a whole.

Incremental Testing#

Incremental Testing is a more systematic and less risky approach compared to Big Bang Testing. It involves integrating and testing components or modules one at a time or in small groups. This method allows for early detection of defects related to interfaces and interactions between integrated components. Incremental Testing can be further divided into two main types: Top-Down Testing and Bottom-Up Testing.

Top-Down Testing#

Top-Down Testing involves integrating and testing from the top levels of the software’s control flow downwards. It starts with the highest-level modules and progressively integrates and tests lower-level modules using stubs (simplified implementations or placeholders) for modules that are not yet developed or integrated. This approach allows for early validation of high-level functionality and the overall system’s architecture. However, it might delay testing of lower-level components and their interactions.

Advantages include:

Early testing of major functionalities and user interfaces.

Facilitates early discovery of major defects.

Disadvantages include:

Lower-level modules are tested late in the cycle, which may delay the discovery of some bugs.

Requires the creation and maintenance of stubs, which can be resource-intensive.

Bottom-Up Testing#

Bottom-Up Testing, in contrast, starts with the lowest level modules and progressively moves up to higher-level modules, using drivers (temporary code that calls a module and provides it with the necessary input for testing) until the entire system is integrated and tested. This method is beneficial for testing the fundamental components of a system early in the development cycle.

Advantages include:

Early testing of the fundamental operations provided by lower-level modules.

No need for stubs since testing begins with actual lower-level units.

Disadvantages include:

Higher-level functionalities and user interfaces are tested later in the development cycle.

Requires the development and maintenance of drivers, which can also be resource-intensive.

Both incremental approaches—Top-Down and Bottom-Up—offer more control and easier isolation of defects compared to Big Bang Testing. They also allow for parallel development and testing activities, potentially leading to more efficient use of project time and resources.
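The two incremental strategies can be contrasted on a toy two-level system. The module names below (`tokenize` as the lower-level module, `count_words` as the higher-level one) are hypothetical and chosen only for illustration. In the top-down test, a stub replaces the lower level; in the bottom-up test, the test function itself plays the role of the driver; the final test integrates the real modules.

```python
from typing import Callable, Dict, List


def tokenize(text: str) -> List[str]:
    """Lower-level module: split text into lowercase tokens."""
    return text.lower().split()


def count_words(
    text: str, tokenizer: Callable[[str], List[str]] = tokenize
) -> Dict[str, int]:
    """Higher-level module: count tokens produced by the tokenizer."""
    counts: Dict[str, int] = {}
    for token in tokenizer(text):
        counts[token] = counts.get(token, 0) + 1
    return counts


def stub_tokenizer(text: str) -> List[str]:
    """Stub standing in for a tokenizer that is not yet integrated."""
    return ["a", "b", "a"]


def test_top_down_with_stub() -> None:
    # The high-level logic is validated first; the lower level is stubbed.
    assert count_words("ignored", tokenizer=stub_tokenizer) == {"a": 2, "b": 1}


def test_bottom_up_with_driver() -> None:
    # This test acts as the driver: it calls the low-level module directly.
    assert tokenize("Hello hello World") == ["hello", "hello", "world"]


def test_integrated_modules() -> None:
    # Final step in either strategy: the real modules working together.
    assert count_words("Hello hello World") == {"hello": 2, "world": 1}
```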

Integration Test vs Acceptance Test#

As we understand the importance of integration testing, which focuses on testing the interactions between components, it is essential to distinguish integration testing from acceptance testing. While both are critical for ensuring the quality of a software system, they serve different purposes and operate at different levels of the testing pyramid.

Integration Testing: Focuses on verifying the interactions between components to identify any issues in the way they integrate and operate together. It ensures that different parts of the system work together as intended from a technical perspective.

Acceptance Testing: Focuses on confirming a group of components work together as intended from a business scenario. It is performed by end-users or clients to validate the end-to-end business flow.

Further Readings#

System Testing#

Intuition#

System testing can be likened to the inspection of a completed building before it’s opened for occupancy. After ensuring that all individual components (bricks, electrical systems, plumbing) are working correctly and are properly integrated, system testing examines the building as a whole to ensure it meets all the specified requirements. This involves checking not only the internal workings but also how the building interacts with external systems (such as electrical grids, water supply systems) and complies with all applicable codes and regulations. In software terms, system testing checks the complete and fully integrated software product to ensure it aligns with the specified requirements. It’s about verifying that the entire system functions correctly in its intended environment and meets all user expectations.

End-to-End Testing#

Intuition#

End-to-end testing takes the building analogy a step further, comparing it to not only inspecting the building as a whole but also observing how it serves its occupants during actual use. Imagine a scenario where we follow residents as they move in, live in, and use the building’s various facilities. This would include checking if the elevator efficiently transports people between floors, if the heating system provides adequate warmth during winter, and if the security systems ensure the residents’ safety. In the context of software, E2E testing involves testing the application’s workflow from beginning to end. This aims to replicate real user scenarios to ensure the system behaves as intended in real-world use. It’s the ultimate test to see if the software can handle what users will throw at it, including interacting with other systems, databases, and networks, to fulfill end-user requirements comprehensively.

Thus, while integration testing focuses on the connections and interactions between components, system testing evaluates the complete, integrated system against specified requirements, and end-to-end testing examines the system’s functionality in real-world scenarios, from the user’s perspective.

Unit vs Integration vs System vs E2E Testing#

The table below illustrates the most critical characteristics and differences among Unit, Integration, System, and End-to-End Testing, and when to apply each methodology in a project.

| | Unit Test | Integration Test | System Testing | E2E Test |
|---|---|---|---|---|
| Scope | Modules, APIs | Modules, interfaces | Application, system | All sub-systems, network dependencies, services and databases |
| Size | Tiny | Small to medium | Large | X-Large |
| Environment | Development | Integration test | QA test | Production like |
| Data | Mock data | Test data | Test data | Copy of real production data |
| System Under Test | Isolated unit test | Interfaces and flow data between the modules | Particular system as a whole | Application flow from start to end |
| Scenarios | Developer perspectives | Developers and IT Pro tester perspectives | Developer and QA tester perspectives | End-user perspectives |
| When | After each build | After Unit testing | Before E2E testing and after Unit and Integration testing | After System testing |
| Automated or Manual | Automated | Manual or automated | Manual or automated | Manual |

Unit Testing:

Tests individual units or components of the software in isolation (e.g., functions, methods).

Ensures that each part works correctly on its own.

Integration Testing:

Tests the integration or interfaces between components or systems.

Ensures that different parts of the system work together as expected.

System Testing:

Tests the complete and integrated software system.

Verifies that the system meets its specified requirements.

Acceptance Testing:

Performed by end-users or clients to validate the end-to-end business flow.

Ensures that the software meets the business requirements and is ready for delivery.

Regression Testing:

Conducted after changes (like enhancements or bug fixes) to ensure existing functionalities work as before.

Helps catch bugs introduced by recent changes.

Functional Testing:

Tests the software against functional specifications/requirements.

Focuses on checking functionalities of the software.

Non-Functional Testing:

Includes testing of non-functional aspects like performance, usability, reliability, etc.

Examples include Performance Testing, Load Testing, Stress Testing, Usability Testing, Security Testing, etc.

End-to-End Testing:

Tests the complete flow of the application from start to end.

Ensures the system behaves as expected in real-world scenarios.

Smoke Testing:

Preliminary testing to check if the basic functions of the software work correctly.

Often done to ensure it’s stable enough for further testing.

Exploratory Testing:

Unscripted testing to explore the application’s capabilities.

Helps to find unexpected issues that may not be covered in other tests.

Load Testing:

Evaluates system performance under a specific expected load.

Identifies performance bottlenecks.

Stress Testing:

Tests the system under extreme conditions, often beyond its normal operational capacity.

Checks how the system handles overload.

Usability Testing:

Focuses on the user’s ease of using the application, user interface, and user satisfaction.

Helps improve user experience and interface design.

Security Testing:

Identifies vulnerabilities in the software and ensures that the data and resources are protected.

Checks for potential exploits and security flaws.

Compatibility Testing:

Checks if the software is compatible with different environments like operating systems, browsers, devices, etc.

Sanity Testing:

A subset of regression testing, focused on testing specific functionalities after making changes.

Usually quick and verifies whether a particular function of the application is still working after a minor change.

References and Further Readings#

Phase 5. Continuous Deployment#

Usually at this stage, we have a fully integrated and tested system that is ready to be deployed from staging environment to production. The deployment process is automated and follows a defined release process.

Release#

For example, we might have a staging environment that is a copy of the production environment. We deploy to the staging environment first, and after we have thoroughly tested the system, we deploy to the production environment. This is an example of a release, and it can be easily done via workflow automation tools like GitHub Actions or GitLab CI. If the deployed system is an application, we can also deploy to container orchestration platforms like Kubernetes.

Testing In Production#

Machine learning systems are often non-deterministic, and it is not uncommon to have good local evaluation results but poor production results. Consider a machine learning system that predicts the price of a stock. You might get good backtesting results, but when you deploy to production, you realize that the model is not performing as expected due to various drifts.

We will follow Chip Huyen’s book and mention a few techniques for testing in production, so that we can still roll back to a previous version of the system if the production results are not satisfactory.

Shadow Deployment/Mirrored Deployment#

Shadow (or mirrored) deployment is a technique in which we run both the existing model \(\mathcal{M}_1\) and the new model \(\mathcal{M}_2\) in production. We do the following:

Deploy both \(\mathcal{M}_1\) and \(\mathcal{M}_2\) to production.

Every incoming user request is routed to both \(\mathcal{M}_1\) and \(\mathcal{M}_2\) for prediction. However, only the predictions from the old model \(\mathcal{M}_1\) are returned to the users.

The new predictions are logged and persisted so the developers can analyze the results later.

By doing this, we can compare the results from the old model \(\mathcal{M}_1\) and the new model \(\mathcal{M}_2\) on the same live traffic to see if the new model is performing as expected. If the new model performs better, we can promote it to serve users. If it performs worse, we simply keep serving \(\mathcal{M}_1\); since users never saw the predictions of \(\mathcal{M}_2\), nothing needs to be undone on their side.

One glaring drawback is that this is expensive to run: every request is served twice, so inference costs are likely to double.
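The routing logic described above can be sketched in a few lines. This is a minimal illustration, not a production serving layer: `ShadowRouter`, `old_model`, and `new_model` are hypothetical names, and the models are assumed to be plain callables that take a request dictionary and return a number.

```python
from typing import Any, Callable, Dict, List, Optional

Model = Callable[[Dict[str, Any]], float]


class ShadowRouter:
    """Serve the primary model's prediction and log the shadow model's."""

    def __init__(self, primary: Model, shadow: Model) -> None:
        self.primary = primary
        self.shadow = shadow
        self.shadow_log: List[Dict[str, Any]] = []

    def predict(self, request: Dict[str, Any]) -> float:
        served = self.primary(request)  # only this value reaches the user
        shadowed: Optional[float] = None
        error: Optional[str] = None
        try:
            shadowed = self.shadow(request)
        except Exception as exc:  # a shadow failure must never affect the user
            error = repr(exc)
        # Persist both predictions so they can be compared offline.
        self.shadow_log.append(
            {"request": request, "primary": served, "shadow": shadowed, "error": error}
        )
        return served


# Hypothetical stand-ins for M1 (old) and M2 (new).
def old_model(request: Dict[str, Any]) -> float:
    return 2.0 * request["x"]


def new_model(request: Dict[str, Any]) -> float:
    return 2.0 * request["x"] + 0.5


router = ShadowRouter(primary=old_model, shadow=new_model)
outputs = [router.predict({"x": x}) for x in (1.0, 2.0, 3.0)]

# Offline comparison of the two models on identical traffic.
mean_abs_diff = sum(
    abs(row["primary"] - row["shadow"]) for row in router.shadow_log
) / len(router.shadow_log)
```

In a real system the shadow call would usually run asynchronously, so that it adds no latency to the user-facing response; the synchronous call here only keeps the sketch short.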

A/B Testing#

A/B Testing, also known as Split Testing, involves deploying two or more versions of an application (Version A and Version B) simultaneously to different segments of users. The primary objective is to compare the performance, user engagement, and overall effectiveness of each version to inform data-driven decisions about which version to fully roll out.

Deploy both the old model \(\mathcal{M}_1\) and the new model \(\mathcal{M}_2\) to production.

A portion of the incoming requests is routed to \(\mathcal{M}_1\), and the rest is routed to \(\mathcal{M}_2\).

Based on predictions and user feedback, we can analyze the results and decide which model to keep.

1. Randomized Traffic Allocation#

Randomly assigning users to different versions (A and B) is essential to eliminate bias and ensure that the test results are statistically valid.

Equal Opportunity: Randomization ensures that each user has an equal chance of being assigned to either version, which helps in controlling for variables that could otherwise skew results.

Representative Sample: This method creates a more representative sample of the overall user population, which helps in generalizing the results beyond the test group.

Minimizes Confounding Variables: Random assignment reduces the risk of confounding factors influencing the outcomes. For instance, if one version is shown to a certain demographic, the results might be biased due to inherent differences between that demographic and others.
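One common way to approximate random assignment in practice is to hash a stable user identifier, so that the split is effectively random across users while each user always sees the same version. The sketch below assumes string user IDs; `assign_variant`, the experiment name `"ranker-v2"`, and the 50/50 split are illustrative choices, not part of the original text.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to 'A' (control) or 'B' (treatment)."""
    # Salting with the experiment name keeps assignments independent
    # across different experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # pseudo-uniform value in [0, 1)
    return "B" if bucket < treatment_share else "A"


# Sanity check: the split should be close to the requested share.
counts = Counter(assign_variant(f"user-{i}", "ranker-v2") for i in range(10_000))
```

Hashing is pseudo-random rather than truly random, but it has the practical advantage that no assignment table needs to be stored: the same user ID always yields the same variant.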

2. Sufficient Sample Size#

Having a sufficiently large sample size is critical for obtaining statistically significant results.

Statistical Power: A larger sample size increases the statistical power of the test, making it more likely to detect a true effect if one exists. This means you’re less likely to encounter Type II errors (failing to reject a false null hypothesis).

Confidence Intervals: Larger samples provide narrower confidence intervals, which gives a clearer picture of the effect size and its reliability. This helps in making informed decisions based on the results.

Minimizes Variability: With a larger sample, random variability is reduced, allowing the observed effects to be more accurately attributed to the changes being tested rather than random chance.
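To make "sufficiently large" concrete, a rough per-variant sample size can be estimated before the test starts. The sketch below uses the standard normal-approximation formula for comparing two proportions with a two-sided test, \(n = (z_{1-\alpha/2} + z_{1-\beta})^2 \, [p_A(1-p_A) + p_B(1-p_B)] / (p_A - p_B)^2\). The function name and the example conversion rates are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    p_a: float, p_b: float, alpha: float = 0.05, power: float = 0.8
) -> int:
    """Approximate users needed in EACH variant to detect p_a vs p_b."""
    if p_a == p_b:
        raise ValueError("the two rates must differ to define an effect size")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # controls Type I error
    z_beta = NormalDist().inv_cdf(power)           # power = 1 - Type II error rate
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_a - p_b) ** 2)


# Hypothetical scenario: baseline conversion of 10%, hoping to detect 12%.
n_needed = sample_size_per_variant(0.10, 0.12)
# Halving the detectable lift requires roughly four times as many users.
n_small_effect = sample_size_per_variant(0.10, 0.11)
```

The formula shows why small effects are expensive to detect: the required sample grows with the inverse square of the difference between the two rates.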

Phase 6. Continuous Monitoring and Observability#

Motivation#

Consider a financial institution with a web-based banking application that allows customers to transfer money, pay bills, and manage their accounts. The motivation for robust monitoring and observability practices comes from the critical need for security, reliability, performance, and regulatory compliance.

Financial transactions are prime targets for fraudulent activities and security breaches. By monitoring system logs, network traffic, and user activities, the bank can identify and respond to potential security incidents in real time.

Customers expect banking services to be available 24/7, without interruptions. Guaranteeing system reliability and availability is therefore paramount to establishing trust and confidence in the bank’s services. Real-time health checks and performance metrics can identify a failing server or an overloaded network segment, allowing IT teams to quickly reroute traffic or scale resources to prevent service disruption.

In other words, Murphy’s law is always at play, and things will fail at a certain point in time, and you don’t want to be oblivious to it. If a system fails, we need to know it immediately and take action (logging and tracing are important to enable easy debugging).

The What and The Why#

We won’t focus on how to set up monitoring and observability, as there are many ways to do it. For example, Grafana is one of the most popular open-source observability platforms, and it is commonly used with Prometheus, a monitoring and alerting toolkit.

Instead, we aim to build intuition for the what and the why of monitoring and observability.

| Symptom | Cause |
|---|---|
| I’m serving HTTP 500s or 404s | Database servers are refusing connections |
| My responses are slow | CPUs are overloaded by a bogosort, or an Ethernet cable is crimped under a rack, visible as partial packet loss |
| Users in Antarctica aren’t receiving animated cat GIFs | Your Content Distribution Network hates scientists and felines, and thus blacklisted some client IPs |
| Private content is world-readable | A new software push caused ACLs to be forgotten and allowed all requests |

The Four Golden Signals#

Verbatim

The below section is verbatim from the Google SRE Book.

The four golden signals of monitoring are latency, traffic, errors, and saturation. If you can only measure four metrics of your user-facing system, focus on these four.

Latency#

The time it takes to service a request. It’s important to distinguish between the latency of successful requests and the latency of failed requests. For example, an HTTP 500 error triggered due to loss of connection to a database or other critical backend might be served very quickly; however, as an HTTP 500 error indicates a failed request, factoring 500s into your overall latency might result in misleading calculations. On the other hand, a slow error is even worse than a fast error! Therefore, it’s important to track error latency, as opposed to just filtering out errors.

Traffic#

A measure of how much demand is being placed on your system, measured in a high-level system-specific metric. For a web service, this measurement is usually HTTP requests per second, perhaps broken out by the nature of the requests (e.g., static versus dynamic content). For an audio streaming system, this measurement might focus on network I/O rate or concurrent sessions. For a key-value storage system, this measurement might be transactions and retrievals per second.

Errors#

The rate of requests that fail, either explicitly (e.g., HTTP 500s), implicitly (for example, an HTTP 200 success response, but coupled with the wrong content), or by policy (for example, “If you committed to one-second response times, any request over one second is an error”). Where protocol response codes are insufficient to express all failure conditions, secondary (internal) protocols may be necessary to track partial failure modes. Monitoring these cases can be drastically different: catching HTTP 500s at your load balancer can do a decent job of catching all completely failed requests, while only end-to-end system tests can detect that you’re serving the wrong content.

Saturation#

How “full” your service is. A measure of your system fraction, emphasizing the resources that are most constrained (e.g., in a memory-constrained system, show memory; in an I/O-constrained system, show I/O). Note that many systems degrade in performance before they achieve 100% utilization, so having a utilization target is essential.

In complex systems, saturation can be supplemented with higher-level load measurement: can your service properly handle double the traffic, handle only 10% more traffic, or handle even less traffic than it currently receives? For very simple services that have no parameters that alter the complexity of the request (e.g., “Give me a nonce” or “I need a globally unique monotonic integer”) that rarely change configuration, a static value from a load test might be adequate. As discussed in the previous paragraph, however, most services need to use indirect signals like CPU utilization or network bandwidth that have a known upper bound. Latency increases are often a leading indicator of saturation. Measuring your 99th percentile response time over some small window (e.g., one minute) can give a very early signal of saturation.

Finally, saturation is also concerned with predictions of impending saturation, such as “It looks like your database will fill its hard drive in 4 hours.”

If you measure all four golden signals and page a human when one signal is problematic (or, in the case of saturation, nearly problematic), your service will be at least decently covered by monitoring.
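Stepping outside the quoted text, the following is a rough sketch of how the four signals could be derived from one window of request records. Everything here is an illustrative assumption: the `Request` record, treating status codes of 500 and above as failures, the nearest-rank percentile, and passing CPU utilization in as a ready-made saturation proxy. A real setup would normally rely on a metrics system such as Prometheus rather than hand-rolled code.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    latency_ms: float
    status: int  # HTTP status code


def percentile(values: List[float], q: float) -> float:
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def golden_signals(
    requests: List[Request], window_seconds: float, cpu_utilization: float
) -> Dict[str, float]:
    # Latency of successes and failures is tracked separately, as the text advises.
    ok = [r.latency_ms for r in requests if r.status < 500]
    failed = [r.latency_ms for r in requests if r.status >= 500]
    return {
        "traffic_rps": len(requests) / window_seconds,
        "error_rate": len(failed) / len(requests) if requests else 0.0,
        "p99_latency_ok_ms": percentile(ok, 99) if ok else 0.0,
        "p99_latency_error_ms": percentile(failed, 99) if failed else 0.0,
        "saturation": cpu_utilization,  # proxy for the most constrained resource
    }


# One-minute window: mostly fast successes, one slow success, two failures.
window = [Request(20.0, 200)] * 97 + [
    Request(900.0, 200),
    Request(5.0, 500),
    Request(3000.0, 500),
]
signals = golden_signals(window, window_seconds=60.0, cpu_utilization=0.72)
```

Note how the fast failure (5 ms) would pull an overall latency average down, while the slow failure (3000 ms) is exactly the case the text calls out as worse than a fast error; keeping the two latency series apart makes both visible.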

A Word on Monitoring in Machine Learning Systems#

In the machine learning world, we may also have to track things like model and data shifts. For example, model monitoring is about continuously tracking the performance of models in production to ensure that they continue to provide accurate and reliable predictions.

Performance Monitoring: Regularly evaluate the model’s performance metrics in production. This includes tracking metrics like accuracy, precision, recall, F1 score for classification problems, or Mean Absolute Error (MAE), Root Mean Squared Error (RMSE) for regression problems, etc.

Data Drift Monitoring: Over time, the data that the model receives can change. These changes can lead to a decrease in the model’s performance. Therefore, it’s crucial to monitor the data the model is scoring on to detect any drift from the data the model was trained on.

Model Retraining: If the performance of the model drops or significant data drift is detected, it might be necessary to retrain the model with new data. The model monitoring should provide alerts or triggers for such situations.

A/B Testing: In case multiple models are in production, monitor their performances comparatively through techniques like A/B testing to determine which model performs better.

In each of these stages, it’s essential to keep in mind principles like reproducibility, automation, collaboration, and validation to ensure that the developed models are reliable and efficient and that they provide value to the organization.
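As one concrete illustration of data drift monitoring, the sketch below computes the Population Stability Index (PSI) for a single numeric feature, comparing the values seen in production against the training data. PSI is only one of several possible drift measures and is swapped in here as an example; the function name, the equal-width binning, and the synthetic Gaussian data are assumptions. The thresholds often quoted for PSI (below 0.1 as stable, above 0.25 as significant drift) are rules of thumb, not guarantees.

```python
import math
import random
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample (training) and a current sample (production)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
production_same = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
production_shifted = [rng.gauss(1.0, 1.0) for _ in range(5_000)]  # mean has drifted

psi_same = population_stability_index(training, production_same)
psi_shifted = population_stability_index(training, production_shifted)
```

A monitoring job could compute such a score per feature on a schedule and raise an alert, or trigger retraining, when it crosses a threshold chosen for the application.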

Phase 7. Continuous Learning and Training#

We have consistently emphasized monitoring and observing drift in machine learning systems, but what should you do once you detect drift? One common approach is to retrain or fine-tune the model on new data. So on top of continuous integration, continuous deployment, and continuous monitoring, we also have continuous learning and continuous training.